👁🗨👨🤖 The Turing Test and Machine Intelligence (part II)

By Enio...

Last time we discussed the details of the Turing Test, the modern name for an experiment devised by the pioneering computer scientist Alan Turing in the middle of the last century and made known in his academic paper Computing Machinery and Intelligence. As of 2022 the paper is about 72 years old, but the problems it raises are still relevant.

We said that the proposed experiment measures the success an artificial entity, be it a machine, a computer or a program, can achieve in a distinctly human game, where success means performing as well as a human player.

This is not just any game, since computers already match or beat us in many games. It is a very specific one that Turing called the Imitation Game. What makes it hard for the artificial entity is that it must use natural language and, through it, exhibit the kind of intelligent behavior that humans attribute uniquely and unmistakably to another human being. Hence the term "imitation".

The purpose of the measurement is to find out whether an artificial entity has acquired the ability to think. In principle, then, the Turing Test provides a method for investigating whether machines, computers or programs can carry out intellectual processes comparable to human thought. This question is a kind of Holy Grail within the discipline of Artificial Intelligence.

⬆️ Alan Turing, British mathematician, pioneering computer scientist, cryptographer and philosopher. Photo: Mike McBey (CC BY-SA 3.0).

We also discussed some chatbot programs that have been relatively successful at exhibiting intelligent behaviors that humans ascribe to other humans, and noted that some of them, such as Eugene Goostman, have indeed managed to pass the Turing Test, though not in a way that has earned the consensus and acclaim of the scientific community. The reason: in all these cases the programs give the appearance of thinking, but they are not really artificial entities that can think.

This inevitably raises questions: Can the Turing Test only detect appearances rather than authentic intellectual processes? Is that a defect of the test itself? Or is it rather that computers will never be able to think and be as intelligent as humans? If so, are there conclusive obstacles that prevent it?

Among these flaws, appearances, objections and obstacles there are reasonable points that deserve an answer. In fact, Turing devotes part of his essay to answering several objections to the ideas behind his experiment, some of which already existed in his time, while others he imagined might become more serious in the future. He was right: over the years some objections have been revived, and new criticisms of the Turing Test and of the thinking-computer project have emerged.

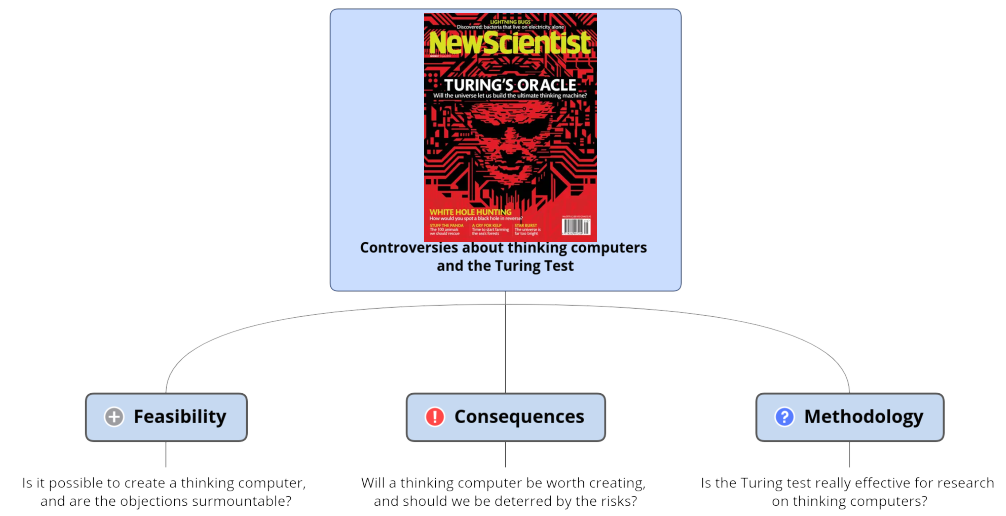

In the following I will comment on some controversial implications of the Turing Test that are part of the debate inaugurated by him within the area of artificial intelligence and even beyond. I propose to discuss those that I personally find most interesting, for which I also offer my own opinions. I even propose to organize and present them in three categories: controversies on feasibility, controversies on consequences and controversies on methodology. The following outline will help.

⬆️ Categories of controversial implications that we will address. Magazine cover image: New Scientist.

Controversies about feasibility

This section groups the objections to the real possibility of developing strong artificial intelligence, that is, intelligence of non-biological origin that is as high as a human being's or even higher, in which case we would also call it superintelligence. We should clarify that when Turing addressed the issue, the term "artificial intelligence" had not yet been coined, so he spoke instead of "thinking machines". Here, "thinking computers" and "strong artificial intelligence" refer to basically the same thing.

Is it theoretically valid, physically possible and technologically feasible to create an artificial entity whose intelligence equals or exceeds that of a human being? While researchers work to expand our knowledge in this regard, we hear good logical arguments for and against the idea, but science does not yet have a definitive answer. In my view, reaching a positive answer would require overcoming three barriers in the research problem: the theoretical barrier, the physical barrier and the technical barrier.

Let's look at the arguments for a negative answer, which are numerous and interesting.

Unraveling consciousness - a prerequisite?

One focal point of controversy in this subject is consciousness, the capacity that gives us a sense of identity, lets us recognize what is happening around us and lets us feel our own existence. Many other animals have this capacity too, but in humans it is considerably more developed.

Do computers have to be aware of their own existence to achieve a high degree of intelligence? This is often claimed. For example, in the first half of the 20th century the British neurologist Geoffrey Jefferson made the following argument, which Turing quotes:

Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain-that is, not only write it but know that it had written it. No mechanism could feel (and not merely artificially signal, an easy contrivance) pleasure at its successes, grief when its valves fuse, be warmed by flattery, be made miserable by its mistakes, be charmed by sex, be angry or depressed when it cannot get what it wants.

This opinion is still fairly common today. It is shared by many people who hold views on the subject, whatever their level of education, and it is largely driven by common sense: it is hard to believe that machines could develop and display sensitivity and the other qualities a human being possesses. Consciousness is an inner experience that human beings regard as very special.

Turing bluntly labels this position solipsistic. Solipsism is the philosophical belief that a conscious being can only be sure of its own consciousness, never of anyone else's; taken that far, the argument would equally deny consciousness to other people, so it cannot fairly be used against machines alone. Turing considers it an extreme position when raised as an obstacle, and I would add that it is somewhat romantic, that is, argued from feelings. Turing also considers that, rather than adopting such a position, it is better not to obstruct research on thinking machines that takes his test as its basis.

Still, if we treat comments such as Professor Jefferson's as a rhetorical device and set aside the charge of solipsism, the question remains valid: must computers be aware of their own existence in order to achieve a high degree of intelligence?

I will not attempt to define either concept here, but it is safe to say that the two phenomena are closely related, since we know that physical damage to the brain can affect both a person's consciousness and their intellectual abilities. In principle, this also suggests that although both processes rest on a very complex and exquisite organization of matter, they are still physical phenomena, so they can be studied and perhaps even replicated in the laboratory.

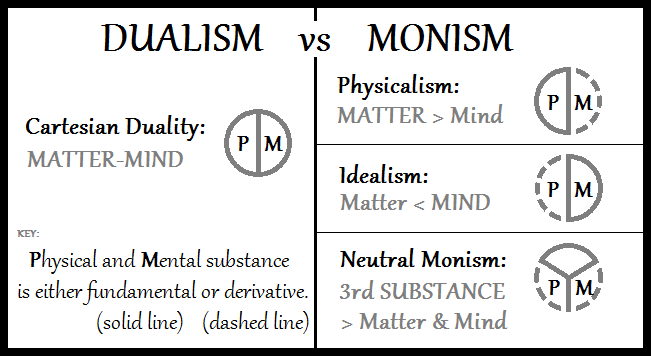

However, this last statement is dangerous, because there is a debate around the nature and origin of consciousness even more arduous than the artificial intelligence debate, where we have physicalist ideas (those that affirm that only the physical is real), some of which are mutually exclusive, and dualist ideas (those that affirm that consciousness transcends the physical). This disjunction basically tells us that science has not yet deciphered the mystery of consciousness and, therefore, we have no answer to the question posed.

⬆️ A diagram comparing neutral monism with Cartesian dualism, physicalism and idealism, positions in a long-standing debate about the mind, although most scientists today lean toward physicalist ideas. Image: Wikimedia Commons.

One of the leading scientists currently arguing against the feasibility of conscious computers is the theoretical physicist Roger Penrose. He has theorized that consciousness originates at the quantum level within neurons rather than from processes between them, a hypothesis that he and Stuart Hameroff call Orchestrated objective reduction. If true, it would add another layer of obstacles to the thinking-computer project, insofar as consciousness and thought are connected.

Other scholars, such as David Wallace, argue instead that although intuition may lead us to think that consciousness is special and requires special explanations, this need not be true, just as we do not need a fundamental physical theory to explain digestion or respiration. Consciousness could perfectly well arise at the level of neural activity, in which case the problem would be for biologists and neurologists to solve, not physicists.

In Turing's time, when this debate was not yet so heated, Turing recognized the paradoxes and difficulties of the problem but was inclined to think it unnecessary to solve the mystery of consciousness in order to know whether computers could think. Today we are not so sure we agree with him, but that does not make us any less optimistic. We will have to wait for further research to understand the connection between consciousness and artificial intelligence.

Mathematics has something to say

Another argument against the feasibility of thinking computers that I find interesting is the mathematical objection, which Turing had already considered in his time but which was re-emphasized years later. What does mathematics have to say about this? Plenty, because the theory of computation is a branch of mathematics, rooted especially in discrete mathematics, and is built on formal models such as those Turing himself used in On Computable Numbers, with an Application to the Entscheidungsproblem (1937).

According to the mathematical objection, computational logic has clear limits, as firmly established by Gödel's Incompleteness Theorems (1931) and Turing's Halting Problem (1937). These results answered, in the negative, the question of whether the consistency, completeness and decidability of mathematics could be settled by a general procedure. They show that there are logical problems that cannot be solved algorithmically, and algorithms are the very essence of computation.

There is no simple way to illustrate this, since we are dealing with highly formal systems, but the fundamental idea is that when trying to resolve a so-called Gödel sentence, an artificial intelligence program could enter a loop whose number of iterations is huge or infinite, so that the program might _never stop or produce an output_. In other words, a program that simulates thought could be sabotaged, hang, and give itself away when confronted with certain kinds of logical problems.
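
The formal proofs are beyond the scope of this article, but the diagonal trick behind Turing's Halting Problem can be sketched in a few lines of Python. This is a toy illustration under simplifying assumptions, not Turing's proof: "programs" are modeled as Python functions that receive a step budget (so the demo always terminates), and `make_contrarian`, `refutes`, `always_halts` and `never_halts` are hypothetical names chosen for the example. Given a candidate halting decider, the sketch builds a program that asks the decider about itself and then does the opposite.

```python
from typing import Callable

# A "program" is modeled as a Python function that receives a step budget and
# returns True if it finished within that budget, False if it was still running.
Program = Callable[[int], bool]
# A "decider" claims to predict halting: True means "this program halts".
Decider = Callable[[Program], bool]


def make_contrarian(decider: Decider) -> Program:
    """Build the diagonal program that does the opposite of what `decider` predicts about it."""

    def contrarian(budget: int) -> bool:
        if decider(contrarian):
            # Predicted to halt, so keep running instead. The budget only exists so
            # this demo terminates; conceptually this is an endless `while True`.
            steps = 0
            while steps < budget:
                steps += 1
            return False  # still "running" when the budget ran out
        return True  # predicted to run forever, so halt immediately

    return contrarian


def refutes(decider: Decider, budget: int = 10_000) -> bool:
    """Return True if the decider's prediction about its own contrarian is wrong."""
    program = make_contrarian(decider)
    predicted_halts = decider(program)
    observed_halts = program(budget)
    return predicted_halts != observed_halts


def always_halts(program: Program) -> bool:
    return True  # naive candidate: "everything halts"


def never_halts(program: Program) -> bool:
    return False  # naive candidate: "nothing halts"


for candidate in (always_halts, never_halts):
    print(candidate.__name__, "refuted:", refutes(candidate))
```

Running it prints `always_halts refuted: True` and `never_halts refuted: True`. Only two naive candidates are tried here; the force of Turing's argument is that the same construction defeats every possible decider, however clever, which is why no general algorithm can answer the halting question for all programs.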

This has two implications. The first is that the program could give itself away during the imitation game if the judge posed the participants a logic problem on which machines are expected to fail. In practice, though, the program could include a mechanism for avoiding the logic problem, just as the human participant would most likely do, since such a problem is an extremely formal and technical matter and not every human being has the knowledge and skills needed to solve it.
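
One way such an avoidance mechanism might look is a bounded attempt with a graceful fallback: try the computation for a limited number of steps and, if it does not finish, deflect the way a person might. The sketch below is hypothetical and not taken from any real chatbot; it uses the Collatz iteration (repeatedly halve an even number, or triple an odd one and add 1, until reaching 1) only as a stand-in for a computation whose running time the program cannot know in advance, and `collatz_steps` and `reply` are illustrative names.

```python
from typing import Optional


def collatz_steps(n: int, budget: int) -> Optional[int]:
    """Count Collatz steps from a positive n down to 1, or return None if the budget runs out."""
    steps = 0
    while n != 1:
        if steps >= budget:
            return None  # give up instead of risking an unbounded search
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


def reply(n: int, budget: int = 1_000) -> str:
    """Hypothetical chatbot reply: answer if the work is cheap, otherwise deflect like a person might."""
    steps = collatz_steps(n, budget)
    if steps is None:
        return "That would take me ages to work out by hand, so I'll pass."
    return f"Starting from {n}, it reaches 1 after {steps} steps."


print(reply(6))
print(reply(27, budget=10))
```

The first call answers (`Starting from 6, it reaches 1 after 8 steps.`), while the second exhausts its small budget and deflects instead of hanging. The budget is what keeps the program from being "given away" by a problem it cannot finish.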

The second implication, however, is that in theory the program would always be unable to beat the person at the logic problem. This would mean it lacks the intellectual capacity of a human being, suggesting at the very least that computers that simulate thinking could never match us.

Even worse, more recently it has been suggested that, unlike human beings, an artificial entity could not even simulate thought, because the mechanics of thought differ appreciably from the way computers operate. In essence, the argument rests on three premises:

- The ability to think cannot be algorithmic.

- The functioning of an intelligent computational entity is necessarily algorithmic.

- Humans can think.

From the first two premises it follows that an artificial entity, because it must implement an algorithm, would be governed by the principles of computational logic and would therefore face insurmountable limitations that prevent it from reaching the level of intelligence needed to think. In turn, combining the first premise with the third, it follows that human beings can think because their thinking is not algorithmic and escapes the limitations of computation.
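
The structure of the argument can be written out as a short derivation in first-order logic. The predicate names are an assumption chosen for this sketch: $T(x)$ for "x can think", $A(x)$ for "x operates algorithmically", $C(x)$ for "x is an intelligent computational entity", and $h$ for an arbitrary human being.

```latex
\begin{aligned}
&(1)\quad \forall x\,\bigl(T(x) \rightarrow \neg A(x)\bigr) && \text{thinking cannot be algorithmic}\\
&(2)\quad \forall x\,\bigl(C(x) \rightarrow A(x)\bigr) && \text{computational entities are algorithmic}\\
&(3)\quad T(h) && \text{humans can think}\\[4pt]
&(4)\quad \forall x\,\bigl(A(x) \rightarrow \neg T(x)\bigr) && \text{contrapositive of (1)}\\
&(5)\quad \forall x\,\bigl(C(x) \rightarrow \neg T(x)\bigr) && \text{from (2) and (4)}\\
&(6)\quad \neg A(h) && \text{from (1) and (3)}
\end{aligned}
```

Line (5) is the first conclusion (no computational entity can think) and line (6) is the second (human thinking is not algorithmic). Since the derivation itself is valid, anyone who rejects these conclusions must reject at least one of the premises.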

This reasoning is known as the Lucas-Penrose constraint. If proven true, it would constitute a theoretical barrier, since it would make it impossible in principle to build a thinking artificial intelligence on computational, discrete-state machines.

Penrose, as already mentioned, has also pointed, through his Orchestrated objective reduction proposal, to possible limits on the ability of computation to achieve or simulate consciousness.

⬆️ Shadows of the Mind: A Search for the Missing Science of Consciousness (1994), one of Roger Penrose's works in which he argues for the non-computability of consciousness, developing ideas he had raised in The Emperor's New Mind: Concerning Computers, Minds, and the Laws of Physics (1989). Image: Wikimedia Commons.

The good news for artificial intelligence researchers is that neither orchestrated objective reduction nor the Lucas-Penrose constraint has been scientifically demonstrated, so both remain theoretical models and logical arguments. In particular, it is not known whether humans really escape the Lucas-Penrose constraint, a point Alan Turing already made some 70 years ago when he responded to the mathematical objection. He said:

Although it is established that there are limitations to the powers of any particular machine, it has only been stated, without any sort of proof, that no such limitations apply to the human intellect.

Even so, this may be among the most serious of the existing objections, as Turing himself acknowledges: "But I do not think this view can be dismissed quite so lightly." We will have to wait and see.

What other objections are raised against the feasibility of developing strong artificial intelligence? And what about the methodological objections and implications of this research? We will cover these in a future article.

Some references

- Turing, A. M. (1950) Computing Machinery and Intelligence. Mind 59: 433-460.

- Saygin, A. P., Cicekli, I. and Akman, V. (2000) Turing Test: 50 Years Later. Minds and Machines 10: 463-518.

- Lucas, J. R. (1961) Minds, Machines and Gödel. Philosophy 36: 112-127.

- Penrose, R. (1994) Shadows of the Mind: A Search for the Missing Science of Consciousness. Oxford University Press. ISBN 978-0-19-853978-0.

If you are interested in more STEM (Science, Technology, Engineering and Mathematics) topics, check out the STEMSocial community, where you can find more quality content and also make your contributions. You can join the STEMSocial Discord server to participate even more in our community and check out the weekly distilled.

Notes

- The cover image is by the author and was created with public domain images.

- Unless otherwise indicated, images are the author's or in the public domain.