Initially, I wanted to propose building a community-based participatory research project on Hive (and STEMsocial). From my experience with students with no advanced knowledge of physics (and no knowledge of particle physics at all), I am quite convinced that such a project could work. This could yield a great outcome, not only scientifically but also in terms of introducing a way to use the chain to record systematically and transparently how a scientific project evolves. This would be, in my opinion, a cool precedent for Hive.

However, this requires participants who are ready to invest a few hours of their time per week, over a few months. This is where I started to hesitate. After having slept on this matter for several nights, I am still completely undecided about whether to propose this project. I will continue debating with myself this week, and anyone is free to join the debate in the comment section of this blog (even if it is a bit far from the subject of the day).

I therefore needed to find something different to write about today. This is a piece of cake, as there are so many interesting particle physics topics that I work on. After having spent the last two weeks discussing neutrinos, it is time to change gears and focus on a different topic.

The idea I want to discuss is that particle physics simulations can easily be run on computers such as those that can be bought in regular shops, and that there is no need to know anything about quantum field theory magic to run those simulations. As a matter of fact, my older son managed to simulate thousands of Large Hadron Collider collisions giving rise to the production of a Higgs boson when he was six. Obviously, he didn’t know anything about physics at this age…

In this blog I discuss how collisions such as those ongoing at CERN’s Large Hadron Collider (the LHC) work. I focus in particular on how and where new phenomena beyond the Standard Model of particle physics are expected to occur, and why we need high-energy collisions to explore territories beyond the Standard Model. Any excuse is good to provide bits of information on my world. From there, we naturally move on to computer codes, whose development is a sub-topic of my research work.

[Credits: Original image by phsymyst (CC BY 2.0)]

Accelerated protons as a probe of the high-energy frontier

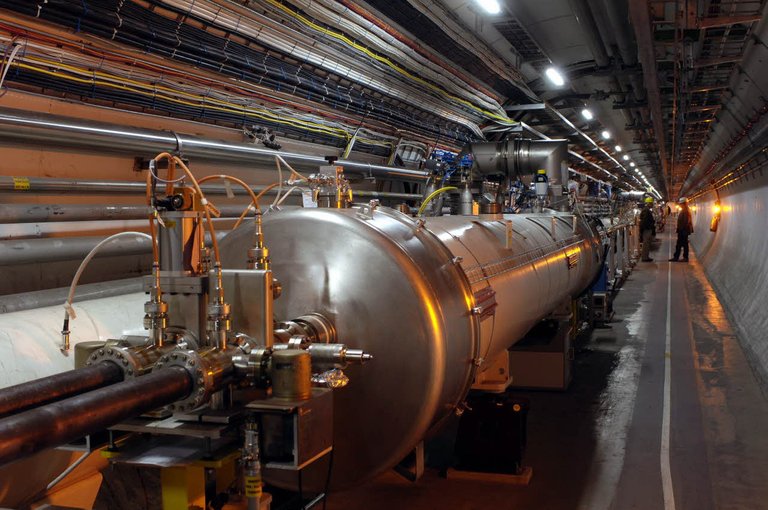

In a circular particle collider such as the LHC, protons are accelerated and collimated in two intense beams that are then smashed together at different points of the machine. Although this sentence may look unremarkable, it introduces two important concepts: high energy and high intensity.

First of all, let’s focus on high-energy collisions. We have currently explored the Standard Model of particle physics quite deeply at all energies reached so far in an experiment. We however know that once extrapolated to higher energies, this very successful theoretical framework has conceptual issues and practical limitations. It is thus important to explore the high-energy regime as much as we can by tracking new particles and new phenomena (that may also not be directly associated with a new particle). This may reveal how the Standard Model should be extended to accommodate some of its current problems.

However, we should keep in mind that an exploration process is always full of unknowns and comes with no guarantee… This is why it is so exciting, in my opinion. We have no idea about what we will find, if anything.

Particle colliders are among the tools we use to open a window on this high-energy world. Thanks to powerful electromagnetic fields (electric fields to be precise), it is possible to accelerate charged particles to an incredible speed. In the tunnel of the Large Hadron Collider, bunches of protons are accelerated to 6.5 TeV. This means that every proton has a kinetic energy equal to roughly 6900 times its mass energy (about 0.938 GeV), reaching a speed of 99.99999896% the speed of light. We can thus see the LHC as the fastest racetrack on the planet (to take the expression found on this webpage).
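For readers who want to check the quoted speed, here is a minimal sketch of the special-relativity arithmetic behind it. It assumes that the 6.5 TeV is the total energy of one proton and uses a proton mass energy of about 0.938 GeV; the function names are mine and purely illustrative.

```python
import math

PROTON_MASS_GEV = 0.938272  # proton rest-mass energy in GeV (approximate value)


def lorentz_factor(total_energy_gev, mass_gev=PROTON_MASS_GEV):
    """Lorentz factor gamma = E / (m c^2), with E the total energy."""
    return total_energy_gev / mass_gev


def speed_deficit(gamma):
    """Return 1 - v/c, i.e. how far below the speed of light we are.

    Subtracting beta from 1 directly would lose digits, so we use
    1 - beta = (1 - beta^2) / (1 + beta) = 1 / (gamma^2 * (1 + beta)).
    """
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    return 1.0 / (gamma**2 * (1.0 + beta))


gamma = lorentz_factor(6500.0)  # 6.5 TeV expressed in GeV
deficit = speed_deficit(gamma)
print(f"Energy in units of the proton mass energy: {gamma:.0f}")
print(f"Fraction of the speed of light: 1 - {deficit:.3e}")
```

The deficit comes out at about one part in a hundred million, which is where the string of nines in the percentage comes from.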

This is where special relativity enters the game. A very energetic collision can lead to the production of very massive particles. Mass is indeed one of the three forms of energy that exist in the microscopic world. As already mentioned several times in other blogs, the golden rule of physics states that total energy is conserved in any process. This means that one form of energy (e.g. kinetic energy) can be converted into any other form of energy (e.g. mass energy) in a process, provided that their sum is constant. This is exactly what we expect to happen at particle colliders, namely the conversion of the protons’ kinetic energy into something new.

[Credits: P. Stroppa (CEA)]

Any particle collider also relies on powerful magnetic fields. Whereas electric fields are required to accelerate particles, magnetic fields are needed to control their trajectories. We indeed do not want to send our beams to the moon. Instead, we want to collide them at very well defined points. Moreover, magnetic fields are used to collimate the beams as much as possible, so that we can reach a high intensity of particle collisions.

New phenomena are expected to be rare, and very intense beams are necessary to reach a satisfactory number of collisions. Out of this large number, there are hopes that a few collisions will be connected to those rare events. Of course, it is then crucial to have a good strategy to distinguish them from an often overwhelming background. This is however a different story.

High-energy proton-proton collisions in a nutshell

For the sake of keeping the discussion as clear as possible, I consider from now on the Large Hadron Collider and assume that two beams of highly-accelerated protons collide.

As already mentioned in this earlier blog, protons are made of three smaller entities called quarks. When protons are accelerated to very high speeds, the picture changes a little. They become complex systems made of a huge number of quarks, antiquarks (antiparticles corresponding to quarks) and gluons (the mediators of the strong force). This originates from the behaviour of the strong force itself, and I won’t enter into the details as this deserves an entire blog by itself.

In a high-energy collision, the constituents of the protons scatter, instead of the protons themselves. Therefore, at the LHC we have collisions of quarks, antiquarks and gluons. Most of the time, nothing happens when two protons cross each other. Once in a while, however, one of the constituents of the first proton interacts with one of the constituents of the second proton to produce some final state.

Many final states are possible, especially if we consider theories extending the Standard Model of particle physics. Not all final states are however equal. Some of them are associated with a copious rate, and some are rarer. In order to know which is which, we rely on the ‘master equation governing the world’ (that could be the one of either the Standard Model, or of any other particle physics model). This equation contains all the ingredients to calculate the rate associated with any given process that could happen at a particle collider.

However, it is impossible to tell the outcome of a specific collision. From the knowledge of the rates of all possible processes, we can only associate a probability with each of the possible outcomes. Large rates correspond to large probabilities, while small rates correspond to small probabilities. This naturally brings us back to the reason for having a lot of collisions happening in an experiment. To be able to observe a rare final state in a few collisions, it is important to have a very large number of collisions in total. The small associated probability and the huge total number of collisions together make it very likely that in a few cases, the rare final state considered is produced.
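To make the interplay between a tiny probability and a huge number of collisions concrete, here is a minimal sketch. The per-collision probability and the collision counts are purely hypothetical numbers chosen for illustration (they are not LHC values), and the chance of seeing at least one rare event relies on the Poisson approximation, which holds for small probabilities and many collisions.

```python
import math


def expected_events(n_collisions, probability):
    """Average number of rare events expected among n_collisions."""
    return n_collisions * probability


def prob_at_least_one(n_collisions, probability):
    """Chance to produce the rare final state at least once.

    Poisson approximation: P(at least one) = 1 - exp(-mean).
    """
    mean = expected_events(n_collisions, probability)
    return -math.expm1(-mean)  # accurate version of 1 - exp(-mean)


RARE_PROBABILITY = 1e-12  # hypothetical probability per collision

for n_collisions in (10**9, 10**12, 10**14):
    mean = expected_events(n_collisions, RARE_PROBABILITY)
    chance = prob_at_least_one(n_collisions, RARE_PROBABILITY)
    print(f"{n_collisions:.0e} collisions: {mean:g} events expected, "
          f"chance of at least one = {chance:.3f}")
```

With too few collisions the rare final state is almost never produced, whereas with enough of them it becomes practically certain to show up a handful of times.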

[Credits: CERN]

This probabilistic nature stems from the quantum nature of the microscopic world. The result of a given collision is not deterministic, but probabilistic. Therefore, we need to re-run experiments many many times (i.e. to have tons of collisions) to have a good idea of what is going on. Probabilities are naturally related to occurrence rates when the collision statistics are large. Whereas this may sound weird, about a century of data has proven it to work…

God is actually playing dice with the universe! We know today that the 20th century was a quantum century. However, it is also clear that the 21st century will be even more quantum (quantum computers are coming…)!

A master equation to rule all calculations!

What about this master equation that I mentioned above? Do we really only need a single equation to be able to calculate anything relevant for particle colliders? The answer is positive, and this equation is called the Lagrangian of the particle physics model.

The Lagrangian contains all the building blocks of the theory: the way each particle propagates, which particle interacts with which particle, which particle has a mass and which one does not, etc. Everything is in there. Everything. The Lagrangian is a unique way to fully define a theory.

So we have a model of particle physics, and therefore we have a Lagrangian. We are moreover interested in many collider processes. How can we relate the probabilities and the rates associated with these processes (which are the information relevant for a particle collider experiment) to the Lagrangian?

The answer originates from the concept of Feynman rules, as well as from the calculation of very highly-dimensional integrals. I skip the details here, as this could become quite cumbersome. At the end of the day, what matters is that regardless of the process of interest, there are well-defined quantum field theory recipes that can be followed. Having recipes means that we can teach a computer how to do this systematically (and correctly).

This has been done, and the resulting computer codes (yes we even have several of them) are used on a daily basis by physicists from all over the world. An example of such a code is MadGraph5_aMC@NLO. In this code, users input details about their favourite collider, particle physics model and process of interest through a human-friendly Python interface.

[Credits: Edward Tufte (Twitter)]

The rest is automated. This means that anyone could run this code: me, my son or even my bachelor students who do not know much about particle physics yet. All we need to know is the final state that we are interested in producing in a collider experiment, for a given particle physics model. That’s all. Really!

Obviously, this can be done only because the code has been carefully validated against tons of non-automated calculations and results coming from the hard work of many generations of physicists. This is however how progress is built. We move on from bases established by others to reach new summits.

By running this simulation code, we end up in a few minutes with predictions for the production rate of the process considered, but also with thousands of simulated collisions related to this process. The latter is a bonus coming from the numerical adaptive integration procedure that is implemented. This is a Monte Carlo integration procedure, which is in practice the only viable way to solve a highly-dimensional integral numerically. It explores all configurations of the final state and assesses which ones are the most probable and which ones are the rarest. We can then extract simulated collisions whose configurations appear with a frequency that matches their level of rarity, or in other words as they occur in nature.
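The idea of getting simulated collisions as a by-product of a Monte Carlo integration can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the one-dimensional `toy_density` function stands in for a real differential rate, and the sampling is plain uniform sampling rather than the adaptive procedure used in actual codes such as MadGraph5_aMC@NLO.

```python
import math
import random


def toy_density(x):
    """Hypothetical stand-in for a differential rate, peaked near x = 0.3."""
    return math.exp(-((x - 0.3) ** 2) / (2 * 0.05**2))


def integrate_and_generate(n_points, seed=1):
    """Estimate the integral of toy_density on [0, 1] and extract events."""
    rng = random.Random(seed)
    # Throw random configurations and weigh each one by the density.
    points = [rng.random() for _ in range(n_points)]
    weights = [toy_density(x) for x in points]
    # Monte Carlo estimate: average weight times the sampled volume (here 1).
    integral = sum(weights) / n_points
    # Unweighting: keep a configuration with probability weight / max weight,
    # so that probable configurations survive often and rare ones seldom.
    max_weight = max(weights)
    events = [x for x, w in zip(points, weights)
              if rng.random() < w / max_weight]
    return integral, events


integral, events = integrate_and_generate(100_000)
print(f"Estimated total rate (toy units): {integral:.4f}")
print(f"Simulated events kept: {len(events)}")
print(f"Average x of the events: {sum(events) / len(events):.3f}")
```

The kept events cluster around the peak of the density, exactly as often as the density says they should. In a real code the same logic runs over many dimensions, with a sampling that adapts to where the integrand is large.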

Summary: particle physics simulations on regular computers

In this blog, I started to discuss how particle collisions such as those happening at the Large Hadron Collider at CERN work. I wrote ‘started’ as there is in fact much more to write on this topic, which is left for a future blog. Thanks to very powerful electric and magnetic fields, protons can be accelerated to very high speeds. And by high speed, we really mean it. At the LHC, they reach 99.99999896% the speed of light. A world record!

When two of such accelerated protons collide, the reaction occurs at the level of their constituents (fundamental particles called quarks, antiquarks and gluons). Thanks to special relativity, the energy in the process can be converted into mass energy, and thus possibly yield the production of new particles beyond the Standard Model.

As a theorist, I find it important to be able to calculate the production rates of those new processes, and to simulate collisions as they would occur in nature. This relies on possibly complex quantum field theory calculations. However, there are ways to automate them so that they become fully transparent to anyone. We did this and have taught quantum field theory recipes once and for all to various public computer codes.

In this way, users (who could be physicists or anyone, like my teenage son) only need to provide information about the particle physics model and process of interest. The code then deals with the rest, and in particular with the quantum field theory part. In other words, anyone could investigate any process of any model and see what the impact on present and future LHC data would be by means of easy-to-run computer simulations.

What I have not said is how this simple picture gets messy because of the strong interaction. This story will however be the topic of a future blog.

For now, I hope you enjoyed reading this post. On my side, it was quite fun to write. Please don’t hesitate to ask questions or provide comments. Feel free also to give some insight on setting the stage for community-based participatory research on Hive. I am wondering how doing so would be perceived…

See you next week for the next episode!