ΔS EVER, CĦIṈΔ COᕈIES ΔṈD IMᕈROVES

𝔻𝕖𝕖𝕡𝕊𝕖𝕖𝕜 ℝ𝟙 𝕣𝕖𝕡𝕣𝕖𝕤𝕖𝕟𝕥𝕤 𝕒 𝕤𝕚𝕘𝕟𝕚𝕗𝕚𝕔𝕒𝕟𝕥 𝕒𝕕𝕧𝕒𝕟𝕔𝕖 𝕚𝕟 𝔸𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕀𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖, 𝕠𝕗𝕗𝕖𝕣𝕚𝕟𝕘 𝕒𝕗𝕗𝕠𝕣𝕕𝕒𝕓𝕝𝕖 𝕞𝕠𝕕𝕖𝕝𝕤 𝕗𝕠𝕣 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤. 𝕌𝕟𝕝𝕚𝕜𝕖 𝕆𝕡𝕖𝕟𝔸𝕀, ℝ𝟙 𝕦𝕤𝕖𝕤 𝕚𝕟𝕟𝕠𝕧𝕒𝕥𝕚𝕧𝕖 𝕥𝕖𝕔𝕙𝕟𝕚𝕢𝕦𝕖𝕤 𝕥𝕙𝕒𝕥 𝕖𝕟𝕒𝕓𝕝𝕖 𝕔𝕙𝕖𝕒𝕡𝕖𝕣 𝕒𝕟𝕕 𝕞𝕠𝕣𝕖 𝕖𝕗𝕗𝕚𝕔𝕚𝕖𝕟𝕥 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘. 𝕋𝕙𝕚𝕤 𝕕𝕖𝕞𝕠𝕔𝕣𝕒𝕥𝕚𝕤𝕖𝕤 𝕒𝕔𝕔𝕖𝕤𝕤 𝕥𝕠 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕕 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕚𝕖𝕤, 𝕒𝕝𝕝𝕠𝕨𝕚𝕟𝕘 𝕤𝕞𝕒𝕝𝕝𝕖𝕣 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕥𝕠 𝕔𝕠𝕞𝕡𝕖𝕥𝕖 𝕚𝕟 𝕥𝕙𝕖 𝔸𝕀 𝕞𝕒𝕣𝕜𝕖𝕥.

𝕋𝕙𝕖 𝕔𝕣𝕖𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖 𝕞𝕠𝕕𝕖𝕝𝕤 𝕤𝕦𝕔𝕙 𝕒𝕤 𝔾ℙ𝕋 𝕒𝕟𝕕 ℝ𝟙 𝕣𝕖𝕡𝕣𝕖𝕤𝕖𝕟𝕥𝕤 𝕒 𝕞𝕒𝕛𝕠𝕣 𝕤𝕙𝕚𝕗𝕥 𝕚𝕟 𝕥𝕙𝕖 𝕨𝕒𝕪 𝕨𝕖 𝕚𝕟𝕥𝕖𝕣𝕒𝕔𝕥 𝕨𝕚𝕥𝕙 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕪. 𝕋𝕙𝕖𝕤𝕖 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥𝕤 𝕒𝕣𝕖 𝕣𝕖𝕧𝕠𝕝𝕦𝕥𝕚𝕠𝕟𝕚𝕤𝕚𝕟𝕘 𝕚𝕟𝕗𝕠𝕣𝕞𝕒𝕥𝕚𝕠𝕟 𝕒𝕔𝕔𝕖𝕤𝕤 𝕒𝕟𝕕 𝕡𝕣𝕠𝕘𝕣𝕒𝕞𝕞𝕚𝕟𝕘. 𝕋𝕙𝕖 𝕖𝕧𝕠𝕝𝕦𝕥𝕚𝕠𝕟 𝕠𝕗 𝔾ℙ𝕋 𝕞𝕠𝕕𝕖𝕝𝕤 𝕤𝕙𝕠𝕨𝕤 𝕙𝕠𝕨 𝕆𝕡𝕖𝕟𝔸𝕀 𝕙𝕒𝕤 𝕓𝕖𝕖𝕟 𝕒 𝕡𝕚𝕠𝕟𝕖𝕖𝕣 𝕚𝕟 𝕥𝕙𝕖 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥 𝕠𝕗 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖. 𝔽𝕣𝕠𝕞 𝔾ℙ𝕋-𝟚 𝕥𝕠 𝔾ℙ𝕋-𝟛, 𝕖𝕒𝕔𝕙 𝕧𝕖𝕣𝕤𝕚𝕠𝕟 𝕙𝕒𝕤 𝕚𝕞𝕡𝕣𝕠𝕧𝕖𝕕 𝕤𝕚𝕘𝕟𝕚𝕗𝕚𝕔𝕒𝕟𝕥𝕝𝕪. 𝕄𝕖𝕥𝕒 𝕒𝕟𝕕 𝕠𝕥𝕙𝕖𝕣 𝕝𝕒𝕣𝕘𝕖 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕒𝕣𝕖 𝕥𝕣𝕪𝕚𝕟𝕘 𝕥𝕠 𝕤𝕥𝕒𝕪 𝕣𝕖𝕝𝕖𝕧𝕒𝕟𝕥 𝕚𝕟 𝕥𝕙𝕖 𝕗𝕚𝕖𝕝𝕕 𝕠𝕗 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖. 𝕋𝕙𝕖 𝕔𝕣𝕖𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 𝕞𝕠𝕕𝕖𝕝𝕤 𝕝𝕚𝕜𝕖 𝕃𝕒𝕞𝕒 𝕚𝕤 𝕒𝕟 𝕒𝕥𝕥𝕖𝕞𝕡𝕥 𝕥𝕠 𝕔𝕠𝕞𝕡𝕖𝕥𝕖 𝕨𝕚𝕥𝕙 𝕘𝕚𝕒𝕟𝕥𝕤 𝕝𝕚𝕜𝕖 𝕆𝕡𝕖𝕟𝔸𝕀. 𝕋𝕙𝕖 𝔻𝕖𝕖𝕡𝕤 ℝ𝟙 𝕞𝕠𝕕𝕖𝕝 𝕒𝕝𝕝𝕠𝕨𝕤 𝕤𝕞𝕒𝕝𝕝 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤 𝕥𝕠 𝕒𝕔𝕔𝕖𝕤𝕤 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕕 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕪. 𝕀𝕥𝕤 𝕒𝕓𝕚𝕝𝕚𝕥𝕪 𝕥𝕠 𝕕𝕚𝕤𝕥𝕚𝕝 𝕤𝕞𝕒𝕝𝕝𝕖𝕣 𝕒𝕟𝕕 𝕗𝕒𝕤𝕥𝕖𝕣 𝕞𝕠𝕕𝕖𝕝𝕤 𝕚𝕤 𝕔𝕣𝕦𝕔𝕚𝕒𝕝 𝕗𝕠𝕣 𝕚𝕟𝕟𝕠𝕧𝕒𝕥𝕚𝕠𝕟 𝕚𝕟 𝕥𝕙𝕖 𝕤𝕖𝕔𝕥𝕠𝕣.

𝕋𝕙𝕖 ℝ𝟙 𝕞𝕠𝕕𝕖𝕝 𝕙𝕒𝕤 𝕔𝕙𝕒𝕟𝕘𝕖𝕕 𝕥𝕙𝕖 𝕨𝕒𝕪 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕚𝕤 𝕥𝕣𝕒𝕚𝕟𝕖𝕕 𝕓𝕪 𝕓𝕖𝕚𝕟𝕘 𝕞𝕠𝕣𝕖 𝕖𝕗𝕗𝕚𝕔𝕚𝕖𝕟𝕥 𝕒𝕟𝕕 𝕒𝕗𝕗𝕠𝕣𝕕𝕒𝕓𝕝𝕖. 𝕋𝕙𝕚𝕤 𝕒𝕝𝕝𝕠𝕨𝕤 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤 𝕥𝕠 𝕒𝕔𝕔𝕖𝕤𝕤 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕕 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕚𝕖𝕤 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕝𝕒𝕣𝕘𝕖 𝕚𝕟𝕧𝕖𝕤𝕥𝕞𝕖𝕟𝕥𝕤. 𝕋𝕙𝕖 ℍ𝕦𝕘𝕘𝕚𝕟𝕘 𝔽𝕒𝕔𝕖 𝕥𝕖𝕒𝕞 𝕚𝕤 𝕨𝕠𝕣𝕜𝕚𝕟𝕘 𝕠𝕟 𝕣𝕖𝕡𝕝𝕚𝕔𝕒𝕥𝕚𝕟𝕘 ℝ𝟙 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘, 𝕨𝕙𝕚𝕔𝕙 𝕔𝕠𝕦𝕝𝕕 𝕧𝕒𝕝𝕚𝕕𝕒𝕥𝕖 𝕚𝕥𝕤 𝕖𝕗𝕗𝕖𝕔𝕥𝕚𝕧𝕖𝕟𝕖𝕤𝕤 𝕒𝕟𝕕 𝕒𝕗𝕗𝕠𝕣𝕕𝕒𝕓𝕚𝕝𝕚𝕥𝕪. 𝕋𝕙𝕚𝕤 𝕚𝕤 𝕒𝕟 𝕚𝕞𝕡𝕠𝕣𝕥𝕒𝕟𝕥 𝕤𝕥𝕖𝕡 𝕗𝕠𝕣 𝕥𝕙𝕖 𝔸𝕀 𝕔𝕠𝕞𝕞𝕦𝕟𝕚𝕥𝕪. ℝ𝟙 𝕦𝕤𝕖𝕤 𝕚𝕟𝕟𝕠𝕧𝕒𝕥𝕚𝕧𝕖 𝕥𝕖𝕔𝕙𝕟𝕚𝕢𝕦𝕖𝕤 𝕤𝕦𝕔𝕙 𝕒𝕤 𝕓𝕚𝕥 𝕤𝕙𝕚𝕗𝕥𝕚𝕟𝕘 𝕥𝕠 𝕠𝕡𝕥𝕚𝕞𝕚𝕤𝕖 𝕞𝕖𝕞𝕠𝕣𝕪 𝕦𝕤𝕒𝕘𝕖 𝕒𝕟𝕕 𝕡𝕣𝕠𝕔𝕖𝕤𝕤𝕚𝕟𝕘 𝕡𝕠𝕨𝕖𝕣. 𝕋𝕙𝕚𝕤 𝕒𝕝𝕝𝕠𝕨𝕤 𝕗𝕠𝕣 𝕗𝕒𝕤𝕥𝕖𝕣 𝕒𝕟𝕕 𝕞𝕠𝕣𝕖 𝕖𝕗𝕗𝕚𝕔𝕚𝕖𝕟𝕥 𝕡𝕖𝕣𝕗𝕠𝕣𝕞𝕒𝕟𝕔𝕖.

𝕋𝕙𝕖 𝕚𝕞𝕡𝕝𝕖𝕞𝕖𝕟𝕥𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 𝕒 𝕣𝕖𝕨𝕒𝕣𝕕 𝕗𝕦𝕟𝕔𝕥𝕚𝕠𝕟 𝕚𝕟 𝕞𝕠𝕕𝕖𝕝 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘 𝕚𝕞𝕡𝕣𝕠𝕧𝕖𝕤 𝕥𝕙𝕖 𝕢𝕦𝕒𝕝𝕚𝕥𝕪 𝕠𝕗 𝕥𝕙𝕖 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖𝕕 𝕣𝕖𝕤𝕡𝕠𝕟𝕤𝕖𝕤. 𝕋𝕙𝕚𝕤 𝕚𝕤 𝕜𝕖𝕪 𝕥𝕠 𝕞𝕒𝕜𝕚𝕟𝕘 𝕚𝕟𝕥𝕖𝕣𝕒𝕔𝕥𝕚𝕠𝕟 𝕨𝕚𝕥𝕙 𝕞𝕠𝕕𝕖𝕝𝕤 𝕞𝕠𝕣𝕖 𝕟𝕒𝕥𝕦𝕣𝕒𝕝 𝕗𝕠𝕣 𝕙𝕦𝕞𝕒𝕟𝕤. 𝕋𝕙𝕖 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕞𝕠𝕕𝕖𝕝 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕙𝕒𝕤 𝕖𝕧𝕠𝕝𝕧𝕖𝕕 𝕤𝕚𝕘𝕟𝕚𝕗𝕚𝕔𝕒𝕟𝕥𝕝𝕪, 𝕦𝕤𝕚𝕟𝕘 𝕣𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕥𝕙𝕖 𝕟𝕖𝕖𝕕 𝕗𝕠𝕣 𝕙𝕦𝕞𝕒𝕟 𝕚𝕟𝕥𝕖𝕣𝕧𝕖𝕟𝕥𝕚𝕠𝕟. 𝕋𝕙𝕚𝕤 𝕙𝕒𝕤 𝕖𝕟𝕒𝕓𝕝𝕖𝕕 𝕥𝕙𝕖 𝕔𝕣𝕖𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 𝕞𝕠𝕣𝕖 𝕖𝕗𝕗𝕚𝕔𝕚𝕖𝕟𝕥 𝕒𝕟𝕕 𝕒𝕔𝕔𝕦𝕣𝕒𝕥𝕖 𝕞𝕠𝕕𝕖𝕝𝕤.

ℙ𝕣𝕖𝕗𝕖𝕣𝕖𝕟𝕔𝕖 𝕥𝕦𝕟𝕚𝕟𝕘 𝕚𝕤 𝕔𝕣𝕦𝕔𝕚𝕒𝕝 𝕗𝕠𝕣 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖 𝕞𝕠𝕕𝕖𝕝𝕤 𝕥𝕠 𝕗𝕠𝕝𝕝𝕠𝕨 𝕘𝕦𝕚𝕕𝕖𝕝𝕚𝕟𝕖𝕤 𝕒𝕝𝕚𝕘𝕟𝕖𝕕 𝕨𝕚𝕥𝕙 𝕙𝕦𝕞𝕒𝕟 𝕖𝕩𝕡𝕖𝕔𝕥𝕒𝕥𝕚𝕠𝕟𝕤. 𝕋𝕙𝕚𝕤 𝕖𝕟𝕤𝕦𝕣𝕖𝕤 𝕥𝕙𝕒𝕥 𝕣𝕖𝕤𝕡𝕠𝕟𝕤𝕖𝕤 𝕒𝕣𝕖 𝕒𝕡𝕡𝕣𝕠𝕡𝕣𝕚𝕒𝕥𝕖 𝕒𝕟𝕕 𝕤𝕒𝕗𝕖. 𝕋𝕙𝕖 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕖𝕩𝕒𝕞𝕡𝕝𝕖𝕤 𝕚𝕤 𝕕𝕠𝕟𝕖 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕙𝕦𝕞𝕒𝕟𝕤, 𝕦𝕤𝕚𝕟𝕘 𝕒 𝕡𝕣𝕖𝕧𝕚𝕠𝕦𝕤 𝕞𝕠𝕕𝕖𝕝 𝕥𝕠 𝕧𝕖𝕣𝕚𝕗𝕪 𝕥𝕙𝕖 𝕒𝕔𝕔𝕦𝕣𝕒𝕔𝕪 𝕠𝕗 𝕥𝕙𝕖 𝕒𝕟𝕤𝕨𝕖𝕣𝕤. 𝕋𝕙𝕚𝕤 𝕚𝕞𝕡𝕣𝕠𝕧𝕖𝕤 𝕥𝕙𝕖 𝕖𝕗𝕗𝕖𝕔𝕥𝕚𝕧𝕖𝕟𝕖𝕤𝕤 𝕠𝕗 𝕞𝕠𝕕𝕖𝕝 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘. 𝕋𝕙𝕖 𝕡𝕣𝕖𝕤𝕖𝕟𝕥𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝𝕤' 𝕥𝕙𝕠𝕦𝕘𝕙𝕥 𝕡𝕣𝕠𝕔𝕖𝕤𝕤, 𝕒𝕤 𝕚𝕟 𝔻𝕖𝕖𝕡𝕤 ℝ𝟙, 𝕡𝕣𝕠𝕧𝕚𝕕𝕖𝕤 𝕥𝕣𝕒𝕟𝕤𝕡𝕒𝕣𝕖𝕟𝕔𝕪 𝕒𝕟𝕕 𝕔𝕠𝕟𝕗𝕚𝕕𝕖𝕟𝕔𝕖 𝕥𝕠 𝕥𝕙𝕖 𝕦𝕤𝕖𝕣. 𝕋𝕙𝕚𝕤 𝕒𝕡𝕡𝕣𝕠𝕒𝕔𝕙 𝕙𝕒𝕤 𝕣𝕖𝕧𝕠𝕝𝕦𝕥𝕚𝕠𝕟𝕚𝕤𝕖𝕕 𝕥𝕙𝕖 𝕘𝕣𝕒𝕡𝕙𝕚𝕔𝕒𝕝 𝕚𝕟𝕥𝕖𝕣𝕗𝕒𝕔𝕖 𝕚𝕟 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕪.

𝕋𝕙𝕖 𝕘𝕣𝕠𝕨𝕥𝕙 𝕠𝕗 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕣𝕖𝕝𝕚𝕖𝕤 𝕠𝕟 𝕚𝕟𝕧𝕖𝕤𝕥𝕞𝕖𝕟𝕥 𝕚𝕟 𝕔𝕙𝕚𝕡𝕤 𝕒𝕟𝕕 𝕡𝕣𝕠𝕔𝕖𝕤𝕤𝕚𝕟𝕘, 𝕨𝕙𝕚𝕔𝕙 𝕙𝕒𝕤 𝕝𝕖𝕕 𝕥𝕠 𝕒 𝕔𝕙𝕒𝕟𝕘𝕖 𝕚𝕟 𝕥𝕙𝕖 𝕨𝕒𝕪 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕚𝕞𝕡𝕝𝕖𝕞𝕖𝕟𝕥 𝕤𝕠𝕝𝕦𝕥𝕚𝕠𝕟𝕤. 𝕋𝕙𝕚𝕤 𝕙𝕒𝕤 𝕣𝕖𝕕𝕦𝕔𝕖𝕕 𝕔𝕠𝕤𝕥𝕤 𝕒𝕟𝕕 𝕥𝕣𝕒𝕟𝕤𝕗𝕠𝕣𝕞𝕖𝕕 𝕥𝕙𝕖 𝕔𝕦𝕝𝕥𝕦𝕣𝕖 𝕠𝕗 𝕤𝕠𝕗𝕥𝕨𝕒𝕣𝕖 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥 𝕚𝕟 𝕥𝕙𝕖 𝕚𝕟𝕕𝕦𝕤𝕥𝕣𝕪. 𝕋𝕙𝕖 ℝ𝟙 𝕞𝕠𝕕𝕖𝕝 𝕙𝕒𝕤 𝕣𝕖𝕧𝕠𝕝𝕦𝕥𝕚𝕠𝕟𝕚𝕤𝕖𝕕 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥, 𝕕𝕖𝕞𝕠𝕟𝕤𝕥𝕣𝕒𝕥𝕚𝕟𝕘 𝕥𝕙𝕒𝕥 𝕚𝕥 𝕚𝕤 𝕡𝕠𝕤𝕤𝕚𝕓𝕝𝕖 𝕥𝕠 𝕒𝕔𝕙𝕚𝕖𝕧𝕖 𝕤𝕚𝕘𝕟𝕚𝕗𝕚𝕔𝕒𝕟𝕥 𝕣𝕖𝕤𝕦𝕝𝕥𝕤 𝕨𝕚𝕥𝕙 𝕗𝕖𝕨𝕖𝕣 𝕣𝕖𝕤𝕠𝕦𝕣𝕔𝕖𝕤. 𝕋𝕙𝕚𝕤 𝕓𝕣𝕖𝕒𝕜𝕥𝕙𝕣𝕠𝕦𝕘𝕙 𝕚𝕤 𝕗𝕠𝕣𝕔𝕚𝕟𝕘 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕥𝕠 𝕣𝕖𝕥𝕙𝕚𝕟𝕜 𝕥𝕙𝕖𝕚𝕣 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕪 𝕒𝕡𝕡𝕣𝕠𝕒𝕔𝕙𝕖𝕤. ℂ𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕒𝕣𝕖 𝕚𝕟𝕧𝕖𝕤𝕥𝕚𝕟𝕘 𝕚𝕟 𝕞𝕠𝕣𝕖 𝕖𝕗𝕗𝕚𝕔𝕚𝕖𝕟𝕥 𝕔𝕙𝕚𝕡𝕤, 𝕞𝕒𝕜𝕚𝕟𝕘 𝕚𝕥 𝕡𝕠𝕤𝕤𝕚𝕓𝕝𝕖 𝕥𝕠 𝕠𝕗𝕗𝕖𝕣 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕤𝕖𝕣𝕧𝕚𝕔𝕖𝕤 𝕒𝕥 𝕒 𝕝𝕠𝕨𝕖𝕣 𝕔𝕠𝕤𝕥. 𝕋𝕙𝕚𝕤 𝕨𝕚𝕝𝕝 𝕓𝕖𝕟𝕖𝕗𝕚𝕥 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕝𝕠𝕠𝕜𝕚𝕟𝕘 𝕥𝕠 𝕚𝕟𝕥𝕖𝕘𝕣𝕒𝕥𝕖 𝔸𝕀 𝕚𝕟𝕥𝕠 𝕥𝕙𝕖𝕚𝕣 𝕡𝕣𝕠𝕕𝕦𝕔𝕥𝕤.

ℕ𝕖𝕨 ℂℙ𝕌 𝕒𝕟𝕕 𝔾ℙ𝕌 𝕒𝕣𝕔𝕙𝕚𝕥𝕖𝕔𝕥𝕦𝕣𝕖𝕤 𝕒𝕣𝕖 𝕣𝕖𝕧𝕠𝕝𝕦𝕥𝕚𝕠𝕟𝕚𝕤𝕚𝕟𝕘 𝕥𝕙𝕖 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥 𝕠𝕗 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖, 𝕓𝕖𝕟𝕖𝕗𝕚𝕥𝕚𝕟𝕘 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕤𝕦𝕔𝕙 𝕒𝕤 𝔸𝕡𝕡𝕝𝕖, 𝔸𝕄𝔻 𝕒𝕟𝕕 𝕄𝕖𝕥𝕒. 𝕋𝕙𝕚𝕤 𝕔𝕣𝕖𝕒𝕥𝕖𝕤 𝕒𝕔𝕔𝕖𝕤𝕤𝕚𝕓𝕝𝕖 𝕠𝕡𝕡𝕠𝕣𝕥𝕦𝕟𝕚𝕥𝕚𝕖𝕤 𝕗𝕠𝕣 𝕞𝕠𝕣𝕖 𝕡𝕖𝕠𝕡𝕝𝕖 𝕚𝕟 𝕥𝕙𝕖 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕪 𝕗𝕚𝕖𝕝𝕕. 𝔸𝕡𝕡𝕝𝕖'𝕤 𝕒𝕣𝕔𝕙𝕚𝕥𝕖𝕔𝕥𝕦𝕣𝕖, 𝕨𝕙𝕚𝕔𝕙 𝕚𝕟𝕔𝕝𝕦𝕕𝕖𝕤 𝕞𝕖𝕞𝕠𝕣𝕪 𝕤𝕙𝕒𝕣𝕚𝕟𝕘 𝕓𝕖𝕥𝕨𝕖𝕖𝕟 ℂℙ𝕌𝕤 𝕒𝕟𝕕 𝔾ℙ𝕌𝕤, 𝕚𝕞𝕡𝕣𝕠𝕧𝕖𝕤 𝕡𝕖𝕣𝕗𝕠𝕣𝕞𝕒𝕟𝕔𝕖 𝕚𝕟 𝕄𝕒𝕔𝕙𝕚𝕟𝕖 𝕃𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕥𝕒𝕤𝕜𝕤. 𝕋𝕙𝕚𝕤 𝕡𝕠𝕤𝕚𝕥𝕚𝕠𝕟𝕤 𝔸𝕡𝕡𝕝𝕖 𝕒𝕤 𝕒 𝕝𝕖𝕒𝕕𝕚𝕟𝕘 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕪 𝕚𝕟𝕟𝕠𝕧𝕒𝕥𝕠𝕣.

ℂ𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕝𝕚𝕜𝕖 𝔸𝕄𝔻 𝕒𝕣𝕖 𝕓𝕣𝕖𝕒𝕜𝕚𝕟𝕘 ℕ𝕧𝕚𝕕𝕚𝕒'𝕤 𝕞𝕠𝕟𝕠𝕡𝕠𝕝𝕪, 𝕖𝕟𝕒𝕓𝕝𝕚𝕟𝕘 𝕓𝕣𝕠𝕒𝕕𝕖𝕣 𝕒𝕟𝕕 𝕞𝕠𝕣𝕖 𝕔𝕠𝕞𝕡𝕖𝕥𝕚𝕥𝕚𝕧𝕖 𝕒𝕔𝕔𝕖𝕤𝕤 𝕥𝕠 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖. 𝕋𝕙𝕚𝕤 𝕕𝕖𝕞𝕠𝕔𝕣𝕒𝕥𝕚𝕤𝕖𝕤 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕚𝕔𝕒𝕝 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥 𝕒𝕞𝕠𝕟𝕘 𝕕𝕚𝕗𝕗𝕖𝕣𝕖𝕟𝕥 𝕡𝕝𝕒𝕪𝕖𝕣𝕤 𝕚𝕟 𝕥𝕙𝕖 𝕞𝕒𝕣𝕜𝕖𝕥. 𝕋𝕙𝕖 𝕖𝕞𝕖𝕣𝕘𝕖𝕟𝕔𝕖 𝕠𝕗 𝕠𝕡𝕖𝕟 𝔸𝕀 𝕞𝕠𝕕𝕖𝕝𝕤 𝕓𝕖𝕟𝕖𝕗𝕚𝕥𝕤 𝕄𝕖𝕥𝕒 𝕒𝕟𝕕 𝕖𝕟𝕒𝕓𝕝𝕖𝕤 𝕨𝕚𝕕𝕖𝕣 𝕕𝕚𝕤𝕥𝕣𝕚𝕓𝕦𝕥𝕚𝕠𝕟 𝕠𝕗 𝕔𝕠𝕟𝕥𝕖𝕟𝕥. 𝕋𝕙𝕚𝕤 𝕙𝕖𝕝𝕡𝕤 𝕥𝕙𝕖𝕞 𝕔𝕠𝕟𝕤𝕠𝕝𝕚𝕕𝕒𝕥𝕖 𝕥𝕙𝕖𝕚𝕣 𝕡𝕠𝕤𝕚𝕥𝕚𝕠𝕟 𝕒𝕤 𝕒 𝕝𝕖𝕒𝕕𝕖𝕣 𝕚𝕟 𝔸𝕀 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥 𝕚𝕟 𝕥𝕙𝕖 𝕎𝕖𝕤𝕥.

𝔹𝕦𝕥 𝕥𝕠 𝕓𝕖 𝕗𝕒𝕚𝕣, 𝕨𝕙𝕖𝕟 ℂ𝕙𝕒𝕥𝔾ℙ𝕋 𝕔𝕒𝕞𝕖 𝕠𝕦𝕥 𝕀 𝕨𝕒𝕤 𝕕𝕖𝕡𝕣𝕖𝕤𝕤𝕖𝕕. 𝕋𝕙𝕖 𝕣𝕖𝕒𝕝𝕚𝕥𝕪 𝕚𝕤, 𝕥𝕙𝕖 𝕕𝕒𝕪 ℂ𝕙𝕒𝕥𝔾ℙ𝕋 𝕔𝕒𝕞𝕖 𝕠𝕦𝕥 𝕀 𝕤𝕒𝕨 𝕚𝕟 𝕥𝕙𝕖 𝕗𝕦𝕥𝕦𝕣𝕖 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖 𝕗𝕦𝕥𝕦𝕣𝕖 𝕨𝕒𝕤 𝕟𝕠𝕥 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕓𝕖 𝕤𝕠 𝕗𝕦𝕟 𝕗𝕠𝕣 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤, 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕆𝕡𝕖𝕟𝔸𝕀 𝕟𝕖𝕖𝕕𝕖𝕕 𝕓𝕚𝕝𝕝𝕚𝕠𝕟𝕤 𝕠𝕗 𝕕𝕠𝕝𝕝𝕒𝕣𝕤 𝕥𝕠 𝕘𝕖𝕥 𝕥𝕠 𝕥𝕙𝕖 𝕡𝕠𝕚𝕟𝕥 𝕚𝕥 𝕘𝕠𝕥 𝕥𝕠, 𝕤𝕦𝕡𝕡𝕠𝕣𝕥 𝕗𝕣𝕠𝕞 𝕄𝕚𝕔𝕣𝕠𝕤𝕠𝕗𝕥, 𝕤𝕦𝕡𝕡𝕠𝕣𝕥 𝕗𝕣𝕠𝕞 𝕆𝕣𝕒𝕔𝕝𝕖, 𝕞𝕠𝕟𝕖𝕪 𝕗𝕣𝕠𝕞 𝕒 𝕝𝕠𝕥 𝕠𝕗 𝕡𝕝𝕒𝕪𝕖𝕣𝕤 𝕥𝕠 𝕘𝕖𝕥 𝕥𝕙𝕖𝕣𝕖 𝕗𝕚𝕣𝕤𝕥. 𝕋𝕙𝕖 𝕣𝕖𝕗𝕚𝕟𝕖𝕕 𝕞𝕠𝕕𝕖𝕝 𝕨𝕙𝕖𝕣𝕖 𝕥𝕙𝕖𝕪 𝕙𝕚𝕣𝕖𝕕 𝕥𝕙𝕠𝕦𝕤𝕒𝕟𝕕𝕤 𝕒𝕟𝕕 𝕥𝕙𝕠𝕦𝕤𝕒𝕟𝕕𝕤 𝕒𝕟𝕕 𝕥𝕙𝕠𝕦𝕤𝕒𝕟𝕕𝕤 𝕠𝕗 𝕡𝕖𝕠𝕡𝕝𝕖 𝕨𝕙𝕠 𝕥𝕣𝕒𝕚𝕟𝕖𝕕 𝕒𝕟𝕕 𝕣𝕖𝕗𝕚𝕟𝕖𝕕 𝕥𝕙𝕖 𝕓𝕖𝕙𝕒𝕧𝕚𝕠𝕦𝕣 𝕠𝕗 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝, 𝕒𝕟𝕕 𝕥𝕙𝕖𝕟 𝕠𝕗 𝕔𝕠𝕦𝕣𝕤𝕖 𝕥𝕙𝕖 𝕓𝕦𝕚𝕝𝕕𝕚𝕟𝕘 𝕠𝕗 𝕥𝕙𝕖 𝕕𝕒𝕥𝕒, 𝕥𝕙𝕖 𝕚𝕟𝕗𝕖𝕣𝕖𝕟𝕔𝕖 𝕤𝕖𝕣𝕧𝕖𝕣𝕤, 𝕥𝕙𝕖 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘. 𝕋𝕙𝕒𝕥 𝕞𝕒𝕕𝕖 𝕞𝕖 𝕥𝕙𝕚𝕟𝕜 𝕥𝕙𝕒𝕥 𝕥𝕙𝕚𝕤 𝕨𝕒𝕤 𝕒 𝕘𝕒𝕞𝕖 𝕥𝕙𝕒𝕥 𝕠𝕟𝕝𝕪 𝕥𝕙𝕖 𝕞𝕦𝕝𝕥𝕚-𝕓𝕚𝕝𝕝𝕚𝕠𝕟 𝕕𝕠𝕝𝕝𝕒𝕣 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕨𝕖𝕣𝕖 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕨𝕚𝕟, 𝕒𝕟𝕕 𝕥𝕙𝕒𝕥 𝕨𝕖, 𝕥𝕙𝕖 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤, 𝕨𝕖𝕣𝕖 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕓𝕖 𝕔𝕠𝕟𝕕𝕖𝕞𝕟𝕖𝕕 𝕥𝕠 𝕤𝕚𝕞𝕡𝕝𝕪 𝕔𝕣𝕖𝕒𝕥𝕖 𝕡𝕣𝕠𝕕𝕦𝕔𝕥𝕤 𝕥𝕙𝕒𝕥 𝕦𝕤𝕖𝕕 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕓𝕦𝕥 𝕒𝕝𝕨𝕒𝕪𝕤 𝕠𝕟𝕖 𝕤𝕥𝕖𝕡 𝕓𝕖𝕙𝕚𝕟𝕕.

𝔸𝕟𝕕 𝕥𝕙𝕒𝕥 𝕚𝕤 𝕒𝕝𝕤𝕠 𝕥𝕣𝕦𝕖 𝕗𝕠𝕣 𝕠𝕥𝕙𝕖𝕣 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤. 𝕄𝕖𝕥𝕒, 𝕗𝕠𝕣 𝕖𝕩𝕒𝕞𝕡𝕝𝕖, 𝕥𝕙𝕖 𝕔𝕣𝕖𝕒𝕥𝕠𝕣𝕤 𝕠𝕗 𝔽𝕒𝕔𝕖𝕓𝕠𝕠𝕜, 𝕦𝕟𝕕𝕖𝕣𝕤𝕥𝕠𝕠𝕕 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖𝕪 𝕨𝕖𝕣𝕖 𝕒𝕝𝕤𝕠 𝕒 𝕝𝕚𝕥𝕥𝕝𝕖 𝕓𝕖𝕙𝕚𝕟𝕕 𝕨𝕚𝕥𝕙 𝕥𝕙𝕖𝕚𝕣 𝕠𝕨𝕟 𝕞𝕠𝕕𝕖𝕝, 𝕃𝕝𝕒𝕞𝕒. 𝕋𝕙𝕖𝕪 𝕕𝕖𝕞𝕠𝕝𝕚𝕤𝕙𝕖𝕕 𝕒 𝕕𝕒𝕥𝕒𝕔𝕖𝕟𝕥𝕖𝕣 𝕚𝕟 𝟚𝟘𝟚𝟛 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖𝕪 𝕙𝕒𝕕 𝕒𝕝𝕣𝕖𝕒𝕕𝕪 𝕙𝕒𝕝𝕗-𝕔𝕠𝕞𝕡𝕝𝕖𝕥𝕖𝕕 𝕒𝕟𝕕 𝕣𝕖𝕤𝕥𝕒𝕣𝕥𝕖𝕕 𝕚𝕥 𝕗𝕣𝕠𝕞 𝕤𝕔𝕣𝕒𝕥𝕔𝕙 𝕥𝕠 𝕣𝕖𝕓𝕦𝕚𝕝𝕕 𝕚𝕥 𝕨𝕚𝕥𝕙 𝕥𝕙𝕖 𝕒𝕣𝕔𝕙𝕚𝕥𝕖𝕔𝕥𝕦𝕣𝕖 𝕟𝕖𝕖𝕕𝕖𝕕 𝕗𝕠𝕣 𝕥𝕙𝕖 𝕗𝕦𝕥𝕦𝕣𝕖 𝕠𝕗 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖. 𝕌𝕟𝕥𝕚𝕝 𝕠𝕟𝕖 𝕕𝕒𝕪 𝕒 𝕄𝕖𝕥𝕒 𝕖𝕞𝕡𝕝𝕠𝕪𝕖𝕖 𝕝𝕖𝕒𝕜𝕤 𝕥𝕙𝕖 𝕃𝕝𝕒𝕞𝕒 𝕞𝕠𝕕𝕖𝕝 𝕒𝕟𝕕 𝕗𝕠𝕣𝕔𝕖𝕤 ℤ 𝕦𝕔𝕜𝕖𝕣𝕓𝕖𝕣𝕘 𝕥𝕠 𝕔𝕣𝕖𝕒𝕥𝕖 𝕒 𝕗𝕣𝕖𝕖 𝕞𝕠𝕕𝕖𝕝 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘 𝕤𝕥𝕒𝕟𝕕𝕒𝕣𝕕 𝕒𝕟𝕕 𝕣𝕖𝕝𝕖𝕒𝕤𝕖 𝕥𝕙𝕖 𝕡𝕣𝕖-𝕥𝕣𝕒𝕚𝕟𝕖𝕕 𝕨𝕖𝕚𝕘𝕙𝕥𝕤 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕣𝕖𝕝𝕖𝕒𝕤𝕚𝕟𝕘 𝕥𝕙𝕖 𝕕𝕒𝕥𝕒 𝕠𝕗 𝕒𝕟 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕞𝕠𝕕𝕖𝕝 𝕒𝕥 𝕥𝕙𝕖 𝕝𝕖𝕧𝕖𝕝 𝕠𝕗 𝔾ℙ𝕋-𝟚 𝕒𝕟𝕕 𝔾ℙ𝕋-𝟛. 𝕋𝕙𝕚𝕤 𝕚𝕤 𝕜𝕟𝕠𝕨𝕟 𝕒𝕤 𝕃𝕝𝕒𝕞𝕒, 𝕒𝕟𝕕 𝕃𝕝𝕒𝕞𝕒 𝕔𝕙𝕒𝕟𝕘𝕖𝕕 𝕖𝕧𝕖𝕣𝕪𝕥𝕙𝕚𝕟𝕘. 𝕃𝕝𝕒𝕞𝕒 𝕨𝕒𝕤 𝕒 𝕤𝕞𝕒𝕝𝕝 𝕙𝕠𝕡𝕖 𝕗𝕠𝕣 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤, 𝕒𝕟𝕕 𝕪𝕠𝕦 𝕔𝕒𝕟 𝕣𝕦𝕟 𝕃𝕝𝕒𝕞𝕒 𝕠𝕟-𝕡𝕣𝕖𝕞𝕚𝕤𝕖𝕤 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕒 𝕕𝕠𝕦𝕓𝕥.

𝔹𝕦𝕥 𝕥𝕙𝕖 𝕚𝕞𝕡𝕠𝕣𝕥𝕒𝕟𝕥 𝕥𝕙𝕚𝕟𝕘 𝕒𝕓𝕠𝕦𝕥 𝕃𝕝𝕒𝕞𝕒 𝕚𝕤 𝕟𝕠𝕥 𝕛𝕦𝕤𝕥 𝕥𝕙𝕒𝕥, 𝕚𝕥 𝕤𝕙𝕠𝕨𝕖𝕕 𝕥𝕙𝕖 𝕨𝕠𝕣𝕝𝕕 𝕙𝕠𝕨 𝕥𝕠 𝕕𝕠 𝕥𝕙𝕚𝕤 𝕒𝕟𝕕 𝕟𝕠𝕨 𝕥𝕙𝕚𝕤 𝕥𝕖𝕒𝕞 𝕠𝕗 ℂ𝕙𝕚𝕟𝕖𝕤𝕖 𝕔𝕣𝕖𝕒𝕥𝕖𝕕 𝔻𝕖𝕖𝕡𝕊𝕖𝕖𝕜 ℝ𝟙, 𝕒 𝕞𝕠𝕕𝕖𝕝 𝕥𝕙𝕒𝕥 𝕀 𝕒𝕝𝕣𝕖𝕒𝕕𝕪 𝕙𝕒𝕧𝕖 𝕣𝕦𝕟𝕟𝕚𝕟𝕘 𝕠𝕟 𝕞𝕪 𝕝𝕒𝕡𝕥𝕠𝕡. ℕ𝕠𝕥 𝕠𝕟𝕝𝕪 𝕚𝕤 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕒𝕞𝕒𝕫𝕚𝕟𝕘, 𝕓𝕦𝕥 𝕥𝕙𝕖𝕪 𝕕𝕚𝕤𝕥𝕚𝕝𝕝𝕖𝕕 𝕚𝕥. 𝔻𝕚𝕤𝕥𝕚𝕝𝕝𝕚𝕟𝕘 𝕚𝕤 𝕒 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕨𝕙𝕖𝕣𝕖 𝕥𝕙𝕖 𝕓𝕚𝕘 𝕞𝕠𝕕𝕖𝕝 𝕒𝕔𝕥𝕤 𝕒𝕤 𝕒 𝕥𝕖𝕒𝕔𝕙𝕖𝕣 𝕒𝕟𝕕 𝕥𝕙𝕖 𝕝𝕚𝕥𝕥𝕝𝕖 𝕞𝕠𝕕𝕖𝕝𝕤 𝕒𝕔𝕥 𝕒𝕤 𝕤𝕥𝕦𝕕𝕖𝕟𝕥𝕤. 𝔸𝕟𝕕 𝕟𝕠𝕥 𝕥𝕠 𝕞𝕒𝕜𝕖 𝕚𝕥 𝕥𝕠𝕠 𝕔𝕠𝕞𝕡𝕝𝕚𝕔𝕒𝕥𝕖𝕕, 𝕚𝕥 𝕒𝕝𝕝𝕠𝕨𝕤 𝕪𝕠𝕦 𝕥𝕠 𝕔𝕣𝕖𝕒𝕥𝕖 𝕤𝕞𝕒𝕝𝕝𝕖𝕣 𝕞𝕠𝕕𝕖𝕝𝕤 𝕥𝕙𝕒𝕥 𝕒𝕣𝕖 𝕒𝕝𝕞𝕠𝕤𝕥 𝕒𝕤 𝕘𝕠𝕠𝕕 𝕒𝕤 𝕥𝕙𝕖 𝕓𝕚𝕘 𝕞𝕠𝕕𝕖𝕝 𝕓𝕦𝕥 𝕒𝕣𝕖 𝕞𝕦𝕔𝕙 𝕗𝕒𝕤𝕥𝕖𝕣. 𝕀'𝕧𝕖 𝕓𝕖𝕖𝕟 𝕥𝕖𝕤𝕥𝕚𝕟𝕘 ℝ𝟙 𝕚𝕟 𝕥𝕙𝕖 𝕗𝕒𝕤𝕥 𝕧𝕖𝕣𝕤𝕚𝕠𝕟 𝕠𝕟 𝕞𝕪 𝕠𝕨𝕟 𝕤𝕖𝕣𝕧𝕖𝕣𝕤, 𝕥𝕙𝕖 𝕔𝕠𝕞𝕡𝕒𝕔𝕥 𝕧𝕖𝕣𝕤𝕚𝕠𝕟, 𝕒𝕟𝕕 𝕚𝕥'𝕤 𝕚𝕞𝕡𝕣𝕖𝕤𝕤𝕚𝕧𝕖. 𝕀𝕥'𝕤 𝕓𝕒𝕤𝕚𝕔𝕒𝕝𝕝𝕪 𝔾ℙ𝕋-𝟜 𝕝𝕖𝕧𝕖𝕝 𝕠𝕣 𝕀 𝕨𝕠𝕦𝕝𝕕 𝕤𝕒𝕪 𝕓𝕖𝕥𝕥𝕖𝕣, 𝕚𝕥'𝕤 𝔾ℙ𝕋-𝟜 𝕞𝕚𝕟𝕚 𝕝𝕖𝕧𝕖𝕝, 𝕤𝕠 𝕚𝕥 𝕞𝕠𝕧𝕖𝕤 𝕤𝕦𝕡𝕖𝕣𝕗𝕒𝕤𝕥.

ℝ𝟙 𝕚𝕤 𝕤𝕠 𝕘𝕠𝕠𝕕 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖 𝕎𝕖𝕓𝔸𝕤𝕤𝕖𝕞𝕓𝕝𝕪 𝕥𝕖𝕒𝕞, 𝕥𝕙𝕖 𝕥𝕖𝕒𝕞 𝕥𝕙𝕒𝕥 𝕞𝕒𝕜𝕖𝕤 𝕎𝕖𝕓𝔸𝕤𝕤𝕖𝕞𝕓𝕝𝕪, 𝕥𝕙𝕖 𝕞𝕒𝕔𝕙𝕚𝕟𝕖 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖, 𝕣𝕦𝕟 𝕚𝕟 𝕥𝕙𝕖 𝕓𝕣𝕠𝕨𝕤𝕖𝕣, 𝕥𝕠𝕠𝕜 𝕒 𝕞𝕠𝕕𝕦𝕝𝕖 𝕞𝕒𝕕𝕖 𝕚𝕟 ℂ++ 𝕒𝕟𝕕 𝕣𝕒𝕟 𝕚𝕥 𝕥𝕙𝕣𝕠𝕦𝕘𝕙 ℝ𝟙, 𝕒𝕟𝕕 𝕞𝕒𝕟𝕒𝕘𝕖𝕕 𝕥𝕠 𝕣𝕖𝕨𝕣𝕚𝕥𝕖 𝕚𝕥 𝕥𝕠 𝕦𝕤𝕖 𝕥𝕙𝕖 𝕔𝕙𝕚𝕡𝕤' 𝕟𝕒𝕥𝕚𝕧𝕖 ℂℙ𝕌 𝕚𝕟𝕤𝕥𝕣𝕦𝕔𝕥𝕚𝕠𝕟𝕤. 𝕋𝕙𝕖 𝕚𝕟𝕤𝕥𝕣𝕦𝕔𝕥𝕚𝕠𝕟𝕤 𝕒𝕣𝕖 𝕔𝕒𝕝𝕝𝕖𝕕 𝕊𝕀𝕄𝔻. 𝟡𝟡% 𝕠𝕗 𝕥𝕙𝕖 𝕔𝕠𝕕𝕖 𝕨𝕒𝕤 𝕨𝕣𝕚𝕥𝕥𝕖𝕟 𝕓𝕪 ℝ𝟙, 𝕒𝕟𝕕 𝕥𝕙𝕖 𝕣𝕖𝕡𝕠 𝕚𝕤 𝕠𝕡𝕖𝕟 𝕒𝕟𝕕 𝕡𝕦𝕓𝕝𝕚𝕔𝕝𝕪 𝕒𝕧𝕒𝕚𝕝𝕒𝕓𝕝𝕖. 𝕃𝕠𝕠𝕜 𝕚𝕥 𝕦𝕡, 𝕘𝕠𝕠𝕘𝕝𝕖 𝕚𝕥, 𝕒𝕟𝕕 𝕪𝕠𝕦'𝕝𝕝 𝕗𝕚𝕟𝕕 𝕚𝕥. 𝕃𝕠𝕠𝕜 𝕒𝕥 ℝ𝟙 𝕗𝕣𝕠𝕞 𝔹ℙ𝕊ℂ, 𝕚𝕥'𝕤 𝕒𝕝𝕞𝕠𝕤𝕥 𝕔𝕖𝕣𝕥𝕒𝕚𝕟𝕝𝕪 𝕒 𝕡𝕣𝕠𝕕𝕦𝕔𝕥 𝕠𝕗 𝕒 ℂ𝕙𝕚𝕟𝕖𝕤𝕖 𝕦𝕟𝕕𝕖𝕣𝕘𝕣𝕠𝕦𝕟𝕕 𝕕𝕒𝕥𝕒 𝕔𝕖𝕟𝕥𝕖𝕣, 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕥𝕙𝕖 𝕌𝕊 𝕚𝕤 𝕡𝕣𝕖𝕧𝕖𝕟𝕥𝕚𝕟𝕘 𝕥𝕙𝕖 𝕤𝕙𝕚𝕡𝕞𝕖𝕟𝕥 𝕠𝕗 ℍ𝟙𝟘𝟘 𝕔𝕙𝕚𝕡𝕤 𝕥𝕠 ℂ𝕙𝕚𝕟𝕒, ℕ𝕧𝕚𝕕𝕚𝕒'𝕤 𝕞𝕠𝕤𝕥 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕕 𝕔𝕙𝕚𝕡𝕤. 𝔹𝕦𝕥 ℂ𝕙𝕚𝕟𝕒 𝕙𝕒𝕤 𝕒 𝕝𝕠𝕥 𝕠𝕗 ℍ𝟠𝟘𝟘 𝕔𝕙𝕚𝕡𝕤. 𝕋𝕙𝕖 𝕣𝕦𝕞𝕠𝕦𝕣 𝕚𝕤 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖𝕪 𝕙𝕒𝕧𝕖 𝕒 𝕔𝕝𝕦𝕤𝕥𝕖𝕣 𝕠𝕗 𝟝𝟘,𝟘𝟘𝟘 ℍ𝟞𝟘𝟘 𝕔𝕙𝕚𝕡𝕤. 𝕋𝕠 𝕘𝕚𝕧𝕖 𝕪𝕠𝕦 𝕒𝕟 𝕚𝕕𝕖𝕒, 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕝𝕚𝕜𝕖 𝕄𝕚𝕔𝕣𝕠𝕤𝕠𝕗𝕥 𝕙𝕒𝕧𝕖 𝕙𝕒𝕝𝕗 𝕒 𝕞𝕚𝕝𝕝𝕚𝕠𝕟 ℍ𝟙𝟘𝟘 𝕔𝕙𝕚𝕡𝕤, 𝕨𝕙𝕚𝕔𝕙 𝕒𝕣𝕖 𝕥𝕙𝕖 𝕞𝕠𝕤𝕥 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕕. 𝔹𝕦𝕥 𝕥𝕙𝕒𝕥 𝕕𝕠𝕖𝕤𝕟'𝕥 𝕞𝕒𝕥𝕥𝕖𝕣, 𝕥𝕙𝕒𝕥 𝕚𝕗 𝕚𝕥 𝕔𝕠𝕤𝕥 𝟝 𝕞𝕚𝕝𝕝𝕚𝕠𝕟 𝕕𝕠𝕝𝕝𝕒𝕣𝕤, 𝕚𝕥 𝕕𝕠𝕖𝕤𝕟'𝕥 𝕞𝕒𝕥𝕥𝕖𝕣. 𝕀𝕥'𝕤 𝕡𝕣𝕠𝕓𝕒𝕓𝕝𝕪 𝕒 𝕝𝕚𝕖 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖 𝔻𝕚𝕡𝕤 ℝ𝟙 𝕞𝕠𝕕𝕖𝕝 𝕔𝕠𝕤𝕥 𝟝 𝕞𝕚𝕝𝕝𝕚𝕠𝕟 𝕕𝕠𝕝𝕝𝕒𝕣𝕤 𝕥𝕠 𝕥𝕣𝕒𝕚𝕟, 𝕟𝕠𝕟𝕖 𝕠𝕗 𝕥𝕙𝕒𝕥 𝕚𝕤 𝕚𝕞𝕡𝕠𝕣𝕥𝕒𝕟𝕥. 𝕋𝕙𝕖𝕣𝕖 𝕪𝕠𝕦 𝕒𝕣𝕖 𝕞𝕚𝕤𝕤𝕚𝕟𝕘 𝕥𝕙𝕖 𝕡𝕠𝕚𝕟𝕥 𝕠𝕗 𝕨𝕙𝕒𝕥 𝕚𝕤 𝕣𝕖𝕒𝕝𝕝𝕪 𝕤𝕚𝕘𝕟𝕚𝕗𝕚𝕔𝕒𝕟𝕥.

ℝ𝟙 𝕔𝕒𝕟 𝕓𝕖 𝕣𝕖𝕡𝕝𝕚𝕔𝕒𝕥𝕖𝕕. ℍ𝕦𝕘𝕘𝕚𝕟𝕘 𝔽𝕒𝕔𝕖, 𝕥𝕙𝕖 ℍ𝕦𝕘𝕘𝕚𝕟𝕘 𝔽𝕒𝕔𝕖 𝕥𝕖𝕒𝕞, 𝕡𝕣𝕠𝕓𝕒𝕓𝕝𝕪 𝕥𝕙𝕖 𝕝𝕖𝕒𝕕𝕖𝕣𝕤 𝕚𝕟 𝕞𝕒𝕜𝕚𝕟𝕘 𝕥𝕙𝕚𝕟𝕘𝕤 𝕝𝕚𝕜𝕖 ℝ𝟙 𝕠𝕡𝕖𝕟𝕝𝕪 𝕒𝕧𝕒𝕚𝕝𝕒𝕓𝕝𝕖 𝕥𝕠 𝕥𝕙𝕖 𝕨𝕠𝕣𝕝𝕕, 𝕒𝕣𝕖 𝕔𝕦𝕣𝕣𝕖𝕟𝕥𝕝𝕪 𝕕𝕠𝕚𝕟𝕘 𝕒 𝕡𝕣𝕠𝕛𝕖𝕔𝕥 𝕥𝕠 𝕣𝕖𝕡𝕝𝕚𝕔𝕒𝕥𝕖 𝕥𝕙𝕖 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘 𝕥𝕙𝕖𝕪 𝕕𝕚𝕕 𝕠𝕟 ℝ𝟙, 𝕥𝕙𝕖 𝕡𝕖𝕠𝕡𝕝𝕖 𝕗𝕣𝕠𝕞 𝕥𝕙𝕖 𝕔𝕠𝕞𝕡𝕒𝕟𝕪 𝕥𝕙𝕒𝕥 𝕔𝕣𝕖𝕒𝕥𝕖𝕕 𝔻𝕚𝕡𝕤𝕪, 𝕒𝕟𝕕 𝕥𝕙𝕦𝕤 𝕔𝕠𝕟𝕗𝕚𝕣𝕞 𝕥𝕙𝕒𝕥 𝕚𝕥 𝕔𝕒𝕟 𝕓𝕖 𝕕𝕠𝕟𝕖. 𝕋𝕙𝕖 𝕆𝕡𝕖𝕟𝔸𝕀 𝕥𝕖𝕒𝕞 𝕚𝕥𝕤𝕖𝕝𝕗 𝕙𝕒𝕤 𝕒𝕝𝕣𝕖𝕒𝕕𝕪 𝕒𝕟𝕟𝕠𝕦𝕟𝕔𝕖𝕕 𝕥𝕙𝕒𝕥 𝕓𝕪 𝕣𝕖𝕒𝕕𝕚𝕟𝕘 𝕥𝕙𝕖 𝔻𝕚𝕡𝕤 ℝ𝟙 𝕡𝕒𝕡𝕖𝕣 𝕥𝕙𝕖𝕪 𝕗𝕠𝕦𝕟𝕕 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖 𝕔𝕠𝕞𝕡𝕒𝕟𝕪 𝕞𝕒𝕟𝕒𝕘𝕖𝕕 𝕥𝕠 𝕚𝕞𝕡𝕝𝕖𝕞𝕖𝕟𝕥 𝕤𝕖𝕧𝕖𝕣𝕒𝕝 𝕠𝕗 𝕥𝕙𝕖 𝕚𝕟𝕟𝕠𝕧𝕒𝕥𝕚𝕠𝕟𝕤 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖 𝕤𝕒𝕞𝕖 𝕥𝕖𝕒𝕞 𝕥𝕙𝕠𝕦𝕘𝕙𝕥 𝕠𝕗 𝕗𝕠𝕣 𝔾ℙ𝕋-𝟜. ℝ𝟙, 𝕥𝕙𝕖 ℂ𝕙𝕚𝕟𝕖𝕤𝕖 𝕞𝕠𝕕𝕖𝕝, 𝕚𝕤 𝟙.𝟝 𝕠𝕣𝕕𝕖𝕣𝕤 𝕠𝕗 𝕞𝕒𝕘𝕟𝕚𝕥𝕦𝕕𝕖 𝕔𝕙𝕖𝕒𝕡𝕖𝕣 𝕥𝕙𝕒𝕟 𝔾ℙ𝕋-𝟜, 𝕨𝕚𝕥𝕙 𝕧𝕖𝕣𝕪, 𝕧𝕖𝕣𝕪, 𝕧𝕖𝕣𝕪 𝕤𝕚𝕞𝕚𝕝𝕒𝕣 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕡𝕠𝕨𝕖𝕣. 𝕎𝕙𝕚𝕔𝕙, 𝕨𝕖𝕝𝕝, 𝕥𝕙𝕒𝕥'𝕤 𝕚𝕞𝕡𝕣𝕖𝕤𝕤𝕚𝕧𝕖 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕚𝕥 𝕞𝕖𝕒𝕟𝕤 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖 𝕔𝕠𝕤𝕥 𝕠𝕗 𝕚𝕟𝕗𝕖𝕣𝕖𝕟𝕔𝕖 𝕚𝕤 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕘𝕠 𝕕𝕠𝕨𝕟. 𝔸𝕟𝕕 𝕥𝕙𝕖𝕟 𝕒𝕝𝕝 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤, 𝕒𝕝𝕝 𝕤𝕥𝕒𝕣𝕥𝕦𝕡𝕤 𝕙𝕒𝕧𝕖 𝕒𝕔𝕔𝕖𝕤𝕤 𝕥𝕠 𝕒 𝕗𝕣𝕠𝕟𝕥𝕚𝕖𝕣 𝕞𝕠𝕕𝕖𝕝 𝕝𝕚𝕜𝕖 𝕆𝕡𝕖𝕟𝔸𝕀'𝕤 𝔾ℙ𝕋-𝟜 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕚𝕟𝕧𝕖𝕤𝕥𝕚𝕟𝕘 𝕥𝕙𝕖 𝕞𝕚𝕝𝕝𝕚𝕠𝕟𝕤 𝕠𝕗 𝕕𝕠𝕝𝕝𝕒𝕣𝕤 𝕠𝕗 𝕆𝕡𝕖𝕟𝔸𝕀.

ℕ𝕖𝕩𝕥 𝕔𝕠𝕞𝕖𝕤 𝕒 𝕧𝕖𝕣𝕪 𝕟𝕖𝕣𝕕𝕪 𝕒𝕟𝕕 𝕖𝕟𝕘𝕚𝕟𝕖𝕖𝕣𝕚𝕟𝕘 𝕖𝕩𝕡𝕝𝕒𝕟𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 ℝ𝟙. 𝕎𝕖𝕝𝕝, 𝕟𝕠𝕥 𝕤𝕠 𝕟𝕖𝕣𝕕𝕪, 𝕀 𝕙𝕒𝕧𝕖 𝕞𝕪 𝕝𝕚𝕞𝕚𝕥𝕤 𝕒𝕟𝕕 𝕀 𝕕𝕠𝕟'𝕥 𝕜𝕟𝕠𝕨 𝕥𝕙𝕒𝕥 𝕞𝕦𝕔𝕙, 𝕓𝕦𝕥 𝕀'𝕝𝕝 𝕕𝕠 𝕞𝕪 𝕓𝕖𝕤𝕥. ℝ𝟙 𝕙𝕒𝕤 𝕞𝕒𝕟𝕪 𝕓𝕣𝕚𝕝𝕝𝕚𝕒𝕟𝕥 𝕥𝕙𝕚𝕟𝕘𝕤. 𝔽𝕠𝕣 𝕖𝕩𝕒𝕞𝕡𝕝𝕖, 𝕚𝕟𝕤𝕥𝕖𝕒𝕕 𝕠𝕗 𝕦𝕤𝕚𝕟𝕘 𝟙𝟞-𝕓𝕚𝕥 𝕗𝕝𝕠𝕒𝕥𝕚𝕟𝕘 𝕡𝕠𝕚𝕟𝕥 𝕕𝕖𝕔𝕚𝕞𝕒𝕝𝕤, 𝕥𝕙𝕖𝕪 𝕒𝕣𝕖 𝕦𝕤𝕚𝕟𝕘 𝟠-𝕓𝕚𝕥 𝕗𝕝𝕠𝕒𝕥𝕚𝕟𝕘 𝕡𝕠𝕚𝕟𝕥 𝕕𝕖𝕔𝕚𝕞𝕒𝕝𝕤. 𝕋𝕙𝕒𝕥 𝕓𝕒𝕤𝕚𝕔𝕒𝕝𝕝𝕪 𝕞𝕖𝕒𝕟𝕤 𝕥𝕙𝕒𝕥 𝕚𝕟 𝕥𝕙𝕖 𝕟𝕖𝕦𝕣𝕒𝕝 𝕟𝕖𝕥𝕨𝕠𝕣𝕜 𝕥𝕙𝕖 𝕟𝕦𝕞𝕓𝕖𝕣𝕤 𝕥𝕙𝕒𝕥 𝕨𝕖𝕣𝕖 𝕡𝕣𝕖𝕧𝕚𝕠𝕦𝕤𝕝𝕪 𝕒𝕔𝕔𝕦𝕣𝕒𝕥𝕖 𝕥𝕠 𝕒 𝕓𝕦𝕟𝕔𝕙 𝕠𝕗 𝕕𝕖𝕔𝕚𝕞𝕒𝕝 𝕡𝕝𝕒𝕔𝕖𝕤 𝕒𝕣𝕖 𝕟𝕠𝕨 𝕒𝕔𝕔𝕦𝕣𝕒𝕥𝕖 𝕥𝕠 𝕙𝕒𝕝𝕗 𝕥𝕙𝕒𝕥 𝕟𝕦𝕞𝕓𝕖𝕣. 𝔸𝕟𝕕 𝕥𝕙𝕒𝕥 𝕞𝕖𝕒𝕟𝕤 𝕥𝕙𝕖𝕪 𝕦𝕤𝕖 𝕝𝕖𝕤𝕤 𝕞𝕖𝕞𝕠𝕣𝕪 𝕒𝕟𝕕 𝕝𝕖𝕤𝕤 𝕔𝕠𝕞𝕡𝕦𝕥𝕒𝕥𝕚𝕠𝕟𝕒𝕝 𝕡𝕠𝕨𝕖𝕣, 𝕨𝕙𝕚𝕔𝕙 𝕞𝕒𝕜𝕖𝕤 𝕥𝕙𝕖𝕞 𝕗𝕒𝕤𝕥𝕖𝕣. 𝕋𝕙𝕖𝕪'𝕣𝕖 𝕒𝕝𝕤𝕠 𝕦𝕤𝕚𝕟𝕘 𝕒 𝕔𝕠𝕞𝕡𝕦𝕥𝕖𝕣 𝕤𝕔𝕚𝕖𝕟𝕔𝕖 𝕥𝕖𝕔𝕙𝕟𝕚𝕢𝕦𝕖 𝕔𝕒𝕝𝕝𝕖𝕕 𝕓𝕚𝕥 𝕤𝕙𝕚𝕗𝕥𝕚𝕟𝕘. 𝕎𝕙𝕖𝕟 𝕪𝕠𝕦 𝕡𝕣𝕠𝕘𝕣𝕒𝕞, 𝕪𝕠𝕦 𝕠𝕟𝕝𝕪 𝕥𝕙𝕚𝕟𝕜 𝕚𝕟 𝕓𝕪𝕥𝕖𝕤 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕚𝕥'𝕤 𝕞𝕠𝕣𝕖 𝕡𝕣𝕒𝕔𝕥𝕚𝕔𝕒𝕝, 𝕓𝕦𝕥 𝕪𝕠𝕦 𝕔𝕒𝕟 𝕞𝕠𝕧𝕖 𝕓𝕚𝕥 𝕓𝕪 𝕓𝕚𝕥, 𝕖𝕧𝕖𝕣𝕪 𝕤𝕚𝕟𝕘𝕝𝕖 𝕓𝕚𝕥 𝕠𝕗 𝕥𝕙𝕖 𝕧𝕒𝕣𝕚𝕒𝕓𝕝𝕖𝕤 𝕒𝕟𝕕 𝕞𝕖𝕤𝕤𝕒𝕘𝕖𝕤 𝕪𝕠𝕦 𝕤𝕖𝕟𝕕 𝕥𝕠 𝕥𝕙𝕖 ℂℙ𝕌. 𝕀𝕟 ℂ, 𝕚𝕥 𝕚𝕤 𝕞𝕠𝕣𝕖, 𝕗𝕠𝕣 𝕖𝕩𝕒𝕞𝕡𝕝𝕖, 𝕥𝕙𝕖 𝕤𝕚𝕘𝕟 𝕕𝕠𝕦𝕓𝕝𝕖 𝕘𝕣𝕖𝕒𝕥𝕖𝕣 𝕥𝕙𝕒𝕟 𝕠𝕣 𝕕𝕠𝕦𝕓𝕝𝕖 𝕝𝕖𝕤𝕤 𝕥𝕙𝕒𝕟 𝕚𝕤 𝕒 𝕤𝕚𝕘𝕟 𝕥𝕙𝕒𝕥 𝕒𝕝𝕝𝕠𝕨𝕤 𝕦𝕤 𝕥𝕠 𝕕𝕠 𝕓𝕚𝕥 𝕤𝕙𝕚𝕗𝕥. 𝕋𝕙𝕚𝕤 𝕚𝕤 𝕗𝕦𝕟𝕕𝕒𝕞𝕖𝕟𝕥𝕒𝕝, 𝕗𝕠𝕣 𝕖𝕩𝕒𝕞𝕡𝕝𝕖, 𝕚𝕟 𝕔𝕠𝕞𝕡𝕣𝕖𝕤𝕤𝕚𝕠𝕟 𝕒𝕝𝕘𝕠𝕣𝕚𝕥𝕙𝕞𝕤, 𝕚𝕟 𝕔𝕠𝕕𝕖𝕔𝕤, 𝕒𝕟𝕕 𝕟𝕠𝕨 𝕚𝕥'𝕤 𝕗𝕦𝕟𝕕𝕒𝕞𝕖𝕟𝕥𝕒𝕝 𝕚𝕟 𝔸𝕀. 𝔸𝕝𝕝 𝕠𝕗 𝕥𝕙𝕚𝕤 𝕞𝕖𝕒𝕟𝕤 𝕥𝕙𝕒𝕥 𝕝𝕖𝕤𝕤 ℝ𝔸𝕄 𝕚𝕤 𝕦𝕤𝕖𝕕 𝕒𝕟𝕕 𝕝𝕖𝕤𝕤 𝕡𝕣𝕠𝕔𝕖𝕤𝕤𝕠𝕣 𝕔𝕒𝕡𝕒𝕔𝕚𝕥𝕪 𝕚𝕤 𝕟𝕖𝕖𝕕𝕖𝕕. 𝕋𝕙𝕖𝕣𝕖 𝕒𝕣𝕖 𝕠𝕥𝕙𝕖𝕣 𝕥𝕙𝕚𝕟𝕘𝕤 𝕥𝕙𝕖𝕪 𝕕𝕚𝕕, 𝕝𝕚𝕜𝕖 𝕔𝕒𝕔𝕙𝕚𝕟𝕘 𝕒𝕥𝕥𝕖𝕟𝕥𝕚𝕠𝕟 𝕔𝕠𝕞𝕡𝕦𝕥𝕒𝕥𝕚𝕠𝕟, 𝕨𝕙𝕚𝕔𝕙 𝕚𝕤 𝕥𝕙𝕖 𝕔𝕠𝕟𝕤𝕚𝕕𝕖𝕣𝕒𝕓𝕝𝕖 𝕤𝕪𝕤𝕥𝕖𝕞 𝕥𝕙𝕒𝕥 𝕞𝕠𝕕𝕖𝕣𝕟 𝔾ℙ𝕋-𝕓𝕒𝕤𝕖𝕕 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕞𝕠𝕕𝕖𝕝𝕤 𝕣𝕦𝕟 𝕠𝕟.

𝔸𝕟𝕠𝕥𝕙𝕖𝕣 𝕔𝕣𝕒𝕫𝕪 𝕥𝕙𝕚𝕟𝕘 𝕚𝕤 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖𝕪 𝕠𝕡𝕥𝕚𝕞𝕚𝕤𝕖𝕕 𝕥𝕙𝕖 𝕔𝕠𝕕𝕖 𝕤𝕡𝕖𝕔𝕚𝕗𝕚𝕔𝕒𝕝𝕝𝕪 𝕗𝕠𝕣 𝕥𝕙𝕖 ℍ𝟠𝟘𝟘 𝕔𝕙𝕚𝕡, 𝕨𝕙𝕚𝕔𝕙 𝕙𝕒𝕤 𝕞𝕖𝕞𝕠𝕣𝕪 𝕝𝕚𝕞𝕚𝕥𝕒𝕥𝕚𝕠𝕟𝕤, 𝕒𝕟𝕕 𝕚𝕟𝕤𝕥𝕖𝕒𝕕 𝕠𝕗 𝕦𝕤𝕚𝕟𝕘 ℂ𝕌𝔻𝔸 (ℂ𝕌𝔻𝔸 𝕚𝕤 𝕝𝕚𝕜𝕖 𝕥𝕙𝕖 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖 𝕥𝕙𝕒𝕥 ℕ𝕧𝕚𝕕𝕚𝕒 𝕦𝕤𝕖𝕤 𝕥𝕠 𝕡𝕣𝕠𝕘𝕣𝕒𝕞 𝕥𝕙𝕖 𝕞𝕒𝕔𝕙𝕚𝕟𝕖 𝕔𝕠𝕕𝕖 𝕕𝕚𝕣𝕖𝕔𝕥𝕝𝕪), 𝕥𝕙𝕖𝕪 𝕨𝕖𝕟𝕥 𝕥𝕠 𝕡𝕣𝕠𝕘𝕣𝕒𝕞𝕞𝕚𝕟𝕘 𝕕𝕚𝕣𝕖𝕔𝕥𝕝𝕪 𝕚𝕟 𝕒𝕤𝕤𝕖𝕞𝕓𝕝𝕖𝕣. 𝕋𝕙𝕖𝕪 𝕡𝕣𝕠𝕘𝕣𝕒𝕞𝕞𝕖𝕕 𝕥𝕙𝕖 𝕞𝕖𝕥𝕒𝕝 𝕝𝕚𝕜𝕖 𝕪𝕠𝕦 𝕕𝕠 𝕨𝕙𝕖𝕟 𝕪𝕠𝕦'𝕣𝕖 𝕡𝕣𝕠𝕘𝕣𝕒𝕞𝕞𝕚𝕟𝕘 𝕒 𝕤𝕒𝕥𝕖𝕝𝕝𝕚𝕥𝕖 𝕠𝕣 𝕒 𝕞𝕚𝕤𝕤𝕚𝕝𝕖, 𝕥𝕙𝕖 𝕨𝕒𝕪 𝕔𝕠𝕞𝕡𝕦𝕥𝕖𝕣𝕤 𝕦𝕤𝕖𝕕 𝕥𝕠 𝕓𝕖 𝕡𝕣𝕠𝕘𝕣𝕒𝕞𝕞𝕖𝕕. 𝕀𝕟 𝕥𝕙𝕒𝕥 𝕨𝕒𝕪, 𝕥𝕙𝕖𝕪 𝕓𝕪𝕡𝕒𝕤𝕤𝕖𝕕 𝕞𝕒𝕟𝕪 𝕠𝕗 𝕥𝕙𝕖 𝕣𝕖𝕤𝕥𝕣𝕚𝕔𝕥𝕚𝕠𝕟𝕤 𝕒𝕟𝕕 𝕞𝕒𝕩𝕚𝕞𝕚𝕤𝕖𝕕 𝕥𝕙𝕖 𝕦𝕤𝕖 𝕠𝕗 𝕚𝕟𝕗𝕖𝕣𝕚𝕠𝕣 𝕔𝕙𝕚𝕡𝕤 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕠𝕗 𝕥𝕙𝕖 𝕌𝕊 𝕣𝕖𝕤𝕥𝕣𝕚𝕔𝕥𝕚𝕠𝕟 𝕠𝕟 𝕖𝕩𝕡𝕠𝕣𝕥𝕚𝕟𝕘 𝕔𝕙𝕚𝕡𝕤 𝕥𝕠 ℂ𝕙𝕚𝕟𝕒. 𝔸𝕝𝕝 𝕥𝕙𝕚𝕤 𝕚𝕟 𝕚𝕥𝕤𝕖𝕝𝕗 𝕞𝕒𝕜𝕖𝕤 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕣𝕦𝕟 𝕞𝕦𝕔𝕙 𝕗𝕒𝕤𝕥𝕖𝕣 𝕨𝕚𝕥𝕙 𝕗𝕒𝕣 𝕗𝕖𝕨𝕖𝕣 𝕣𝕖𝕤𝕠𝕦𝕣𝕔𝕖𝕤. 𝔹𝕦𝕥 𝕥𝕙𝕖𝕪 𝕒𝕝𝕤𝕠 𝕔𝕙𝕒𝕟𝕘𝕖𝕕 𝕥𝕙𝕖 𝕤𝕥𝕣𝕒𝕥𝕖𝕘𝕪 𝕚𝕟 𝕨𝕙𝕚𝕔𝕙 𝕥𝕙𝕖𝕪 𝕥𝕣𝕒𝕚𝕟 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝. 𝕋𝕠 𝕢𝕦𝕚𝕔𝕜𝕝𝕪 𝕦𝕟𝕕𝕖𝕣𝕤𝕥𝕒𝕟𝕕 𝕙𝕠𝕨 𝕥𝕠 𝕥𝕣𝕒𝕚𝕟 𝕒 𝕞𝕠𝕕𝕖𝕝, 𝕨𝕖 𝕙𝕒𝕧𝕖 𝕒 𝕓𝕒𝕤𝕖 𝕞𝕠𝕕𝕖𝕝 𝕨𝕙𝕚𝕔𝕙 𝕚𝕤 𝕒𝕝𝕝 𝕥𝕙𝕖 𝕥𝕣𝕒𝕟𝕤𝕗𝕠𝕣𝕞𝕖𝕣𝕤. 𝕀𝕥'𝕤 𝕥𝕒𝕜𝕚𝕟𝕘 𝕒𝕝𝕝 𝕥𝕙𝕖 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖 𝕠𝕗 𝕙𝕦𝕞𝕒𝕟 𝕔𝕦𝕝𝕥𝕦𝕣𝕖 𝕒𝕟𝕕 𝕤𝕥𝕣𝕦𝕔𝕥𝕦𝕣𝕚𝕟𝕘 𝕚𝕥 𝕚𝕟𝕥𝕠 𝕒 𝕞𝕒𝕥𝕣𝕚𝕩 𝕥𝕙𝕒𝕥 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖𝕤 𝕥𝕙𝕖 𝕨𝕙𝕠𝕝𝕖 𝕟𝕖𝕥𝕨𝕠𝕣𝕜 𝕠𝕗 𝕥𝕙𝕖 𝕒𝕥𝕥𝕖𝕟𝕥𝕚𝕠𝕟 𝕞𝕠𝕕𝕖𝕝, 𝕒𝕟𝕕 𝕥𝕙𝕖𝕟 𝕤𝕥𝕒𝕥𝕚𝕤𝕥𝕚𝕔𝕒𝕝𝕝𝕪 𝕡𝕣𝕖𝕕𝕚𝕔𝕥𝕤 𝕥𝕙𝕖 𝕟𝕖𝕩𝕥 𝕨𝕠𝕣𝕕 𝕚𝕟 𝕒 𝕤𝕥𝕣𝕚𝕟𝕘 𝕠𝕗 𝕥𝕖𝕩𝕥. 𝕋𝕙𝕒𝕥'𝕤 𝕙𝕠𝕨 𝕒𝕝𝕝 𝕘𝕣𝕖𝕒𝕥 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖 𝕞𝕠𝕕𝕖𝕝𝕤 𝕨𝕠𝕣𝕜.

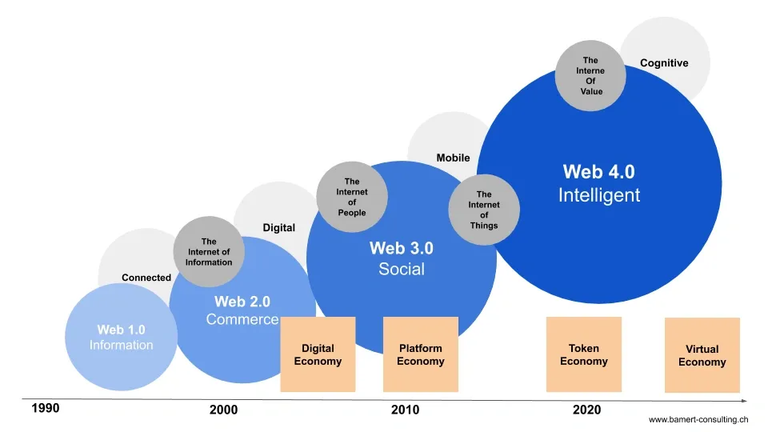

Hive and Web 4.0 - Extending The Standard, Intelligently

𝔹𝕦𝕥 𝕥𝕙𝕒𝕥 𝕣𝕖𝕤𝕡𝕠𝕟𝕤𝕖 𝕚𝕤 𝕟𝕠𝕥 𝕖𝕟𝕠𝕦𝕘𝕙. 𝕋𝕙𝕖 𝕒𝕟𝕤𝕨𝕖𝕣𝕤 𝕥𝕙𝕒𝕥 𝕃𝕃𝕄𝕤 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖 𝕒𝕣𝕖 𝕟𝕠𝕥 𝕒𝕝𝕨𝕒𝕪𝕤 𝕒𝕕𝕒𝕡𝕥𝕒𝕓𝕝𝕖 𝕥𝕠 𝕙𝕦𝕞𝕒𝕟𝕤, 𝕤𝕠 𝕪𝕠𝕦 𝕟𝕖𝕖𝕕 𝕒 𝕣𝕖𝕨𝕒𝕣𝕕 𝕒𝕝𝕘𝕠𝕣𝕚𝕥𝕙𝕞, 𝕒 𝕣𝕖𝕨𝕒𝕣𝕕 𝕗𝕦𝕟𝕔𝕥𝕚𝕠𝕟 𝕨𝕙𝕖𝕣𝕖 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕗𝕖𝕖𝕝𝕤 𝕣𝕖𝕨𝕒𝕣𝕕𝕖𝕕 𝕗𝕠𝕣 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕚𝕟𝕘 𝕙𝕦𝕞𝕒𝕟-𝕣𝕖𝕒𝕕𝕒𝕓𝕝𝕖 𝕒𝕟𝕤𝕨𝕖𝕣𝕤 𝕥𝕙𝕒𝕥 𝕗𝕖𝕖𝕝 𝕝𝕚𝕜𝕖 𝕒 𝕔𝕠𝕟𝕧𝕖𝕣𝕤𝕒𝕥𝕚𝕠𝕟. 𝕋𝕙𝕒𝕥'𝕤 𝕥𝕙𝕖 𝕚𝕟𝕟𝕠𝕧𝕒𝕥𝕚𝕠𝕟 𝕠𝕗 𝕆𝕡𝕖𝕟𝔸𝕀, 𝕨𝕙𝕖𝕣𝕖𝕓𝕪 𝕙𝕚𝕣𝕚𝕟𝕘 𝕥𝕙𝕠𝕦𝕤𝕒𝕟𝕕𝕤 𝕒𝕟𝕕 𝕥𝕙𝕠𝕦𝕤𝕒𝕟𝕕𝕤 𝕒𝕟𝕕 𝕥𝕙𝕠𝕦𝕤𝕒𝕟𝕕𝕤 𝕠𝕗 𝕡𝕖𝕠𝕡𝕝𝕖, 𝕒𝕡𝕡𝕒𝕣𝕖𝕟𝕥𝕝𝕪 𝕚𝕟 𝕂𝕖𝕟𝕪𝕒, 𝕥𝕙𝕖𝕪 𝕘𝕠𝕥 𝕥𝕙𝕠𝕤𝕖 𝕡𝕖𝕠𝕡𝕝𝕖 𝕥𝕒𝕝𝕜𝕚𝕟𝕘. ℙ𝕖𝕠𝕡𝕝𝕖 𝕨𝕚𝕥𝕙 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕒𝕟𝕕 𝕥𝕖𝕝𝕝 𝕪𝕠𝕦 𝕧𝕖𝕣𝕪 𝕨𝕖𝕝𝕝 𝕠𝕣 𝕧𝕖𝕣𝕪 𝕓𝕒𝕕𝕝𝕪. 𝔼𝕧𝕖𝕣𝕪 𝕥𝕚𝕞𝕖 𝕙𝕖 𝕣𝕖𝕤𝕡𝕠𝕟𝕕𝕖𝕕 𝕒𝕤 𝕚𝕗 𝕚𝕥 𝕨𝕒𝕤 𝕒 𝕔𝕙𝕒𝕥, 𝕥𝕙𝕚𝕤 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕚𝕤 𝕔𝕒𝕝𝕝𝕖𝕕 𝕣𝕝𝕗 𝕠𝕣 𝕣𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕨𝕚𝕥𝕙 𝕙𝕦𝕞𝕒𝕟 𝕗𝕖𝕖𝕕𝕓𝕒𝕔𝕜. 𝔸𝕝𝕝 𝕥𝕙𝕒𝕥 𝕗𝕚𝕟𝕚𝕤𝕙𝕖𝕤 𝕥𝕦𝕟𝕚𝕟𝕘 𝕒𝕟𝕕 𝕣𝕖𝕗𝕚𝕟𝕚𝕟𝕘 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝, 𝕨𝕖 𝕜𝕟𝕠𝕨 𝕚𝕥 𝕒𝕤 𝕤𝕦𝕡𝕖𝕣𝕧𝕚𝕤𝕖 𝕗𝕚𝕟𝕖-𝕥𝕦𝕟𝕚𝕟𝕘, 𝕒𝕟𝕕 𝕒𝕝𝕞𝕠𝕤𝕥 𝕒𝕝𝕝 𝕞𝕠𝕕𝕖𝕝𝕤 𝕙𝕒𝕧𝕖 𝕥𝕙𝕚𝕤. 𝔸𝕗𝕥𝕖𝕣 𝕤𝕦𝕡𝕖𝕣𝕧𝕚𝕤𝕖 𝕗𝕚𝕟𝕖-𝕥𝕦𝕟𝕚𝕟𝕘 𝕔𝕠𝕞𝕖𝕤 𝕒 𝕤𝕖𝕔𝕠𝕟𝕕 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕔𝕒𝕝𝕝𝕖𝕕 𝕡𝕣𝕖𝕗𝕖𝕣𝕖𝕟𝕔𝕖 𝕥𝕦𝕟𝕚𝕟𝕘, 𝕨𝕙𝕚𝕔𝕙 𝕚𝕤 𝕨𝕙𝕒𝕥 𝕞𝕒𝕜𝕖𝕤 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕙𝕒𝕧𝕖 𝕔𝕖𝕣𝕥𝕒𝕚𝕟 𝕣𝕖𝕤𝕥𝕣𝕚𝕔𝕥𝕚𝕠𝕟𝕤, 𝕚𝕥 𝕥𝕖𝕝𝕝𝕤 𝕪𝕠𝕦 𝕒𝕤 𝕒 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖 𝕞𝕠𝕕𝕖𝕝 𝕥𝕙𝕒𝕥 𝕀 𝕔𝕒𝕟𝕟𝕠𝕥 𝕕𝕠 𝕥𝕙𝕒𝕥 𝕒𝕟𝕕 𝕚𝕥 𝕥𝕒𝕝𝕜𝕤 𝕥𝕠 𝕪𝕠𝕦 𝕒𝕓𝕠𝕦𝕥 𝕥𝕙𝕖 𝕒𝕝𝕚𝕘𝕟𝕞𝕖𝕟𝕥𝕤 𝕤𝕠 𝕥𝕙𝕒𝕥 𝕚𝕥 𝕚𝕤 𝕒𝕝𝕚𝕘𝕟𝕖𝕕 𝕨𝕚𝕥𝕙 𝕥𝕙𝕖 𝕙𝕦𝕞𝕒𝕟 𝕣𝕒𝕔𝕖. ℝ𝟙 𝕕𝕠𝕖𝕤𝕟'𝕥 𝕦𝕤𝕖 𝕙𝕦𝕞𝕒𝕟𝕤, 𝕚𝕥 𝕕𝕠𝕖𝕤𝕟'𝕥 𝕟𝕖𝕖𝕕 𝕣𝕖𝕗𝕠𝕣𝕞 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕨𝕚𝕥𝕙 𝕙𝕦𝕞𝕒𝕟 𝕗𝕖𝕖𝕕𝕓𝕒𝕔𝕜.

𝕋𝕙𝕖 𝕣𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕨𝕒𝕤 𝕕𝕠𝕟𝕖 𝕨𝕚𝕥𝕙 𝕒𝕟 𝕖𝕒𝕣𝕝𝕚𝕖𝕣 𝕞𝕠𝕕𝕖𝕝 𝕥𝕙𝕖𝕪 𝕔𝕣𝕖𝕒𝕥𝕖𝕕 𝕔𝕒𝕝𝕝𝕖𝕕 ℝ𝟙𝟘, 𝕨𝕙𝕚𝕔𝕙 𝕚𝕤 𝕥𝕙𝕖 𝕞𝕒𝕕𝕟𝕖𝕤𝕤 𝕠𝕗 𝕟𝕠𝕥 𝕞𝕒𝕜𝕚𝕟𝕘 𝕚𝕥 𝕥𝕠𝕠 𝕔𝕠𝕞𝕡𝕝𝕖𝕩 𝕨𝕚𝕥𝕙 𝕒𝕟 𝕚𝕟𝕚𝕥𝕚𝕒𝕝 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘 𝕞𝕠𝕕𝕖𝕝. 𝕋𝕙𝕖𝕪 𝕓𝕦𝕚𝕝𝕥 𝟞𝟘𝟘,𝟘𝟘𝟘 𝕖𝕩𝕒𝕞𝕡𝕝𝕖𝕤 𝕠𝕗 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕚𝕟 𝕨𝕙𝕚𝕔𝕙 𝕒 𝕢𝕦𝕖𝕤𝕥𝕚𝕠𝕟 𝕙𝕒𝕤 𝕒 𝕣𝕖𝕒𝕤𝕠𝕟𝕖𝕕 𝕒𝕟𝕤𝕨𝕖𝕣 𝕒𝕟𝕕 𝕥𝕙𝕠𝕤𝕖 𝟞𝟘𝟘𝟘 𝕖𝕩𝕒𝕞𝕡𝕝𝕖𝕤 𝕠𝕗 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕨𝕖𝕣𝕖 𝕗𝕠𝕣𝕔𝕖𝕕 𝕚𝕟𝕥𝕠 𝕥𝕙𝕖 𝕗𝕚𝕟𝕒𝕝 𝕞𝕠𝕕𝕖𝕝 𝕥𝕙𝕒𝕥 𝕥𝕙𝕖𝕪 𝕥𝕣𝕒𝕚𝕟𝕖𝕕. 𝔹𝕦𝕥 𝕙𝕠𝕨 𝕕𝕚𝕕 𝕥𝕙𝕖𝕪 𝕕𝕠 𝕚𝕥 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕙𝕦𝕞𝕒𝕟𝕤 𝕥𝕠 𝕕𝕖𝕥𝕖𝕔𝕥 𝕨𝕙𝕖𝕥𝕙𝕖𝕣 𝕒 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕒𝕟𝕤𝕨𝕖𝕣 𝕨𝕒𝕤 𝕣𝕚𝕘𝕙𝕥 𝕠𝕣 𝕨𝕣𝕠𝕟𝕘? 𝕎𝕖𝕝𝕝, 𝕥𝕙𝕖𝕪 𝕒𝕤𝕜𝕖𝕕 𝕚𝕥 𝕢𝕦𝕖𝕤𝕥𝕚𝕠𝕟𝕤 𝕥𝕙𝕒𝕥 𝕟𝕠𝕣𝕞𝕒𝕝 𝕔𝕠𝕞𝕡𝕦𝕥𝕚𝕟𝕘 𝕔𝕠𝕦𝕝𝕕 𝕧𝕖𝕣𝕚𝕗𝕪. 𝔽𝕠𝕣 𝕖𝕩𝕒𝕞𝕡𝕝𝕖, 𝕚𝕗 𝕀 𝕒𝕤𝕜 𝕒 𝕞𝕠𝕕𝕖𝕝 𝕥𝕠 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖 𝟝𝟘𝟘 𝕕𝕚𝕗𝕗𝕖𝕣𝕖𝕟𝕥 𝕞𝕒𝕥𝕙𝕖𝕞𝕒𝕥𝕚𝕔𝕒𝕝 𝕖𝕢𝕦𝕒𝕥𝕚𝕠𝕟𝕤 𝕥𝕙𝕒𝕥 𝕝𝕖𝕒𝕕 𝕞𝕖 𝕥𝕠 𝕥𝕙𝕖 𝕟𝕦𝕞𝕓𝕖𝕣 𝟜𝟚, 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕨𝕚𝕝𝕝 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖 𝕒 𝕝𝕠𝕥 𝕠𝕗 𝕠𝕡𝕥𝕚𝕠𝕟𝕤 𝕒𝕟𝕕 𝕚𝕥 𝕞𝕚𝕘𝕙𝕥 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖 𝟚𝟘𝟘𝟘. 𝕋𝕙𝕖𝕟, 𝕥𝕙𝕖 𝟙𝟘𝟘 𝕥𝕙𝕒𝕥 𝕕𝕚𝕕𝕟'𝕥 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖 𝕥𝕙𝕖 𝕟𝕦𝕞𝕓𝕖𝕣 𝟜𝟚 𝕝𝕚𝕥𝕖𝕣𝕒𝕝𝕝𝕪 𝕤𝕔𝕠𝕝𝕕𝕖𝕕 𝕚𝕥 𝕨𝕚𝕥𝕙 𝕥𝕙𝕖 𝕣𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕤𝕪𝕤𝕥𝕖𝕞. 𝕋𝕙𝕚𝕤 𝕚𝕤 𝕟𝕠𝕥 𝕒𝕟𝕕 𝕥𝕙𝕖 𝟝𝟘𝟘 𝕥𝕙𝕒𝕥 𝕕𝕚𝕕 𝕘𝕖𝕥 𝕥𝕠 𝕟𝕦𝕞𝕓𝕖𝕣 𝟜𝟚, 𝕨𝕖𝕝𝕝 𝕀 𝕔𝕒𝕟 𝕔𝕙𝕖𝕔𝕜 𝕥𝕙𝕖𝕞 𝕨𝕚𝕥𝕙 𝕒𝕟𝕪 𝕡𝕣𝕠𝕘𝕣𝕒𝕞𝕞𝕚𝕟𝕘 𝕔𝕠𝕕𝕖 𝕒𝕟𝕕 𝕥𝕖𝕝𝕝 𝕚𝕥 𝕥𝕙𝕚𝕤 𝕚𝕤 𝕒 𝕘𝕠𝕠𝕕 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕒𝕟𝕕 𝕥𝕙𝕖𝕟 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕟𝕠𝕥 𝕠𝕟𝕝𝕪 𝕘𝕖𝕥𝕤 𝕥𝕙𝕖 𝕒𝕟𝕤𝕨𝕖𝕣𝕤, 𝕓𝕦𝕥 𝕚𝕥 𝕦𝕟𝕕𝕖𝕣𝕤𝕥𝕠𝕠𝕕 𝕥𝕙𝕒𝕥 𝕥𝕙𝕚𝕤 𝕚𝕤 𝕥𝕙𝕖 𝕔𝕠𝕣𝕣𝕖𝕔𝕥 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘.

𝔸𝕟𝕠𝕥𝕙𝕖𝕣 𝕖𝕩𝕒𝕞𝕡𝕝𝕖 𝕨𝕠𝕦𝕝𝕕 𝕓𝕖 𝕒𝕤𝕜𝕚𝕟𝕘 𝕔𝕠𝕕𝕖 𝕥𝕠 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖 𝕒 𝕔𝕖𝕣𝕥𝕒𝕚𝕟 𝕥𝕪𝕡𝕖 𝕠𝕗 𝕩𝕞𝕝 𝕤𝕥𝕣𝕦𝕔𝕥𝕦𝕣𝕖 𝕒𝕟𝕕 𝕥𝕙𝕖𝕟 𝕦𝕤𝕚𝕟𝕘 𝕣𝕖𝕘𝕦𝕝𝕒𝕣 𝕖𝕩𝕡𝕣𝕖𝕤𝕤𝕚𝕠𝕟𝕤 𝕠𝕗 𝕒𝕝𝕝 𝕝𝕚𝕗𝕖. 𝕀𝕥 𝕔𝕙𝕖𝕔𝕜𝕖𝕕 𝕚𝕗 𝕥𝕙𝕖 𝕣𝕖𝕤𝕦𝕝𝕥𝕚𝕟𝕘 𝕤𝕥𝕣𝕦𝕔𝕥𝕦𝕣𝕖 𝕚𝕤 𝕥𝕙𝕖 𝕔𝕠𝕣𝕣𝕖𝕔𝕥 𝕤𝕥𝕣𝕦𝕔𝕥𝕦𝕣𝕖 𝕒𝕟𝕕 𝕥𝕠𝕠𝕜 𝕥𝕙𝕖 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕥𝕙𝕒𝕥 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖𝕕 𝕥𝕙𝕒𝕥 𝕤𝕥𝕣𝕦𝕔𝕥𝕦𝕣𝕖 𝕒𝕟𝕕 𝕥𝕦𝕣𝕟𝕖𝕕 𝕚𝕥 𝕚𝕟𝕥𝕠 𝕒 𝕣𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥. 𝔸𝕗𝕥𝕖𝕣 𝕥𝕙𝕒𝕥 𝕡𝕣𝕠𝕔𝕖𝕤𝕤, 𝕚𝕥 𝕕𝕚𝕕 𝕚𝕥 𝕞𝕚𝕝𝕝𝕚𝕠𝕟𝕤 𝕠𝕗 𝕥𝕚𝕞𝕖𝕤. 𝕀𝕥 𝕥𝕠𝕠𝕜 𝕠𝕟𝕝𝕪 𝕥𝕙𝕖 𝕧𝕒𝕝𝕚𝕕 𝕒𝕟𝕤𝕨𝕖𝕣𝕤 𝕒𝕟𝕕 𝕣𝕒𝕟 𝕚𝕥 𝕥𝕙𝕣𝕠𝕦𝕘𝕙 𝕥𝕙𝕖 𝕣𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕡𝕣𝕠𝕔𝕖𝕤𝕤, 𝕒𝕟𝕕 𝕥𝕙𝕒𝕥 𝕨𝕒𝕤 𝕚𝕥. ℍ𝕖𝕣𝕖 𝕞𝕠𝕤𝕥 𝕡𝕣𝕠𝕓𝕒𝕓𝕝𝕪 𝕥𝕙𝕖 ℂ𝕙𝕚𝕟𝕖𝕤𝕖 𝕒𝕝𝕤𝕠 𝕥𝕠𝕠𝕜 𝕒𝕕𝕧𝕒𝕟𝕥𝕒𝕘𝕖 𝕠𝕗 𝕤𝕪𝕟𝕥𝕙𝕖𝕥𝕚𝕔 𝕕𝕒𝕥𝕒 𝕔𝕣𝕖𝕒𝕥𝕖𝕕 𝕨𝕚𝕥𝕙 ℂ𝕙𝕒𝕥𝔾ℙ𝕋 𝟜 (𝕒𝕝𝕨𝕒𝕪𝕤 𝕔𝕠𝕡𝕪𝕚𝕟𝕘), 𝕦𝕤𝕚𝕟𝕘 𝕥𝕙𝕖 𝔸ℙ𝕀 𝕠𝕣 𝕖𝕧𝕖𝕟 𝕦𝕤𝕚𝕟𝕘 𝕥𝕙𝕖 𝕔𝕙𝕒𝕥 𝕞𝕖𝕔𝕙𝕒𝕟𝕚𝕤𝕞, 𝕒𝕟𝕕 𝕥𝕙𝕖𝕪 𝕞𝕒𝕪 𝕙𝕒𝕧𝕖 𝕦𝕤𝕖𝕕 𝕃𝕝𝕒𝕞𝕒 𝕨𝕚𝕥𝕙𝕠𝕦𝕥 𝕥𝕙𝕖 𝕤𝕝𝕚𝕘𝕙𝕥𝕖𝕤𝕥 𝕕𝕠𝕦𝕓𝕥; 𝕥𝕙𝕖𝕪 𝕒𝕝𝕞𝕠𝕤𝕥 𝕔𝕖𝕣𝕥𝕒𝕚𝕟𝕝𝕪 𝕦𝕤𝕖𝕕 𝕃𝕝𝕒𝕞𝕒, 𝕥𝕙𝕖𝕪 𝕒𝕝𝕞𝕠𝕤𝕥 𝕔𝕖𝕣𝕥𝕒𝕚𝕟𝕝𝕪 𝕦𝕤𝕖𝕕 𝕔𝕝𝕠𝕥𝕙. ℕ𝕠 𝕞𝕒𝕥𝕥𝕖𝕣, 𝕨𝕙𝕒𝕥 𝕞𝕒𝕥𝕥𝕖𝕣𝕤 𝕚𝕤 𝕥𝕙𝕒𝕥 𝕙𝕦𝕞𝕒𝕟𝕤 𝕒𝕣𝕖 𝕟𝕠 𝕝𝕠𝕟𝕘𝕖𝕣 𝕟𝕖𝕖𝕕𝕖𝕕 𝕗𝕠𝕣 𝕗𝕖𝕖𝕕𝕓𝕒𝕔𝕜 𝕒𝕟𝕕 𝕓𝕒𝕤𝕖 𝕥𝕣𝕒𝕚𝕟𝕚𝕟𝕘 𝕚𝕤 𝕟𝕠 𝕝𝕠𝕟𝕘𝕖𝕣 𝕥𝕙𝕖 𝕚𝕞𝕡𝕠𝕣𝕥𝕒𝕟𝕥 𝕥𝕙𝕚𝕟𝕘. ℝ𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘 𝕚𝕤 𝕠𝕧𝕖𝕣 𝕒𝕤 𝕠𝕟𝕖 𝕠𝕗 𝕥𝕙𝕖 𝕞𝕖𝕔𝕙𝕒𝕟𝕚𝕤𝕞𝕤 𝕠𝕗 𝕔𝕠𝕞𝕡𝕖𝕥𝕚𝕥𝕚𝕠𝕟.

𝕋𝕙𝕖 𝕠𝕥𝕙𝕖𝕣 𝕓𝕚𝕘 𝕚𝕟𝕟𝕠𝕧𝕒𝕥𝕚𝕠𝕟 𝕚𝕤 𝕥𝕙𝕖 𝕤𝕒𝕞𝕖 𝕥𝕙𝕚𝕟𝕘 𝕥𝕙𝕒𝕥 𝕞𝕒𝕜𝕖𝕤 𝕠𝕟𝕖 𝕥𝕙𝕚𝕟𝕜 𝕨𝕙𝕖𝕟 𝕪𝕠𝕦 𝕦𝕤𝕖 ℝ𝟙 𝕕𝕖𝕖𝕡𝕤. 𝔽𝕚𝕣𝕤𝕥 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕤𝕥𝕒𝕣𝕥𝕤 𝕥𝕠 ‘𝕥𝕙𝕚𝕟𝕜’ 𝕒𝕟𝕕 𝕤𝕙𝕠𝕨𝕤 𝕪𝕠𝕦 𝕥𝕙𝕖 𝕥𝕙𝕠𝕦𝕘𝕙𝕥 𝕚𝕟 𝕡𝕦𝕓𝕝𝕚𝕔 𝕠𝕣 𝕪𝕠𝕦 𝕤𝕙𝕠𝕨 𝕒 𝕤𝕦𝕞𝕞𝕒𝕣𝕚𝕤𝕖𝕕 𝕧𝕖𝕣𝕤𝕚𝕠𝕟 𝕠𝕗 𝕥𝕙𝕖 𝕥𝕙𝕠𝕦𝕘𝕙𝕥. 𝔻𝕖𝕖𝕡𝕤 ℝ𝟙 𝕤𝕙𝕠𝕨𝕤 𝕪𝕠𝕦 𝕪𝕠𝕦𝕣 𝕨𝕙𝕠𝕝𝕖 𝕥𝕙𝕚𝕟𝕜𝕚𝕟𝕘 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕔𝕠𝕞𝕡𝕝𝕖𝕥𝕖𝕝𝕪 𝕠𝕡𝕖𝕟. 𝕀𝕟 𝕗𝕒𝕔𝕥, 𝕚𝕥 𝕚𝕤 𝕤𝕠 𝕟𝕚𝕔𝕖 𝕥𝕙𝕒𝕥 𝕞𝕒𝕟𝕪 𝕡𝕖𝕠𝕡𝕝𝕖 𝕒𝕣𝕖 𝕤𝕒𝕪𝕚𝕟𝕘, 𝕀 𝕝𝕠𝕧𝕖 𝕚𝕥 𝕨𝕙𝕖𝕟 𝕀𝕀 𝕥𝕖𝕝𝕝𝕤 𝕞𝕖 𝕙𝕠𝕨 𝕚𝕥 𝕚𝕤 𝕥𝕙𝕚𝕟𝕜𝕚𝕟𝕘, 𝕒𝕟𝕕 𝕀 𝕔𝕒𝕟 𝕙𝕚𝕕𝕖 𝕚𝕥 𝕠𝕣 𝕕𝕚𝕤𝕡𝕝𝕒𝕪 𝕚𝕥. 𝕀 𝕥𝕙𝕚𝕟𝕜 𝕥𝕙𝕖𝕪 𝕔𝕣𝕖𝕒𝕥𝕖𝕕 𝕒 𝕟𝕖𝕨 𝔾𝕌𝕀 𝕡𝕒𝕣𝕒𝕕𝕚𝕘𝕞 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕠𝕗 𝕥𝕙𝕚𝕤, 𝕒𝕟𝕕 𝕚𝕥 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖𝕤 𝕥𝕨𝕠 𝕣𝕖𝕤𝕡𝕠𝕟𝕤𝕖𝕤 𝕚𝕟 𝕒𝕟 𝕩𝕞𝕝 𝕗𝕠𝕣𝕞𝕒𝕥. 𝕀𝕟 𝕥𝕙𝕚𝕟𝕜 𝕥𝕒𝕘𝕤 𝕚𝕤 𝕥𝕙𝕖 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕡𝕣𝕠𝕔𝕖𝕤𝕤 𝕒𝕟𝕕 𝕚𝕟 𝕒𝕟𝕤𝕨𝕖𝕣 𝕥𝕒𝕘𝕤 𝕚𝕤 𝕥𝕙𝕖 𝕗𝕚𝕟𝕒𝕝 𝕒𝕟𝕤𝕨𝕖𝕣, 𝕒𝕟𝕕 𝕥𝕙𝕒𝕥 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖𝕤 𝕣𝕖𝕒𝕤𝕠𝕟𝕚𝕟𝕘 𝕖𝕢𝕦𝕚𝕧𝕒𝕝𝕖𝕟𝕥 𝕥𝕠 𝕠𝕟𝕖 𝟝𝟘 𝕥𝕚𝕞𝕖𝕤 𝕔𝕙𝕖𝕒𝕡𝕖𝕣. 𝕋𝕙𝕖𝕤𝕖 𝕒𝕣𝕖 𝕒𝕝𝕝 𝕚𝕞𝕡𝕣𝕠𝕧𝕖𝕞𝕖𝕟𝕥𝕤 𝕓𝕒𝕤𝕖𝕕 𝕠𝕟 𝕝𝕚𝕗𝕖𝕝𝕠𝕟𝕘 𝕤𝕠𝕗𝕥𝕨𝕒𝕣𝕖 𝕖𝕟𝕘𝕚𝕟𝕖𝕖𝕣𝕚𝕟𝕘 𝕒𝕟𝕕 𝕔𝕠𝕞𝕡𝕦𝕥𝕖𝕣 𝕤𝕔𝕚𝕖𝕟𝕔𝕖. 𝕋𝕙𝕖𝕤𝕖 𝕒𝕣𝕖 𝕟𝕠𝕥 𝕞𝕒𝕥𝕙𝕖𝕞𝕒𝕥𝕚𝕔𝕒𝕝 𝕘𝕖𝕟𝕚𝕦𝕤𝕖𝕤 𝕠𝕗 𝕥𝕙𝕖 𝕗𝕦𝕥𝕦𝕣𝕖 𝕠𝕣 𝕥𝕚𝕥𝕒𝕟𝕚𝕔 𝕗𝕠𝕦𝕟𝕕𝕖𝕣𝕤 𝕨𝕙𝕠 𝕒𝕣𝕖 𝕒𝕓𝕝𝕖 𝕥𝕠 𝕣𝕒𝕚𝕤𝕖 𝕓𝕚𝕝𝕝𝕚𝕠𝕟𝕤 𝕠𝕗 𝕕𝕠𝕝𝕝𝕒𝕣𝕤 𝕗𝕣𝕠𝕞 𝕤𝕠𝕧𝕖𝕣𝕖𝕚𝕘𝕟 𝕨𝕖𝕒𝕝𝕥𝕙 𝕗𝕦𝕟𝕕𝕤 𝕒𝕟𝕕 𝕟𝕠𝕨 𝕥𝕙𝕖 𝕨𝕠𝕣𝕝𝕕 𝕜𝕟𝕠𝕨𝕤 𝕚𝕥.

𝔸𝕟𝕕 𝕪𝕖𝕤, 𝕒𝕤 𝕚𝕥 𝕚𝕤 𝕒 ℂ𝕙𝕚𝕟𝕖𝕤𝕖 𝕞𝕠𝕕𝕖𝕝 𝕚𝕥 𝕙𝕒𝕤 𝕔𝕖𝕟𝕤𝕠𝕣𝕤𝕙𝕚𝕡 𝕒𝕡𝕡𝕝𝕚𝕖𝕕 𝕚𝕟 𝕚𝕥𝕤 𝕣𝕖𝕚𝕟𝕗𝕠𝕣𝕔𝕖𝕞𝕖𝕟𝕥 𝕝𝕖𝕒𝕣𝕟𝕚𝕟𝕘, 𝕓𝕦𝕥 𝕚𝕟 𝕥𝕙𝕖 𝕞𝕠𝕕𝕖𝕝 𝕥𝕙𝕒𝕥 𝕀 𝕨𝕒𝕤 𝕥𝕖𝕤𝕥𝕚𝕟𝕘 𝕝𝕠𝕔𝕒𝕝𝕝𝕪 𝕒𝕟𝕕 𝕥𝕙𝕒𝕥 𝕀 𝕨𝕒𝕤 𝕥𝕖𝕤𝕥𝕚𝕟𝕘 𝕠𝕟 𝕤𝕠𝕞𝕖 𝕗𝕣𝕚𝕖𝕟𝕕𝕤' 𝕤𝕖𝕣𝕧𝕖𝕣𝕤 𝕥𝕙𝕖𝕣𝕖 𝕚𝕤 𝕟𝕠 𝕔𝕖𝕟𝕤𝕠𝕣𝕤𝕙𝕚𝕡. 𝕋𝕙𝕖 𝕧𝕖𝕣𝕤𝕚𝕠𝕟 𝕥𝕙𝕒𝕥 𝕠𝕟𝕖 𝕔𝕒𝕟 𝕚𝕟𝕤𝕥𝕒𝕝𝕝 𝕚𝕤 𝕔𝕠𝕞𝕡𝕝𝕖𝕥𝕖𝕝𝕪 𝕠𝕡𝕖𝕟. 𝕀 𝕔𝕠𝕟𝕥𝕣𝕒𝕤𝕥 𝕒𝕝𝕝 𝕥𝕙𝕚𝕤 𝕨𝕚𝕥𝕙 𝕥𝕙𝕖 𝕗𝕒𝕔𝕥 𝕥𝕙𝕒𝕥 𝕊𝕒𝕞 𝔸𝕝𝕥𝕞𝕒𝕟 𝕣𝕖𝕔𝕖𝕟𝕥𝕝𝕪 𝕒𝕟𝕟𝕠𝕦𝕟𝕔𝕖𝕕, 𝕥𝕠𝕘𝕖𝕥𝕙𝕖𝕣 𝕨𝕚𝕥𝕙 𝕃𝕒𝕣𝕣𝕪 𝔼𝕝𝕝𝕚𝕤𝕠𝕟, 𝕥𝕙𝕖 𝕗𝕠𝕦𝕟𝕕𝕖𝕣 𝕠𝕗 𝕆𝕣𝕒𝕔𝕝𝕖 𝕒𝕟𝕕 𝕄𝕒𝕤𝕒𝕪𝕠𝕤𝕙𝕚 𝕊𝕠𝕟, 𝕥𝕙𝕖 𝕗𝕠𝕦𝕟𝕕𝕖𝕣 𝕠𝕗 𝕊𝕠𝕗𝕥𝕓𝕒𝕟𝕜, 𝕒𝕝𝕠𝕟𝕘𝕤𝕚𝕕𝕖 𝕋𝕣𝕦𝕞𝕡, 𝕥𝕙𝕖 𝕚𝕟𝕧𝕖𝕤𝕥𝕞𝕖𝕟𝕥 𝕠𝕗 𝕙𝕒𝕝𝕗 𝕒 𝕥𝕣𝕚𝕝𝕝𝕚𝕠𝕟 𝕕𝕠𝕝𝕝𝕒𝕣𝕤, 𝟝𝟘𝟘 𝕓𝕚𝕝𝕝𝕚𝕠𝕟 𝕚𝕟 𝕘𝕣𝕚𝕟𝕘𝕠 𝕟𝕦𝕞𝕓𝕖𝕣𝕤, 𝕨𝕙𝕚𝕔𝕙 𝕚𝕤 𝕒𝕟 𝕦𝕟𝕚𝕞𝕒𝕘𝕚𝕟𝕒𝕓𝕝𝕖 𝕟𝕦𝕞𝕓𝕖𝕣 𝕥𝕠 𝕚𝕟𝕧𝕖𝕤𝕥 𝕚𝕟 𝕤𝕖𝕣𝕧𝕖𝕣𝕤. 𝕋𝕙𝕚𝕟𝕜 𝕄𝕚𝕔𝕣𝕠𝕤𝕠𝕗𝕥 𝕙𝕒𝕤 𝕙𝕒𝕝𝕗 𝕒 𝕞𝕚𝕝𝕝𝕚𝕠𝕟 𝕠𝕗 𝕥𝕙𝕖𝕤𝕖 𝕔𝕙𝕚𝕡𝕤, 𝕨𝕙𝕚𝕔𝕙 𝕄𝕖𝕥𝕒 𝕚𝕤 𝕥𝕙𝕖 𝕠𝕥𝕙𝕖𝕣 𝕓𝕚𝕘 𝕘𝕦𝕪, 𝕀 𝕥𝕙𝕚𝕟𝕜 𝕥𝕙𝕖𝕪 𝕙𝕒𝕧𝕖 𝕝𝕚𝕜𝕖 𝟚𝟘𝟘,𝟘𝟘𝟘 𝕔𝕙𝕚𝕡𝕤 𝕠𝕗 𝕥𝕙𝕖 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕕 𝕔𝕙𝕚𝕡𝕤 𝕒𝕟𝕕 𝕥𝕙𝕖𝕤𝕖 𝕡𝕖𝕠𝕡𝕝𝕖 𝕞𝕒𝕕𝕖 𝕚𝕥 𝕨𝕚𝕥𝕙 𝕔𝕙𝕖𝕒𝕡 𝕔𝕙𝕚𝕡𝕤. 𝔸𝕝𝕝 𝕥𝕙𝕚𝕤 𝕦𝕤𝕚𝕟𝕘 𝕥𝕙𝕖 𝕡𝕣𝕖𝕞𝕚𝕤𝕖 𝕥𝕙𝕒𝕥 𝕒𝕣𝕥𝕚𝕗𝕚𝕔𝕚𝕒𝕝 𝕚𝕟𝕥𝕖𝕝𝕝𝕚𝕘𝕖𝕟𝕔𝕖 𝕙𝕒𝕤 𝕠𝕟𝕝𝕪 𝕠𝕟𝕖 𝕨𝕒𝕪 𝕥𝕠 𝕘𝕣𝕠𝕨 𝕒𝕟𝕕 𝕥𝕙𝕒𝕥 𝕚𝕤 𝕥𝕠 𝕥𝕙𝕣𝕠𝕨 𝕔𝕙𝕚𝕡𝕤 𝕒𝕥 𝕥𝕙𝕖 𝕡𝕣𝕠𝕓𝕝𝕖𝕞, 𝕤𝕚𝕝𝕧𝕖𝕣 𝕒𝕟𝕕 𝕔𝕙𝕚𝕡𝕤, 𝕤𝕚𝕝𝕧𝕖𝕣 𝕒𝕟𝕕 𝕔𝕙𝕚𝕡𝕤, 𝕕𝕒𝕥𝕒𝕔𝕖𝕟𝕥𝕖𝕣𝕤 𝕒𝕤 𝕗𝕒𝕣 𝕒𝕤 𝕥𝕙𝕖 𝔸𝕣𝕚𝕫𝕠𝕟𝕒 𝕕𝕖𝕤𝕖𝕣𝕥.

𝕎𝕙𝕒𝕥 𝕒 𝕔𝕙𝕚𝕡 𝕚𝕤 𝕓𝕒𝕤𝕚𝕔𝕒𝕝𝕝𝕪 𝕞𝕖𝕞𝕠𝕣𝕪 𝕒𝕟𝕕 𝕡𝕣𝕠𝕔𝕖𝕤𝕤𝕚𝕟𝕘, 𝕞𝕖𝕞𝕠𝕣𝕪 𝕚𝕤 𝕥𝕙𝕖 𝕤𝕚𝕫𝕖 𝕠𝕗 𝕥𝕙𝕖 𝕔𝕠𝕟𝕥𝕖𝕩𝕥 𝕨𝕚𝕟𝕕𝕠𝕨 𝕒𝕟𝕕 𝕡𝕣𝕠𝕔𝕖𝕤𝕤𝕚𝕟𝕘 𝕚𝕤 𝕥𝕙𝕖 𝕤𝕡𝕖𝕖𝕕 𝕒𝕥 𝕨𝕙𝕚𝕔𝕙 𝕥𝕠𝕜𝕖𝕟𝕤 𝕒𝕣𝕖 𝕠𝕦𝕥𝕡𝕦𝕥. ℝ𝟙 𝕔𝕙𝕒𝕟𝕘𝕖𝕕 𝕥𝕙𝕖 𝕘𝕒𝕞𝕖 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕨𝕚𝕥𝕙 𝕒 𝕗𝕣𝕒𝕔𝕥𝕚𝕠𝕟 𝕠𝕗 𝕞𝕖𝕞𝕠𝕣𝕪 𝕒𝕟𝕕 𝕞𝕦𝕔𝕙 𝕝𝕖𝕤𝕤 ℂℙ𝕌 𝕠𝕣 𝔾ℙ𝕌 𝕚𝕥 𝕚𝕤 𝕒𝕔𝕙𝕚𝕖𝕧𝕚𝕟𝕘 𝕤𝕚𝕞𝕚𝕝𝕒𝕣 𝕣𝕖𝕤𝕦𝕝𝕥𝕤. 𝕎𝕙𝕒𝕥 𝕚𝕤 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕙𝕒𝕡𝕡𝕖𝕟 𝕙𝕖𝕣𝕖 𝕚𝕤 𝕥𝕙𝕒𝕥 𝕒𝕝𝕝 𝕥𝕙𝕖 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕒𝕣𝕖 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕤𝕥𝕒𝕣𝕥 𝕚𝕞𝕡𝕝𝕖𝕞𝕖𝕟𝕥𝕚𝕟𝕘 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕥𝕙𝕖𝕪 𝕙𝕒𝕧𝕖 𝕒𝕝𝕣𝕖𝕒𝕕𝕪 𝕤𝕥𝕒𝕣𝕥𝕖𝕕 𝕥𝕠 𝕚𝕞𝕡𝕝𝕖𝕞𝕖𝕟𝕥 𝕥𝕙𝕖 ℝ𝟙 𝕚𝕞𝕡𝕣𝕠𝕧𝕖𝕞𝕖𝕟𝕥𝕤. 𝕎𝕖'𝕣𝕖 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕤𝕖𝕖 𝕒 𝕃𝕒𝕞𝕒 𝕞𝕠𝕕𝕖𝕝 𝕚𝕞𝕡𝕝𝕖𝕞𝕖𝕟𝕥𝕚𝕟𝕘 ℝ𝟙, 𝕨𝕖'𝕣𝕖 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕤𝕖𝕖 𝕒𝕟 𝕆𝕡𝕖𝕟𝔸𝕀 𝕞𝕠𝕕𝕖𝕝 𝕚𝕞𝕡𝕝𝕖𝕞𝕖𝕟𝕥𝕚𝕟𝕘 𝔸𝕟𝕥𝕙𝕣𝕠𝕡𝕚𝕔'𝕤 ℝ𝟙 𝕨𝕚𝕥𝕙 ℂ𝕝𝕠𝕥. 𝔼𝕧𝕖𝕣𝕪𝕓𝕠𝕕𝕪. 𝔹𝕦𝕥 𝕥𝕙𝕒𝕥'𝕤 𝕟𝕠𝕥 𝕨𝕙𝕒𝕥 𝕞𝕒𝕥𝕥𝕖𝕣𝕤, 𝕨𝕙𝕒𝕥 𝕞𝕒𝕥𝕥𝕖𝕣𝕤 𝕚𝕤 𝕙𝕠𝕨 𝕚𝕥 𝕔𝕙𝕒𝕟𝕘𝕖𝕕 𝕥𝕙𝕖 𝕘𝕒𝕞𝕖, 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕤𝕠 𝕞𝕒𝕟𝕪 ℂ𝕝𝕠𝕦𝕕 𝕡𝕣𝕠𝕧𝕚𝕕𝕖𝕣𝕤 𝕚𝕟𝕧𝕖𝕤𝕥𝕖𝕕 𝕚𝕟 𝕥𝕙𝕖𝕤𝕖 𝕔𝕙𝕚𝕡𝕤, 𝕚𝕥'𝕤 𝕟𝕠𝕥 𝕝𝕚𝕜𝕖 𝕥𝕙𝕖𝕪'𝕣𝕖 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕝𝕠𝕤𝕖 𝕥𝕙𝕖 𝕞𝕠𝕟𝕖𝕪. 𝕋𝕙𝕖𝕟 𝕥𝕙𝕖𝕪'𝕣𝕖 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕤𝕥𝕒𝕣𝕥 𝕤𝕖𝕝𝕝𝕚𝕟𝕘 𝕔𝕙𝕖𝕒𝕡𝕖𝕣 ℂ𝕙𝕚𝕡 𝕥𝕚𝕞𝕖 𝕓𝕖𝕔𝕒𝕦𝕤𝕖 𝕟𝕠𝕨 𝕚𝕥 𝕥𝕒𝕜𝕖𝕤 𝕝𝕖𝕤𝕤. ℂ𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤 𝕥𝕙𝕒𝕥 𝕨𝕒𝕟𝕥 𝕥𝕠 𝕦𝕤𝕖 𝔸𝕀 𝕥𝕠 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕖 𝕤𝕠𝕝𝕦𝕥𝕚𝕠𝕟𝕤 𝕗𝕠𝕣 𝕠𝕥𝕙𝕖𝕣 𝕔𝕠𝕞𝕡𝕒𝕟𝕚𝕖𝕤, 𝕒𝕤 𝕡𝕒𝕣𝕥 𝕠𝕗 𝕥𝕙𝕖𝕚𝕣 𝕡𝕣𝕠𝕕𝕦𝕔𝕥𝕤, 𝕚𝕥'𝕤 𝕘𝕠𝕚𝕟𝕘 𝕥𝕠 𝕓𝕖 𝕞𝕦𝕔𝕙 𝕔𝕙𝕖𝕒𝕡𝕖𝕣 𝕗𝕠𝕣 𝕥𝕙𝕖𝕞 𝕒𝕟𝕕 𝕥𝕙𝕖 𝕞𝕠𝕤𝕥 𝕚𝕟𝕔𝕣𝕖𝕕𝕚𝕓𝕝𝕖 𝕥𝕙𝕚𝕟𝕘 𝕚𝕤 𝕥𝕙𝕒𝕥 𝕚𝕥 𝕔𝕙𝕒𝕟𝕘𝕖𝕤 𝕥𝕙𝕖 𝕔𝕦𝕝𝕥𝕦𝕣𝕖, 𝕚𝕥 𝕔𝕙𝕒𝕟𝕘𝕖𝕤 𝕥𝕙𝕖 𝕡𝕙𝕚𝕝𝕠𝕤𝕠𝕡𝕙𝕪.

𝕋𝕠 𝕦𝕟𝕕𝕖𝕣𝕤𝕥𝕒𝕟𝕕 𝕥𝕙𝕚𝕤 𝕨𝕠𝕣𝕝𝕕, 𝕥𝕙𝕚𝕤 𝕒𝕡𝕡𝕣𝕠𝕒𝕔𝕙, 𝕨𝕙𝕒𝕥 𝕕𝕠 𝕪𝕠𝕦 𝕟𝕖𝕖𝕕 𝕥𝕠 𝕜𝕟𝕠𝕨: 𝕨𝕙𝕒𝕥 𝕚𝕤 𝕒 𝕥𝕠𝕜𝕖𝕟, 𝕨𝕙𝕒𝕥 𝕚𝕤 𝕒 𝕟𝕖𝕦𝕣𝕒𝕝 𝕟𝕖𝕥𝕨𝕠𝕣𝕜, 𝕨𝕙𝕒𝕥 𝕕𝕠𝕖𝕤 𝕓𝕚𝕥 𝕤𝕙𝕚𝕗𝕥 𝕞𝕖𝕒𝕟, 𝕨𝕙𝕖𝕟 𝕥𝕠 𝕦𝕤𝕖 𝕒 𝕓𝕚𝕟𝕒𝕣𝕪 𝕥𝕣𝕖𝕖, 𝕙𝕠𝕨 𝕕𝕠𝕖𝕤 𝕒 𝕥𝕣𝕒𝕟𝕤𝕗𝕠𝕣𝕞𝕖𝕣 𝕨𝕠𝕣𝕜? 𝕎𝕙𝕒𝕥 𝕚𝕤 𝕥𝕙𝕖 𝕒𝕥𝕥𝕖𝕟𝕥𝕚𝕠𝕟 𝕞𝕠𝕕𝕖𝕝, 𝕨𝕙𝕒𝕥 𝕚𝕤 𝕒 𝔾ℙ𝕋 𝕘𝕖𝕟𝕖𝕣𝕒𝕥𝕚𝕧𝕖 𝕡𝕣𝕖𝕥𝕣𝕒𝕚𝕟𝕖𝕕 𝕥𝕣𝕒𝕟𝕤𝕗𝕠𝕣𝕞𝕖𝕣, 𝕙𝕠𝕨 𝕥𝕠 𝕥𝕣𝕒𝕚𝕟 𝕒 𝕝𝕒𝕣𝕘𝕖 𝕝𝕒𝕟𝕘𝕦𝕒𝕘𝕖 𝕞𝕠𝕕𝕖𝕝, 𝕨𝕙𝕒𝕥 𝕚𝕤 𝕥𝕙𝕖 𝕕𝕚𝕗𝕗𝕖𝕣𝕖𝕟𝕔𝕖 𝕓𝕖𝕥𝕨𝕖𝕖𝕟 ℝ𝔸𝕄 𝕒𝕟𝕕 𝕍ℝ𝔸𝕄. 𝕎𝕙𝕒𝕥 𝕚𝕤 𝕥𝕙𝕖 𝕕𝕚𝕗𝕗𝕖𝕣𝕖𝕟𝕔𝕖 𝕓𝕖𝕥𝕨𝕖𝕖𝕟 ℂℙ𝕌, 𝔾ℙ𝕌 𝕒𝕟𝕕 𝕋ℙ𝕌, 𝕙𝕠𝕨 𝕥𝕠 𝕚𝕟𝕤𝕥𝕒𝕝𝕝 𝕃𝕝𝕒𝕞𝕒 𝕒𝕟𝕕 𝔻𝕖𝕖𝕡𝕊𝕖𝕖𝕜 𝕝𝕠𝕔𝕒𝕝𝕝𝕪, 𝕨𝕙𝕒𝕥 𝕚𝕤 𝕥𝕙𝕖 𝕤𝕥𝕒𝕥𝕖 𝕠𝕗 𝕥𝕙𝕖 𝕒𝕣𝕥 𝕠𝕗 𝕔𝕠𝕟𝕥𝕖𝕩𝕥 𝕨𝕚𝕟𝕕𝕠𝕨𝕤, 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕕 𝕡𝕣𝕠𝕞𝕡𝕥𝕚𝕟𝕘 𝕥𝕖𝕔𝕙𝕟𝕚𝕢𝕦𝕖𝕤. 𝔸𝕝𝕝 𝕥𝕙𝕖 𝕡𝕖𝕠𝕡𝕝𝕖 𝕨𝕙𝕠 𝕞𝕒𝕕𝕖 𝕗𝕦𝕟 𝕠𝕗 𝕡𝕣𝕠𝕞𝕡𝕥𝕤 𝕖𝕟𝕘𝕚𝕟𝕖𝕖𝕣𝕚𝕟𝕘 𝕨𝕖𝕣𝕖 𝕨𝕣𝕠𝕟𝕘 𝕒𝕟𝕕 𝕙𝕒𝕕 𝕟𝕠 𝕚𝕕𝕖𝕒, 𝕟𝕠 𝕔𝕝𝕦𝕖, 𝕟𝕠 𝕚𝕕𝕖𝕒, 𝕟𝕠 𝕚𝕕𝕖𝕒 𝕨𝕙𝕒𝕥 𝕥𝕙𝕖𝕪 𝕨𝕖𝕣𝕖 𝕥𝕒𝕝𝕜𝕚𝕟𝕘 𝕒𝕓𝕠𝕦𝕥. 𝕋𝕙𝕖𝕪 𝕒𝕣𝕖 𝕠𝕓𝕧𝕚𝕠𝕦𝕤𝕝𝕪 𝕞𝕒𝕜𝕚𝕟𝕘 𝕐𝕠𝕦𝕋𝕦𝕓𝕖 𝕧𝕚𝕕𝕖𝕠𝕤 𝕚𝕟𝕤𝕥𝕖𝕒𝕕 𝕠𝕗 𝕨𝕠𝕣𝕜𝕚𝕟𝕘. 𝕀 𝕜𝕟𝕠𝕨 𝕥𝕙𝕖 𝕚𝕣𝕠𝕟𝕪 𝕠𝕗 𝕨𝕙𝕒𝕥 𝕀'𝕞 𝕥𝕒𝕝𝕜𝕚𝕟𝕘 𝕒𝕓𝕠𝕦𝕥. 𝔹𝕦𝕥 𝕥𝕙𝕚𝕤 𝕚𝕟𝕔𝕝𝕦𝕕𝕖𝕤 𝕗𝕚𝕟𝕖-𝕥𝕦𝕟𝕚𝕟𝕘, 𝕠𝕟𝕖 𝕤𝕙𝕠𝕥, 𝕫𝕖𝕣𝕠 𝕤𝕙𝕠𝕥, 𝕗𝕠𝕣𝕔𝕖 𝕤𝕥𝕣𝕦𝕔𝕥𝕦𝕣𝕖 𝕠𝕦𝕥𝕡𝕦𝕥 𝕒𝕞𝕠𝕟𝕘 𝕠𝕥𝕙𝕖𝕣 𝕥𝕖𝕔𝕙𝕟𝕚𝕢𝕦𝕖𝕤, 𝕦𝕟𝕕𝕖𝕣𝕤𝕥𝕒𝕟𝕕𝕚𝕟𝕘 𝕨𝕙𝕒𝕥 𝕞𝕠𝕕𝕖𝕝 𝕕𝕚𝕤𝕥𝕚𝕝𝕝𝕒𝕥𝕚𝕠𝕟 𝕚𝕤 𝕒𝕟𝕕 𝕙𝕠𝕨 𝕥𝕠 𝕦𝕟𝕕𝕖𝕣𝕤𝕥𝕒𝕟𝕕 𝕒 𝕕𝕚𝕤𝕥𝕚𝕝𝕝𝕖𝕕 𝕞𝕠𝕕𝕖𝕝 𝕒𝕟𝕕 𝕣𝕦𝕟 𝕚𝕥, 𝕨𝕙𝕒𝕥 𝕒𝕣𝕖 𝕥𝕙𝕖 𝕓𝕖𝕟𝕔𝕙𝕞𝕒𝕣𝕜𝕤 𝕠𝕗 𝕝𝕝𝕞𝕤 𝕒𝕟𝕕 𝕣𝕖𝕘𝕦𝕝𝕒𝕣 𝕖𝕩𝕡𝕣𝕖𝕤𝕤𝕚𝕠𝕟𝕤 𝕠𝕗 𝕒𝕝𝕝 𝕝𝕚𝕗𝕖. 𝕋𝕙𝕖 𝕟𝕖𝕨 𝕤𝕠𝕗𝕥𝕨𝕒𝕣𝕖 𝕖𝕟𝕘𝕚𝕟𝕖𝕖𝕣𝕚𝕟𝕘 𝕗𝕦𝕟𝕕𝕒𝕞𝕖𝕟𝕥𝕒𝕝𝕤 𝕔𝕠𝕦𝕣𝕤𝕖.

𝕋𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕚𝕔𝕒𝕝 𝕕𝕖𝕧𝕖𝕝𝕠𝕡𝕞𝕖𝕟𝕥 𝕙𝕒𝕤 𝕓𝕖𝕔𝕠𝕞𝕖 𝕒 𝕓𝕒𝕥𝕥𝕝𝕖𝕘𝕣𝕠𝕦𝕟𝕕 𝕗𝕠𝕣 𝕘𝕖𝕠𝕡𝕠𝕝𝕚𝕥𝕚𝕔𝕒𝕝 𝕣𝕚𝕧𝕒𝕝𝕣𝕪, 𝕖𝕤𝕡𝕖𝕔𝕚𝕒𝕝𝕝𝕪 𝕓𝕖𝕥𝕨𝕖𝕖𝕟 𝕥𝕙𝕖 𝕌𝕟𝕚𝕥𝕖𝕕 𝕊𝕥𝕒𝕥𝕖𝕤 𝕒𝕟𝕕 ℂ𝕙𝕚𝕟𝕒. 𝕎𝕙𝕚𝕝𝕖 𝕥𝕙𝕖 𝕎𝕖𝕤𝕥 𝕕𝕖𝕓𝕒𝕥𝕖𝕤 𝕥𝕙𝕖 𝕡𝕣𝕚𝕧𝕒𝕔𝕪 𝕒𝕟𝕕 𝕤𝕖𝕔𝕦𝕣𝕚𝕥𝕪 𝕠𝕗 𝕒𝕡𝕡𝕤 𝕝𝕚𝕜𝕖 𝕋𝕚𝕜𝕋𝕠𝕜, ℂ𝕙𝕚𝕟𝕒 𝕡𝕣𝕖𝕤𝕤𝕖𝕤 𝕒𝕙𝕖𝕒𝕕 𝕨𝕚𝕥𝕙 𝕚𝕥𝕤 𝕥𝕖𝕔𝕙𝕟𝕠𝕝𝕠𝕘𝕚𝕔𝕒𝕝 𝕒𝕕𝕧𝕒𝕟𝕔𝕖𝕞𝕖𝕟𝕥, 𝕤𝕖𝕖𝕞𝕚𝕟𝕘𝕝𝕪 𝕠𝕓𝕝𝕚𝕧𝕚𝕠𝕦𝕤 𝕥𝕠 𝕥𝕙𝕖𝕤𝕖 𝕔𝕠𝕟𝕥𝕣𝕠𝕧𝕖𝕣𝕤𝕚𝕖𝕤. 𝕋𝕙𝕖 𝕘𝕒𝕞𝕖 𝕚𝕤 𝕟𝕠𝕨 𝕗𝕠𝕣 𝕖𝕧𝕖𝕣𝕪𝕠𝕟𝕖. ℂ𝕠𝕞𝕖 𝕡𝕝𝕒𝕪 𝕥𝕙𝕖 𝕘𝕒𝕞𝕖, 𝕚𝕗 𝕪𝕠𝕦 𝕕𝕖𝕡𝕖𝕟𝕕 𝕠𝕟 𝕪𝕠𝕦 𝕝𝕠𝕤𝕖, 𝕣𝕦𝕟 𝕒𝕨𝕒𝕪.

CREDITS:

Image: I, II.Artificial Intelligence, with the help of COPILOT (Designer) and with the support of VileTIC Innovation, III

CoolText

Dedicɑted to ɑll those poets who contɾibute, dɑγ bγ dɑγ, to mɑke ouɾ plɑnet ɑ betteɾ woɾld.