Today I will tell you about the history of computers in detail.

The Beginnings: Mechanical Calculators

The story of computers begins long before the sleek devices we know today. It started with the abacus, a simple counting tool used by ancient civilizations. Fast forward to the 17th century, and we see the birth of mechanical calculators like Blaise Pascal’s Pascaline and Gottfried Wilhelm Leibniz’s Step Reckoner. These early machines could perform basic arithmetic operations, laying the groundwork for more complex computing.

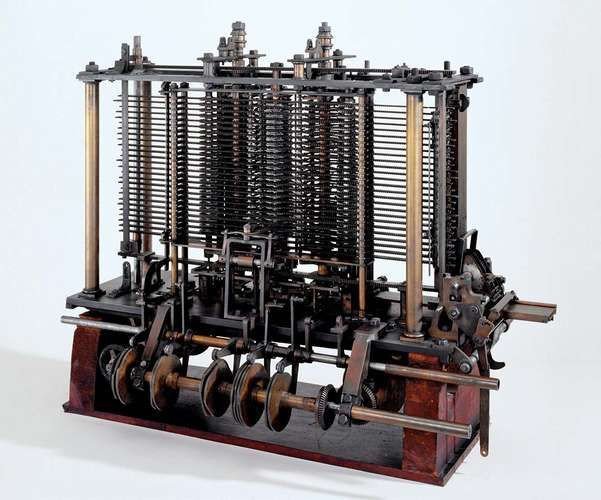

The Analytical Engine and Ada Lovelace

In the 19th century, Charles Babbage conceptualized the Analytical Engine, a mechanical computer capable of general-purpose computation. Although it was never fully built during his lifetime, his designs inspired future generations. Ada Lovelace, often credited as the world's first programmer, published an algorithm for the Analytical Engine in 1843 that computed Bernoulli numbers, and she foresaw that such a machine could do far more than crunch numbers.

The Rise of Electromechanical Machines

The early to mid-20th century marked the era of electromechanical machines. One of the most famous examples is the Harvard Mark I, developed by Howard Aiken and IBM and completed in 1944. It was a massive machine that used electromechanical relays and switches to perform calculations. Machines like it were vital to scientific research and military applications during World War II.

The Electronic Age: ENIAC and UNIVAC

The real revolution came with the advent of electronic computers. ENIAC (Electronic Numerical Integrator and Computer), unveiled in 1946, was the world's first general-purpose electronic digital computer. It used vacuum tubes for processing, marking a shift from electromechanical to fully electronic components. UNIVAC I (Universal Automatic Computer), delivered in 1951, advanced this technology further and became one of the first commercially available computers.

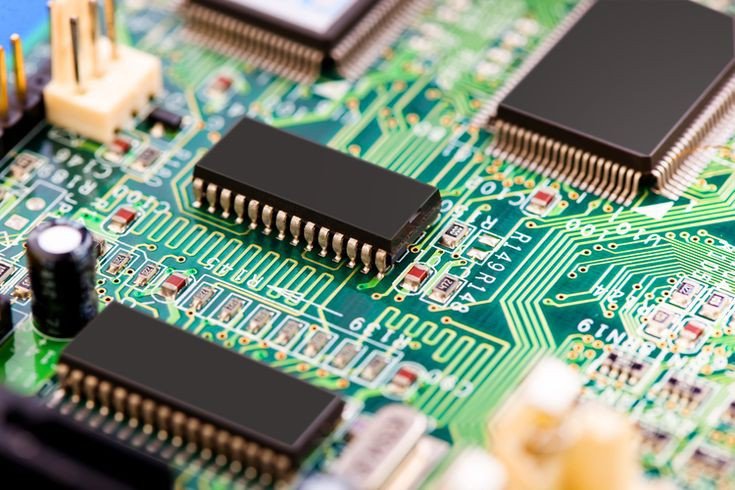

Transistors and Integrated Circuits

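The 1950s and 1960s saw significant developments built on the transistor, which was invented at Bell Labs in 1947. Transistors replaced bulky vacuum tubes, making computers smaller, faster, and more reliable. This era also saw the birth of the integrated circuit (microchip), developed independently by Jack Kilby and Robert Noyce in 1958 and 1959. By placing many transistors on a single chip, integrated circuits revolutionized computing; later chips would pack thousands and eventually millions of transistors.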

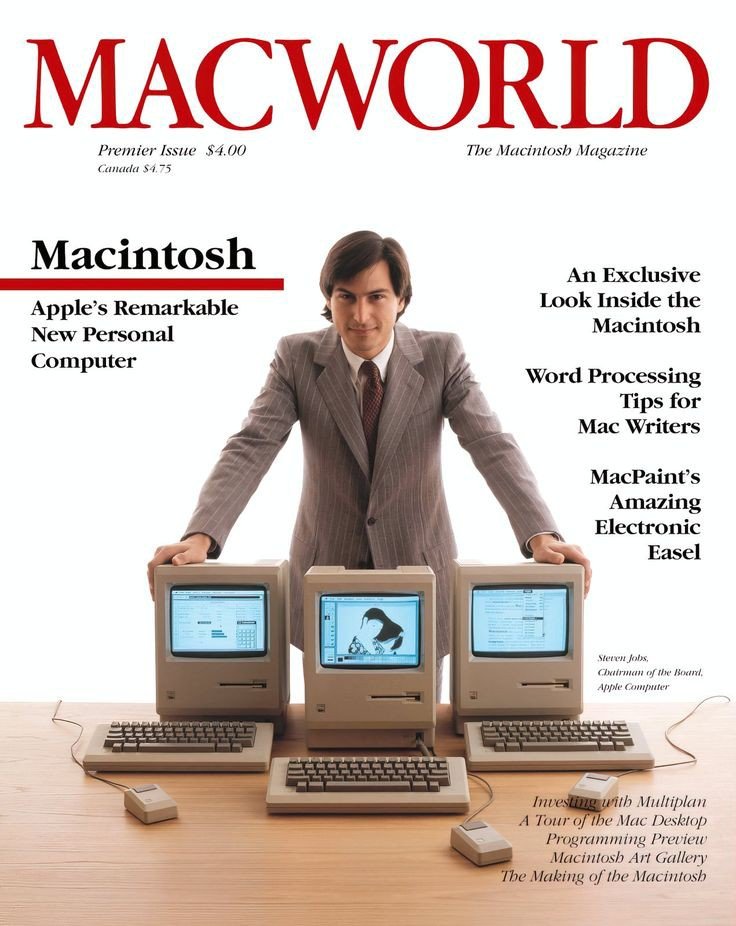

The Personal Computer Revolution

The 1970s witnessed the birth of the personal computer (PC) revolution. Through the late 1970s and the 1980s, companies like Apple, IBM, and Microsoft played pivotal roles in making computers accessible to individuals and small businesses. The Apple II, introduced in 1977, was one of the first successful mass-produced PCs, featuring an approachable design and color graphics.

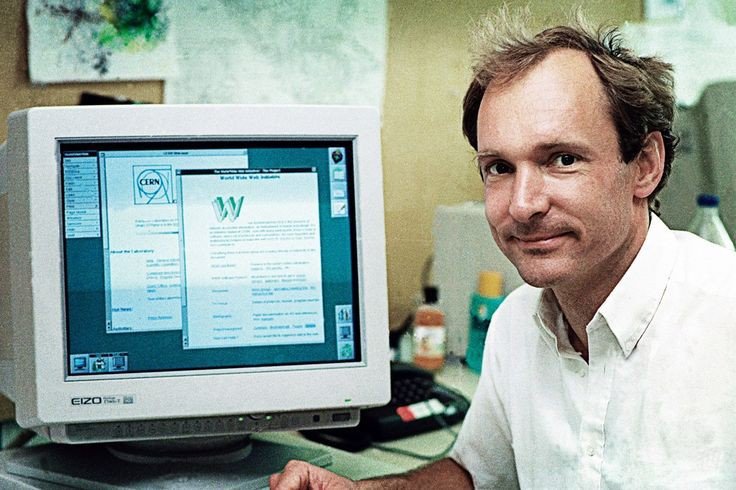

The Internet Era

The 1990s brought about the widespread use of the internet, transforming computers from standalone devices into interconnected systems. Tim Berners-Lee's invention of the World Wide Web in 1989 revolutionized how people accessed and shared information globally. This era also saw the emergence of search engines, e-commerce platforms, and early social networking sites, while email, which dated back to the 1970s, became an everyday tool for millions of people.

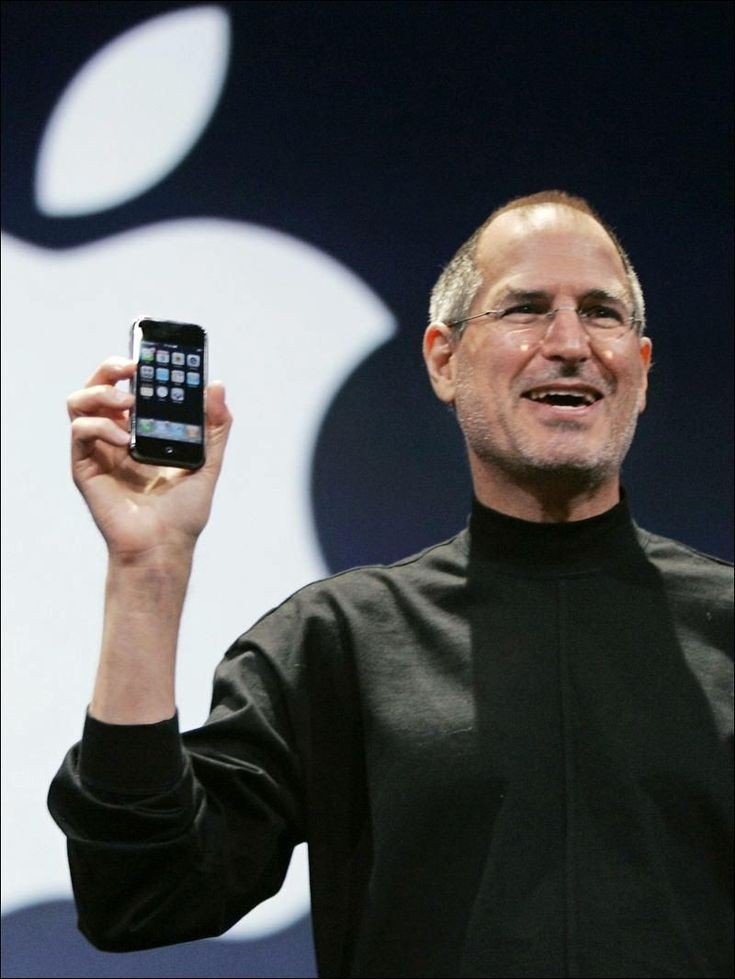

Mobile Computing and Smartphones

The early 21st century saw the rise of mobile computing with the introduction of smartphones. Devices like the iPhone, released in 2007, combined computing power with telephony, internet access, and multimedia capabilities, revolutionizing communication and entertainment. Mobile apps became an integral part of everyday life, serving needs that range from productivity to gaming and social networking.

Cloud Computing and Artificial Intelligence

In recent years, cloud computing has become ubiquitous, offering on-demand access to computing resources over the internet. This technology enables businesses and individuals to store data, run applications, and scale computing power without having to own or manage their own physical infrastructure.

Artificial intelligence (AI) has also made significant strides, with machine learning algorithms powering various applications from virtual assistants to autonomous vehicles. AI-driven technologies continue to evolve, shaping the future of computing and transforming industries across the globe.

Conclusion: A Continual Evolution

The history of computers is a testament to human ingenuity and innovation. From mechanical calculators to powerful smartphones and AI-driven systems, each era has built upon the achievements of its predecessors, pushing the boundaries of what computers can achieve. As we look to the future, advancements in quantum computing, nanotechnology, and biocomputing promise even more exciting possibilities, ensuring that the journey of computing continues to evolve and inspire generations to come.