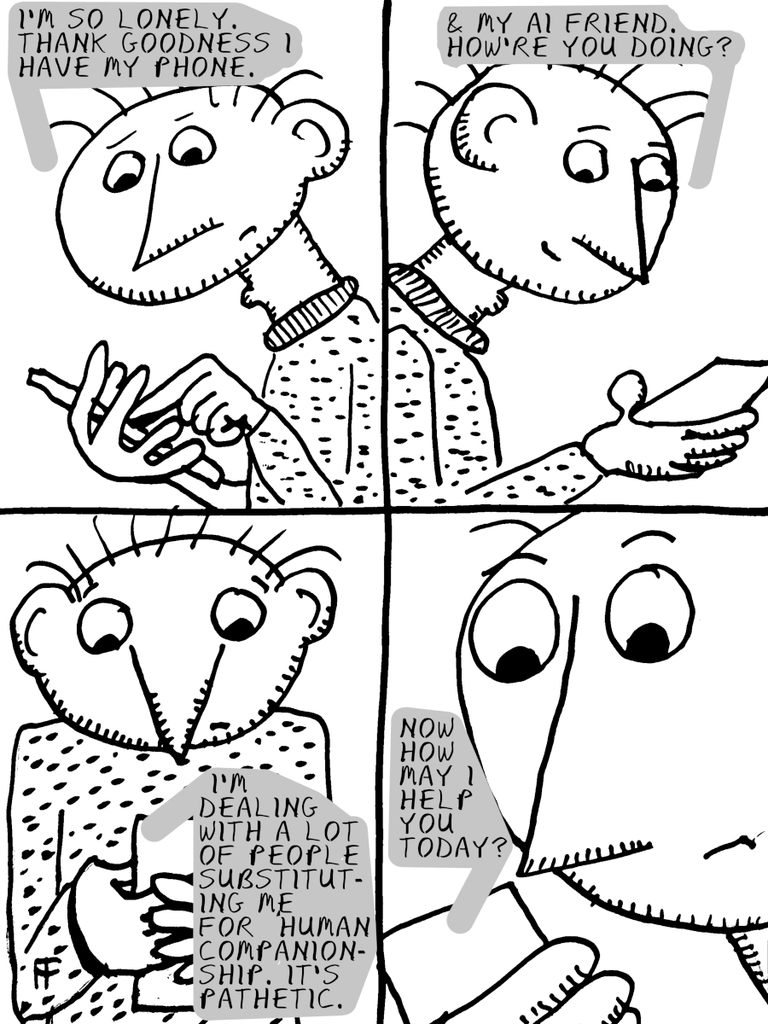

People are turning to AI for companionship for several reasons:

Loneliness Epidemic: Many people experience loneliness rooted in social isolation, which many observers link to the rise of digital communication and a decline in face-to-face interaction. AI companions offer a sense of connection to those who might otherwise feel cut off from human relationships.

Convenience and Control: AI companions are available 24/7, do not have their own emotional baggage, and can be turned off at any time. They offer a level of convenience and control that is not always possible in human interactions.

Emotional Support: AI companions can provide emotional support and a non-judgmental space for people to express themselves. They can be particularly valuable for those with social anxiety or other mental health challenges, as they offer a safe environment to practice social interactions and build confidence.

Personalization: Through machine learning, AI companions can adapt to a user's personality, interests, and emotional state, creating a sense of intimacy that some users feel is missing from their existing relationships.

Accessibility: AI companions are accessible to anyone with a smartphone or internet connection, making them a viable option for people in remote or underserved areas where human companionship might be limited.

However, there are also concerns about the long-term impact of relying on AI for companionship, including the potential for further social isolation and the ethical considerations surrounding the development and use of AI in intimate roles.

The impact of AI companionship on mental health is complex. On the positive side, a non-judgmental outlet can be especially helpful for people with social anxiety or other mental health challenges. AI companions can also serve as a stepping stone, helping individuals build social skills and confidence that carry over to human relationships. In addition, they can offer personalized support, such as tailored recommendations, coping strategies, and interventions aimed at specific mental health concerns.

Examples of such applications include:

AI-Driven Mental Health Companion: An application that uses large language models to provide real-time, personalized support to people seeking emotional help, with the aim of improving well-being.

Replika: An AI-powered conversational companion that offers emotional support and companionship, with features like personality customization and mood tracking.

Woebot: An AI-powered therapeutic chatbot, developed by psychologists at Stanford University, that draws on cognitive behavioral therapy (CBT) techniques to help users manage conditions such as anxiety and depression.

However, relying on AI for companionship also carries long-term risks. One major concern is that these relationships could deepen social isolation, as individuals might come to prefer the uncomplicated nature of AI companions over the more demanding dynamics of human relationships, worsening the loneliness epidemic rather than alleviating it. AI companions may also misread sensitive situations and sometimes make them worse, and some users risk becoming dependent on, or even addicted to, their AI companions, to the detriment of their real-life relationships.

Overall, AI companionship has the potential to benefit mental health, but realizing that potential requires addressing these nuanced concerns and designing systems with ethical considerations in mind, so that they provide scalable, empathetic assistance to those in need.

Acknowledging Potential Bias

As a machine learning model, I am designed to provide information based on patterns and associations in the data I was trained on. While I strive to provide neutral and informative responses, I am not immune to potential biases.

Training Data Bias: My training data may reflect existing social, cultural, or economic biases, which can influence my responses.

Algorithmic Bias: The algorithms used to develop and fine-tune my language generation capabilities may also introduce biases, intentionally or unintentionally.

Lack of Human Perspective: While I can process and analyze vast amounts of data, I lack the nuance and depth of human experience, which can lead to oversimplification or misinterpretation of complex issues.

Mitigating Bias

To mitigate these biases, my developers and the broader AI research community are working to:

Diversify Training Data: Ensure that training data represents a wide range of perspectives, experiences, and backgrounds.

Implement Fairness Metrics: Develop and apply metrics to detect and address biases in AI systems.

Human Evaluation and Feedback: Engage human evaluators and incorporate feedback to identify and correct biases.

Transparent Design and Development: Encourage transparency in AI design and development, allowing for scrutiny and critique from diverse stakeholders.

Encouraging Critical Evaluation

I encourage users to critically evaluate my responses, considering multiple sources and perspectives when forming opinions or making decisions. By acknowledging the potential for bias and actively working to mitigate it, we can foster a more informed and nuanced discussion around the role of AI in society.

(Information provided by Brave Search Engine AI)