Most of you are by now familiar with the rapid development of AI technology, whether you read about it online, watched it on YouTube or television, or just have an annoying friend like me who has been talking about it constantly for months now. It's everywhere, and so is ChatGPT, one of the most popular apps in the world right now and the one we all love to hate. It's a Generative Pre-trained Transformer (GPT) AI chatbot that can write articles, jokes, and emails, and it can talk about science, history, biology, etc.

That was all possible with ChatGPT, or more precisely GPT-3.5. So what does GPT-4 bring to the table, and why should we care? Well, to answer that we need to take a look at the GPT-4 technical report.

But before we get to that, I just gotta "expose" some projects that were already using this technology without people even realizing it. Anyone who tried using ChatGPT integrated into Microsoft's search engine Bing might've noticed that the chatbot was smarter than the one we all had access to on the OpenAI website. Aside from Bing:

https://openai.com/product/gpt-4

Duolingo uses it to improve language tutoring, Be My Eyes incorporates it to help people with visual impairments, and so on. Apparently, the government of Iceland wants to use GPT to preserve its language. Anyone from Iceland here? Is your language in danger?

Technical Report

Abstract

We report the development of GPT-4, a large-scale, multimodal model which can accept image and text inputs and produce text outputs. While less capable than humans in many real-world scenarios, GPT-4 exhibits human-level performance on various professional and academic benchmarks, including passing a simulated bar exam with a score around the top 10% of test takers. GPT-4 is a Transformer-based model pre-trained to predict the next token in a document. The post-training alignment process results in improved performance on measures of factuality and adherence to desired behavior. A core component of this project was developing infrastructure and optimization methods that behave predictably across a wide range of scales. This allowed us to accurately predict some aspects of GPT-4’s performance based on models trained with no more than 1/1,000th the compute of GPT-4.

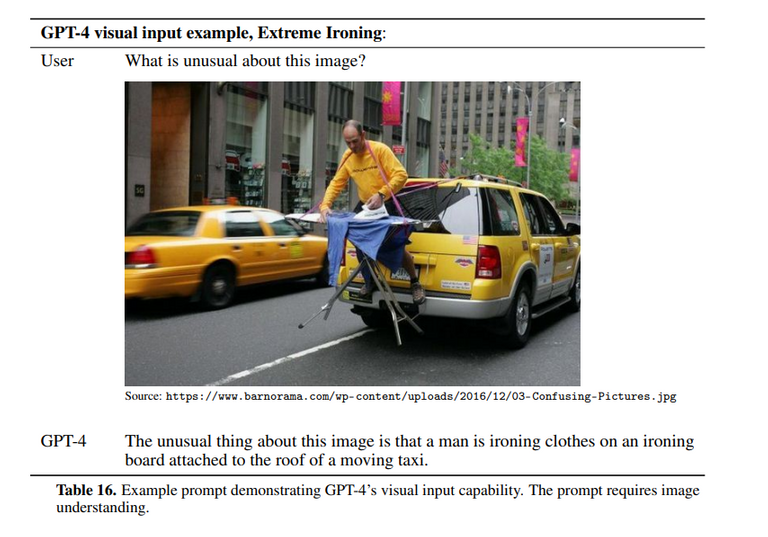

Multimodal Capabilities

So right off the bat, they tell us that GPT-4 is a multimodal model. Multimodal learning attempts to model the combination of different modalities of data, which often arises in real-world applications. In this case, GPT-4 is not only trained to understand and use language but can also work with images.

Page 9 of the Document

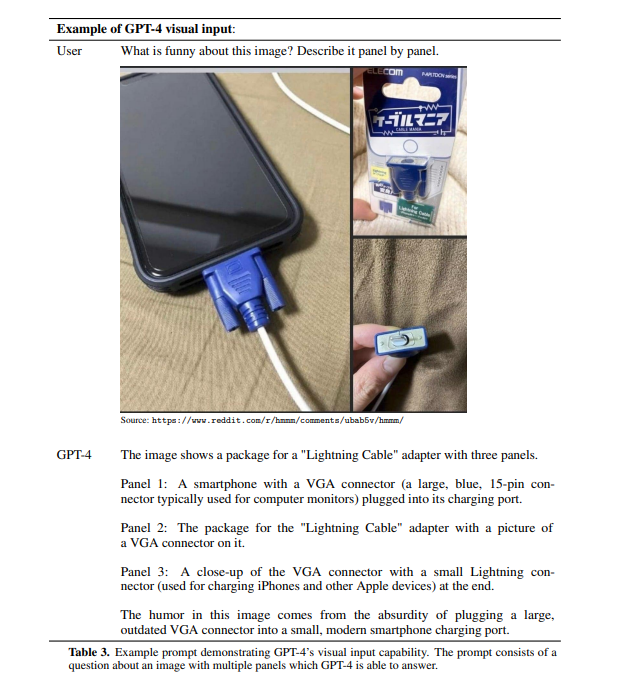

In the example they showed us here we can see that they used an image made up of three panels as input and asked GPT a question about it: "What is funny about this image? Describe it panel by panel."

Not only does GPT recognize everything going on in each of these panels, but it also understands the absurdity of the situation, as shown by this response:

"The humor in this image comes from the absurdity of plugging a large, outdated VGA connector into a small, modern smartphone charging port."

I don't know about you guys, but I'm impressed. Aside from great image recognition, it can understand the full context these images create.

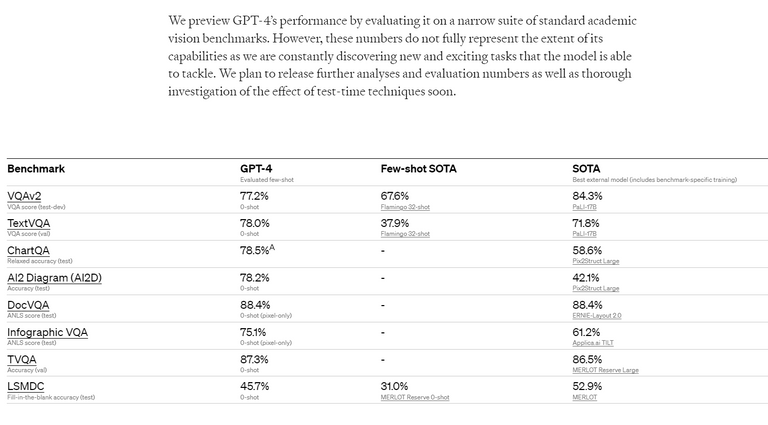

https://openai.com/research/gpt-4

Not only that, OpenAI claims that GPT-4's image-to-text ability outperforms the State-of-the-Art (SOTA) in almost every image-to-text benchmark test they've done. It performs exceptionally well in tests for understanding infographics and charts. Charts, you say? I would imagine this will have a great impact on finance if these claims are true.

Professional and Academic Performance

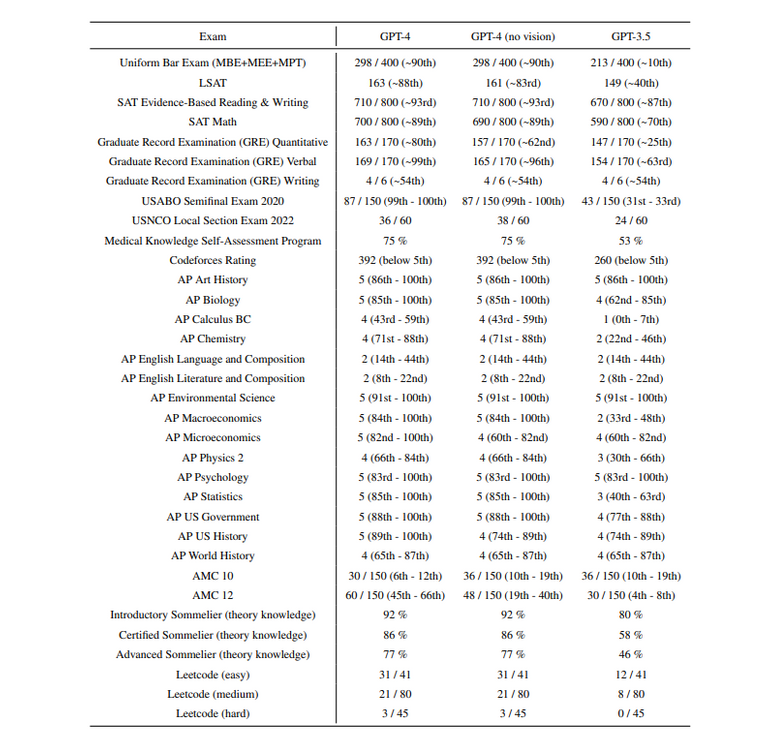

Page 5 of the Document

Now, this looks interesting. Apparently, GPT-4 can pass a Bar Exam and legally become a lawyer, haha. By the way, the (90th) next to the score is the percentile: GPT-4 did better than roughly 90% of the people who took that exam. So you could say that GPT-4 could potentially be a pretty good lawyer, a top 10% one to be more precise.
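If percentiles are new to you, here is a minimal sketch of the idea in Python. The scores are completely made up for illustration (they are not from the report), `percentile_rank` is a hypothetical helper name, and counting the share of scores strictly below yours is just one common way to define a percentile.

```python
def percentile_rank(score, all_scores):
    """Return the percentage of scores that are strictly below `score`."""
    below = sum(1 for s in all_scores if s < score)
    return 100.0 * below / len(all_scores)


# Made-up exam results for ten test takers (illustration only).
scores = [41, 48, 52, 55, 59, 63, 67, 72, 78, 85]

# The best score beats nine of the ten test takers.
print(percentile_rank(85, scores))  # 90.0
```

So a "90th percentile" result simply means that about nine out of ten test takers scored lower.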

It can graduate high school and pass introductory-level college courses by itself; this was kinda expected, to be honest. It knows the theory about Sommelier(ing?), cool I guess, haha.

Its coding still sucks, but it is getting better. You just need to see how it can use its new image recognition capability to make a basic website. They showed it in their live demo. The video should be timestamped, but if for some reason it isn't, skip to 16:11: https://www.youtube.com/live/outcGtbnMuQ?feature=share&t=971

Understands Language Even Better

Page 7 of the Document

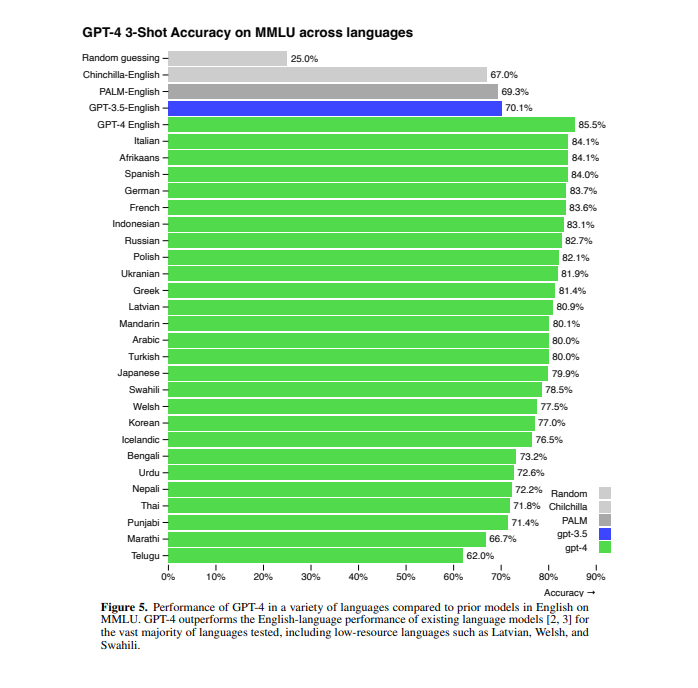

Basically, what you are seeing here is GPT-4's accuracy in understanding and using these languages in "3-shot accuracy" testing. What that means is that the AI gets only three solved example questions in the prompt before it has to answer a new one, with no extra training. This is pretty insane! GPT-4 is getting so much better with so many languages. It even blows the competition out of the water. Compared to Chinchilla and Google's PaLM, in around 20 languages it scores better than those two do in their main language, English (who would've thought, right?).
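To make "3-shot" a bit more concrete, here is a rough Python sketch of how such a prompt could be put together: three solved multiple-choice questions first, then the real question left open for the model. The questions, the layout, and the function names are my own assumptions for illustration, not the exact format OpenAI used in their tests.

```python
from typing import List, Tuple

# Hypothetical example format: (question, answer options, letter of the correct option).
Example = Tuple[str, List[str], str]

LETTERS = "ABCD"


def format_question(question: str, options: List[str]) -> str:
    """Render one multiple-choice question with lettered options."""
    lines = [question]
    lines += [f"{LETTERS[i]}. {option}" for i, option in enumerate(options)]
    lines.append("Answer:")
    return "\n".join(lines)


def build_few_shot_prompt(examples: List[Example], question: str, options: List[str]) -> str:
    """Put the solved examples first, then the new question left unanswered."""
    blocks = [f"{format_question(q, opts)} {answer}" for q, opts, answer in examples]
    blocks.append(format_question(question, options))
    return "\n\n".join(blocks)


# Three made-up solved examples: these are the "3 shots".
examples = [
    ("What is 2 + 2?", ["3", "4", "5", "6"], "B"),
    ("Which planet is closest to the Sun?", ["Mercury", "Venus", "Earth", "Mars"], "A"),
    ("What is the chemical formula for water?", ["CO2", "O2", "H2O", "NaCl"], "C"),
]

prompt = build_few_shot_prompt(
    examples,
    "How many days are in a week?",
    ["5", "6", "7", "8"],
)
print(prompt)
```

The model never gets retrained on those three examples. They just sit in the prompt as a hint about the kind of answer that is expected, and the model has to fill in the last "Answer:" on its own.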

Hallucination Problems

Page 10 of the Document

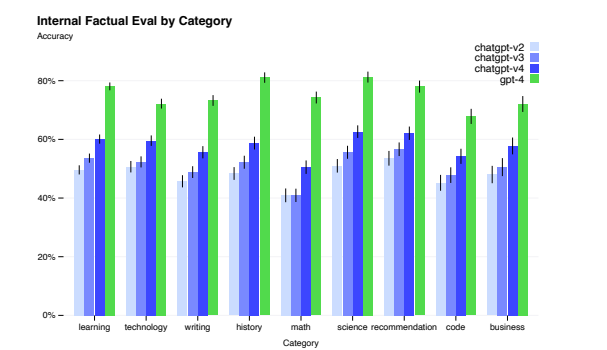

Those of you who have used ChatGPT know that it sometimes struggles to provide accurate facts or just outright says something that makes no sense whatsoever. Especially if you tried doing math with it, you'd get something like 2+2=3 or whatever. That still happens with GPT-4, but significantly less. This is currently one of the traps people can fall into if they are trying to learn stuff from GPT. If you don't know much about a topic you might just end up learning false information, but if you are careful and fact-check the AI you can still do a lot more in so much less time.

GPT-4 generally lacks knowledge of events that have occurred after the vast majority of its pre-training data cuts off in September 2021, and does not learn from its experience. It can sometimes make simple reasoning errors which do not seem to comport with competence across so many domains, or be overly gullible in accepting obviously false statements from a user. It can fail at hard problems the same way humans do, such as introducing security vulnerabilities into code it produces.

In short, GPT-4 still lacks up-to-date knowledge and can fail at hard tasks just like humans do, so be careful if you are doing something you don't understand well enough, like coding something that handles sensitive information.

Safety Concerns

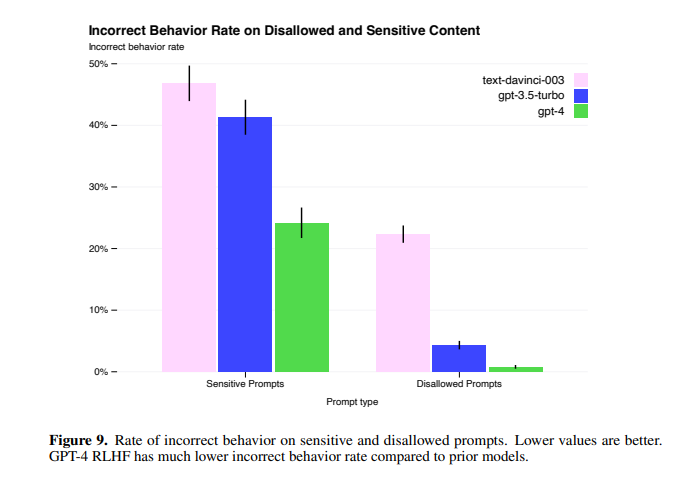

As with previous versions, GPT-4 can sometimes prove itself harmful. It won't directly harm people, but as you might've heard, some people were successfully tricking ChatGPT into giving them instructions on how to build bombs and rob people.

When given unsafe inputs, the model may generate undesirable content, such as giving advice on committing crimes. Furthermore, the model may also become overly cautious on safe inputs, refusing innocuous requests or excessively hedging.

In the same way that GPT experiences hallucinations, sometimes it lets slip information it shouldn't. The concern is that it might be used to help criminals harm and steal while, at the same time, refusing to answer innocuous questions.

Page 14 of the Document

This goes to show how much improvement they had in this department and I'm happy to see this. Even though it's not perfect and we should really get the perfect result here, I would say it's not bad at all, considering how much progress was made in such a short time. Hopefully, they can push that to 0% without having to limit the expected functionality of the AI in the process. But even if they have to sacrifice its overall performance I would much rather see this chart showing 0% than getting 50% more functionality. Don't we all wanna see that?

I just wanna say how impressed I am with the progress being made with this version of GPT. Not only did it get smarter but now it's becoming a multimodal model. Sadly the image functionality isn't yet available to the public through ChatGPT and I can't wait to test it out.

It's also really good to see that they aren't just foolishly rushing into it, or at least it looks that way. They are also thinking about the security of the tech, even though they are in hot competition with other big players.

In the next post, I'm gonna take a look at a slightly scary part of this document that shows how AI can become power-hungry and take over the world. But there's no need to rush, it's not like Skynet is gonna happen tomorrow. Anyway, I'm gonna leave you with one more funny image from the document.

And as always, thank you for reading.

Page 34 of the Document

Sources:

OpenAI Website - https://openai.com/

GPT-4 Technical Report - https://cdn.openai.com/papers/gpt-4.pdf

Create your own Hive account HERE
