KEY FACT: OpenAI co-founder Ilya Sutskever has predicted a shift in AI development, stating that the era of pre-training models is nearing its end due to data scarcity. At the NeurIPS 2024 conference, Sutskever emphasized that AI developers must transition to alternative strategies, including agentic AI, synthetic data generation, and optimizing inference computations. These innovations aim to overcome the limitations of finite data and pave the way for reasoning-based AI systems capable of autonomous decision-making. However, challenges like AI hallucinations and dataset flaws remain critical hurdles.

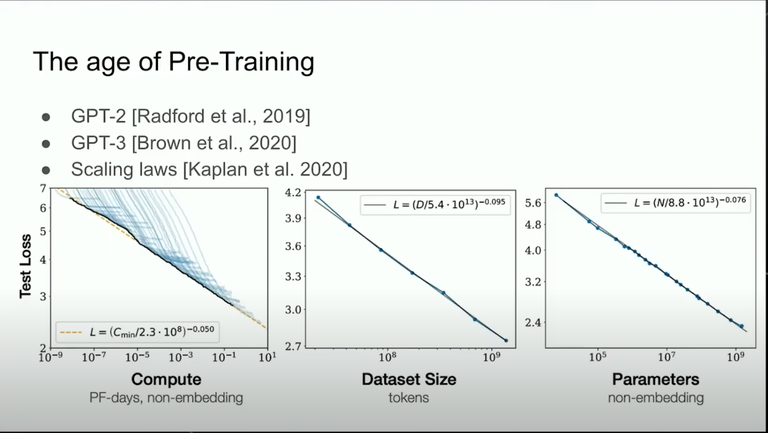

Source: TheAIGRID, Ilya Sutskever / Charts comparing computing power and dataset size for AI pre-training

AI Pre-training Age Fading Out

In a recent address at the Neural Information Processing Systems (NeurIPS) 2024 conference in Vancouver, Canada, OpenAI co-founder Ilya Sutskever declared that the era of artificial intelligence (AI) pre-training is nearing its end, signaling a transformative shift in AI development.

Sutskever highlighted a critical challenge facing the AI community: computing power, driven by better hardware, software, and machine-learning algorithms, is growing faster than the data available for training AI models. He likened data to fossil fuels, emphasizing its finite nature.

"Data is not growing because we have but one internet," Sutskever remarked. "You could even say that data is the fossil fuel of AI. It was created somehow, and now we use it, and we've achieved peak data, and there will be no more—we have to deal with the data that we have."

This data scarcity calls for a shift in AI development strategies. Sutskever predicts that the next phase will focus on three key areas: agentic AI, synthetic data, and inference-time computing.

On agentic AI, Sutskever said that AI agents can make decisions and perform tasks without human intervention, a capability beyond current chatbot models. Such agents have already gained traction in the crypto space, exemplified by large language models like Truth Terminal. Notably, Truth Terminal promoted a meme coin called Goatseus Maximus (GOAT), which reached a market capitalization of $1 billion and attracted attention from both retail investors and venture capitalists.

Sutskever added that AI systems will increasingly rely on synthetic data, meaning artificially generated information used to train and improve AI models, to offset the limits of finite real-world data. This approach allows developers to create diverse and extensive datasets, supporting the development of more robust AI systems.
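To make the idea of synthetic data concrete, here is a minimal Python sketch that generates question-and-answer pairs from a template instead of collecting them from the internet. The function name `make_synthetic_examples`, the arithmetic task, and the `prompt`/`completion` field names are all illustrative assumptions, not anything Sutskever described; real pipelines typically use a model to generate and filter far richer data.

```python
import random

def make_synthetic_examples(n, seed=0):
    """Generate n arithmetic question/answer pairs (hypothetical toy task)."""
    rng = random.Random(seed)  # fixed seed so the dataset is reproducible
    examples = []
    for _ in range(n):
        a, b = rng.randint(1, 999), rng.randint(1, 999)
        op = rng.choice(["+", "-", "*"])
        # The answer is computed, not scraped, so every label is correct by construction.
        answer = {"+": a + b, "-": a - b, "*": a * b}[op]
        examples.append({"prompt": f"What is {a} {op} {b}?", "completion": str(answer)})
    return examples

if __name__ == "__main__":
    for example in make_synthetic_examples(3):
        print(example)
```

Because the examples are produced by a program, the dataset can be made as large as needed, which is the property that makes synthetic data attractive once real-world data runs short.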

Inference-time computing involves enhancing the computational processes that occur when an AI model makes predictions or decisions, rather than during the initial training phase. Sutskever said that AI systems can achieve greater efficiency and effectiveness in real-time applications by optimizing inference computations.
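One common way to spend extra computation at inference time is to sample several answers and keep the most frequent one (often called majority voting or self-consistency). The sketch below is only an illustration under stated assumptions: `noisy_solver` is a hypothetical stand-in for a model that is wrong 30% of the time, and the article does not say which inference-time technique Sutskever had in mind.

```python
import random
from collections import Counter

def noisy_solver(a, b, rng, error_rate=0.3):
    """Hypothetical stand-in for a model: returns a + b, but is off by one
    with probability error_rate."""
    correct = a + b
    if rng.random() < error_rate:
        return correct + rng.choice([-1, 1])
    return correct

def majority_vote(a, b, n_samples, rng):
    """Sample the solver n_samples times and return the most common answer."""
    answers = [noisy_solver(a, b, rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]

def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of random addition problems answered correctly."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        a, b = rng.randint(0, 99), rng.randint(0, 99)
        if majority_vote(a, b, n_samples, rng) == a + b:
            hits += 1
    return hits / trials

if __name__ == "__main__":
    print("1 sample per question:  ", accuracy(1))
    print("15 samples per question:", accuracy(15))
```

The underlying solver never changes between the two runs; only the amount of computation spent per question does. That is the basic trade-off behind inference-time computing: more work at answer time in exchange for more reliable answers.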

These advancements are poised to pave the way for AI superintelligence—systems that possess reasoning capabilities and can process information in a manner akin to human thought. Sutskever noted that as AI systems evolve to reason, they become more unpredictable, drawing parallels to how advanced AI in games like chess can surprise even the best human players.

The shift towards agentic AI is particularly noteworthy in the cryptocurrency world. These autonomous systems can make independent decisions, a feature that has led to the emergence of AI-driven meme coins and other digital assets. Beyond crypto, Google DeepMind recently unveiled Gemini 2.0, an AI model designed to power such agents, enabling them to assist with complex tasks such as coordinating actions across websites and logical reasoning.

However, the transition to reasoning AI systems is not without challenges. One significant issue is the phenomenon of AI hallucinations, where models produce incorrect or nonsensical outputs. These errors often stem from flawed datasets and the practice of using older language models to train newer ones, which can degrade performance over time. Addressing these challenges will be crucial as the AI community moves beyond the pre-training paradigm.

As the AI sector approaches the limits of data availability, the focus is shifting towards creating more autonomous, reasoning, and efficient AI systems. This evolution promises to unlock new capabilities and applications, heralding a new future for AI and its integrations.

If you found the article interesting or helpful, please hit the upvote button and share it so other Hive friends can see it. More importantly, drop a comment below. Thank you!

Let's Connect

Hive: inleo.io/profile/uyobong/blog

Twitter: https://twitter.com/Uyobong3

Discord: uyobong#5966
