Typically, a neural net is trained on a large amount of data. After training, it will hopefully perform well on this training set; in fact, the tools for training a neural net are built specifically to improve its performance on the training data it is fed. If performance on the training data is good, we would also like to see how well the net performs on data outside the training set, the so-called test set. This checks that the net actually generalizes to data it has not seen during training.

My cat scoring high performance on being cute

If the neural net is trained for very long on the training set, it ends up learning the individual cases in the training set. In essence, it is memorizing the elements of the set (this is known as overfitting). When this happens, it cannot handle anything new. More practically, it will fail to perform well on the test set. So there is a sweet spot: you let it learn the training set long enough that it performs well on both sets, but not so long that it performs well only on the training set.

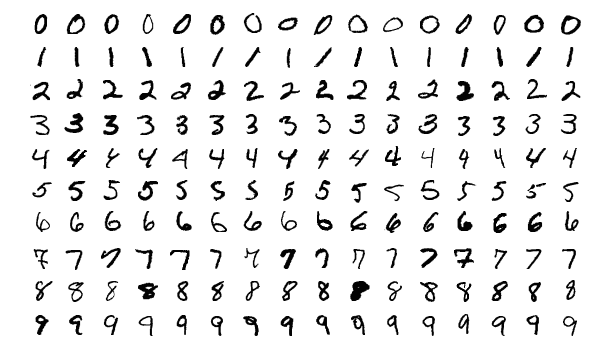
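To make the sweet spot concrete, here is a minimal sketch of one common way to pick it, usually called early stopping: watch the loss on held-out data and stop once it has not improved for a few epochs. The function name `early_stopping_epoch`, the `patience` parameter, and the loss numbers are all made up for illustration. In practice this decision is usually made on a separate validation set, so that the test set stays untouched.

```python
def early_stopping_epoch(heldout_losses, patience=3):
    """Return the epoch with the lowest held-out loss seen so far,
    giving up once `patience` epochs pass without improvement."""
    best_epoch = 0
    best_loss = float("inf")
    for epoch, loss in enumerate(heldout_losses):
        if loss < best_loss:
            # Held-out performance is still improving: remember this epoch.
            best_loss = loss
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs: the net is likely
            # starting to memorize the training set.
            break
    return best_epoch


# Hypothetical loss curves (not real measurements).
# Training loss keeps falling, held-out loss turns around after epoch 4.
train_losses = [1.00, 0.70, 0.50, 0.35, 0.25, 0.18, 0.12, 0.08]
heldout_losses = [1.05, 0.80, 0.62, 0.55, 0.53, 0.56, 0.61, 0.68]

sweet_spot = early_stopping_epoch(heldout_losses)
print(sweet_spot)  # 4
```

Note that the training loss alone would never tell you to stop; only the held-out curve reveals where memorization begins.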

This way of training neural nets depends crucially on the data. The data has to contain a clear pattern for the neural net to be able to identify it. For example, if we want the neural net to classify handwritten digits, a standard data set is MNIST, which contains fewer than 100,000 images (70,000 in total), each showing a single digit at 28x28 pixels. 100,000 images might seem like a lot, but it is only a tiny fraction of all possible pictures. Assuming each pixel can only be black or white, there are 2^(28*28) = 2^784 possible images, which is vastly larger than the data set: 2^17 = 131,072 is already bigger than 100,000.
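The counting argument is easy to check directly, since Python integers have arbitrary precision. This is just a sketch of the arithmetic, using the same simplifying assumption of purely black-or-white pixels and the round figure of 100,000 images; the variable names are my own.

```python
import math

n_pixels = 28 * 28                   # 784 pixels per image
n_possible_images = 2 ** n_pixels    # every black/white assignment of the pixels
dataset_size = 100_000               # round upper bound used in the text

print(2 ** 17)                       # 131072, already more than 100,000
print(len(str(n_possible_images)))   # 237 decimal digits

# Fraction of all possible images that the data set could cover at most,
# expressed as a power of ten.
log10_fraction = math.log10(dataset_size) - math.log10(n_possible_images)
print(round(log10_fraction))         # -231
```

So even a data set of 100,000 images covers roughly one part in 10^231 of the space of possible 28x28 black-and-white pictures.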

You can view language the same way. A sentence is a very specific sequence of letters. If we consider all possible sequences of letters of sentence length, we already get a number greater than the number of particles in the (observable) universe, roughly 10^80.
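How long does a "sentence" have to be for this to hold? A quick sketch, assuming a 26-letter alphabet with no spaces or punctuation (my simplification, not a claim about real language) and taking 10^80 as the particle count:

```python
ALPHABET_SIZE = 26        # assumption: lowercase a-z only
PARTICLES = 10 ** 80      # rough particle count of the observable universe

# Find the shortest length at which the number of letter sequences
# exceeds the number of particles.
length = 1
while ALPHABET_SIZE ** length <= PARTICLES:
    length += 1

print(length)  # 57
```

Under these assumptions, 57 letters are enough, which is shorter than many ordinary sentences.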

Returning to neural nets: during training, the net is trying to find a pattern. For a fixed architecture, the number of possible neural nets (all the ways of setting the weights) is much larger than the data set itself. From that perspective, well-optimized neural nets are sparse in the collection of all neural nets. The more philosophical point here is that patterns are generically not created at random: the time required to generate a pattern using randomness, or even to find one with randomness, is practically infinite relative to the size of the universe.
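To get a feeling for how large "all possible neural nets" is, here is a rough sketch for one hypothetical architecture: a small fully connected net with layer sizes 784, 128 and 10, where every weight and bias is stored as a 32-bit number. Both the architecture and the 32-bit assumption are mine, chosen only for illustration.

```python
import math

layer_sizes = [784, 128, 10]   # hypothetical: input, one hidden layer, output
bits_per_weight = 32           # assumption: 32-bit numbers

# Each layer has n_in * n_out weights plus n_out biases.
n_params = sum(
    n_in * n_out + n_out
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
)

# Every distinct bit pattern of the parameters is a distinct net,
# so there are 2 ** log2_networks of them.
log2_networks = n_params * bits_per_weight
log2_dataset = math.log2(100_000)

print(n_params)                 # 101770
print(log2_networks)            # 3256640
print(round(log2_dataset, 1))   # 16.6
```

So this small net has about 2^3,256,640 possible settings, compared with a data set of fewer than 2^17 images. Picking weights at random and hoping to land on a good net is hopeless at this scale, which is why training follows the data instead.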