Introduction

The AI revolution is in full swing, with new fields and industries finding innovative applications for this emerging technology. Since ChatGPT came onto the scene, AI language models have found their way into virtually every sector of human endeavor, including health, science, and education. While AI companies tend to show us only the many advantages of AI in boosting productivity, AI has also raised glaring concerns, especially with regard to data use and privacy.

With regard to data use and privacy rights, it is not surprising that some countries, like the US and UK, have taken steps to prevent AI platforms from collecting personal data without letting users know. This is because it has already been confirmed that many social media networks feed personal data uploaded to their platforms into their AI engines without the prior knowledge or authorization of the owners. This endangers user privacy and exposes users to outside threats if their data is leaked.

Social media platforms - a case study

The importance of social media platforms to modern life cannot be overemphasized. People practically live on these platforms, as they connect us to our friends, family and the world. Users upload loads of personal data to social media platforms for social interaction. While that data is shared to have fun or simply to let friends know how we are doing, some platforms have been accused of feeding it to their AI models for training. Unfortunately, many social media users are not aware of this.

In essence, the nice selfie you took at a beach on some remote island and uploaded to a social media platform might end up being reproduced by an AI model in response to the right prompts. AI from social media platforms might release the original personal data, or a slightly modified version of it, when users query the engine.

Even if a social media platform does not use personal data in this way to train its own AI, there is a risk that it could pass personal data on to AI partners to be used in training their models. As a result, a partner's AI might leak the personal data, or a slightly altered version of it, to the public. So if you are active on social media and regularly post personal data like images and videos, it is very important to understand how your data is handled and which privacy terms might commit you to having your data used to train AI. Let's look at two prime examples.

LinkedIn uses personal data for AI training

Corporate social media platform LinkedIn recently updated its data usage policy on generative AI. While most users of the platform might not be aware, LinkedIn already uses personal data from conversations and posts on the platform to train its generative AI. With the conversation around personal data usage in AI training heating up, LinkedIn has acknowledged this use and updated its policy to inform users.

Here is an example that LinkedIn provided to illustrate how personal data supplied by users can pass through its generative AI:

For example, if a member used our generative AI "Writing Suggestions" feature to help write an article about the best advice they got from their mentors, they may include the names of those mentors in the input. The resulting output from the generative AI "Writing Suggestions" feature may include those names, which that member can edit or revise before deciding to post. source

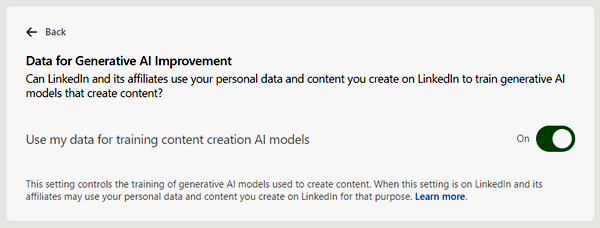

By default, LinkedIn sets up user accounts to allow personal data to be used by its generative AI. Each user therefore has to disable this setting themselves to protect their data and prevent it from being used in training AI models.

- How to opt out: If you wish to stop LinkedIn from using your posts and personal data for AI training, go to Data privacy under Settings and switch off the toggle for generative AI improvement.

Unfortunately, LinkedIn had been using personal data to train its AI without letting people know. The opt-out setting was added only a few days ago. So even if users stop LinkedIn from using their data for AI training in the future, they cannot do anything about data that has already been used without their consent. It can't be removed from the AI engine.

X and user data for AI

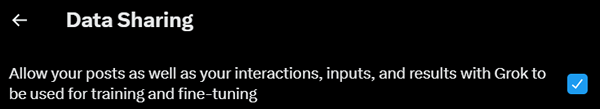

X (formerly Twitter) is another platform that is using your data, in this case to train its Grok AI. Grok is the AI assistant on the microblogging platform, and xAI is the service provider behind it. X has indicated that users who do not want their data used for Grok improvement can change that setting in their account. But just like with LinkedIn, this setting cannot undo the use of data that has already been used.

Below is what X said to confirm that it uses personal data uploaded to its platform for AI training:

To continuously improve your experience, we may utilize your X posts as well as your user interactions, inputs and results with Grok for training and fine-tuning purposes. This also means that your interactions, inputs, and results may also be shared with our service provider xAI for these purposes. source

- How to opt out: Go to https://x.com/settings/grok_settings and switch off the data sharing toggle.

Conclusion

If you do not want one of your selfies or other personal data to show up in an AI prompt result, dig in and check the policies and settings each of your social media platforms offers to protect user data from AI training and improvement.

Note: thumbnail is mine
