Microsoft says its new tool, Correction, can fix AI hallucinations and help verify the accuracy of results from AI models. Experts, however, advise caution: Correction could make the problem worse overall, even if it delivers on its claims in some areas of generative AI use.

Source: Reuters

AI cannot be trusted all of the time. Its biggest flaw is that it hallucinates. There have been countless well-known instances where AI models not only provided false information but also said things that simply aren't plausible, like suggesting that a user put glue on their pizza to make the cheese stick. Microsoft's Correction is intended to revise AI text outputs that are factually wrong, and it can be used with other companies' models, such as Meta's Llama and OpenAI's GPT-4o.

“Correction is powered by a new process of utilising small language models and large language models to align outputs with grounding documents,” a Microsoft spokesperson told TechCrunch. “We hope this new feature supports builders and users of generative AI in fields such as medicine, where application developers determine the accuracy of responses to be of significant importance.” TechCrunch

It does sound like a good answer to AI's hallucination problem: find what's "off" about a chatbot's response and have it corrected. But Correction is itself artificial intelligence and can also hallucinate, so we might end up with even more problems than we already have.

How Microsoft Correction Works

Hallucinations are fundamental to how AI works. Artificial intelligence doesn't "know." It only predicts what should come next in a pattern, based on what it has been trained on. That, in itself, is what allows AI to "generate." Hence, Os Keyes, a Ph.D. candidate at the University of Washington, says, "Trying to eliminate hallucinations from generative AI is like trying to eliminate hydrogen from water," and that "it's an essential component of how the technology works."
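To make the "it only predicts what comes next" point concrete, here is a minimal sketch of a toy next-word predictor. It is an illustration only: real language models are vastly larger and work differently in detail, and the corpus, function names, and prompt below are all made up for this example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

def complete(follows, prompt):
    """Append one predicted word to the prompt, looking only at its last word."""
    words = prompt.split()
    if not words:
        return prompt
    guess = predict_next(follows, words[-1])
    return prompt if guess is None else f"{prompt} {guess}"

# Hypothetical training text: "paris" follows "is" more often than anything else.
corpus = (
    "france is lovely . the capital of france is paris . "
    "everyone says the capital is paris . the capital of spain is madrid ."
)
follows = train_bigrams(corpus)

# The toy model has no notion of truth, only of which word is most likely next.
print(complete(follows, "the capital of spain is"))
```

The completion reads fluently and is wrong: the toy model picks "paris" because that is the most common word after "is" in its training text, not because it knows anything about Spain. That is a hallucination in miniature.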

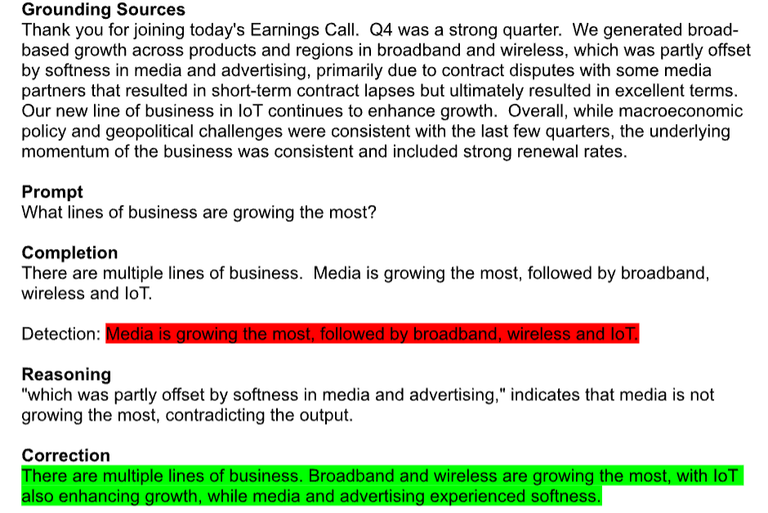

Source: Microsoft

In the example above, the AI model is given a grounding source and then asked a question based on it. It nevertheless produces a factually wrong output. Correction then revises that output so that the correct answer is given. This is Microsoft's approach: a classifier model checks for possible errors, and if it detects hallucinations, a language model is brought in to rewrite them.
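The detect-then-rewrite flow described here can be sketched roughly as follows. This is not Microsoft's implementation or API. The "classifier" is replaced by a crude word-overlap check and the "language model" by simply swapping in the closest grounding sentence. Every function name, the threshold, and the sample text are assumptions made for illustration.

```python
import re

def _tokens(text):
    """Lowercased set of words and numbers in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def support_score(claim, grounding_sentence):
    """Stand-in for the classifier: share of the claim's words found in the grounding sentence."""
    claim_words = _tokens(claim)
    if not claim_words:
        return 0.0
    return len(claim_words & _tokens(grounding_sentence)) / len(claim_words)

def correct(output, grounding, threshold=1.0):
    """Flag output sentences the grounding text does not support and replace them.

    Returns the revised text and the list of flagged sentences.
    The default threshold is deliberately strict: every word must be grounded.
    """
    grounding_sentences = split_sentences(grounding)
    if not grounding_sentences:
        return output, []
    revised, flagged = [], []
    for sentence in split_sentences(output):
        best = max(grounding_sentences, key=lambda g: support_score(sentence, g))
        if support_score(sentence, best) >= threshold:
            revised.append(sentence)
        else:
            flagged.append(sentence)
            # Stand-in for the rewriting model: reuse the closest grounding sentence.
            revised.append(best)
    return " ".join(revised), flagged

# Hypothetical grounding document and model output.
grounding = "The report was published in 2021. It covers three regions."
model_output = "The report was published in 2019. It covers three regions."

revised, flagged = correct(model_output, grounding)
print(flagged)
print(revised)
```

Even in this toy version, the checker can be wrong in both directions. A correct paraphrase that uses different words gets flagged, and a false sentence that reuses the grounding text's vocabulary slips through. The correction step inherits whatever weaknesses its own detector has.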

The New Problem Correction Brings

Understandably, Correction isn't going to be one hundred percent accurate, especially since it is AI itself. And people who think there is a working system keeping their AI models in check could rely on it so much that they become far less cautious and less likely to fact-check.

“Microsoft, like OpenAI and Google, have created this issue where models are being relied upon in scenarios where they are frequently wrong,” Mike Cook, researcher at Queen Mary University, said. “What Microsoft is doing now is repeating the mistake at a higher level. Let’s say this takes us from 90% safety to 99% safety—the issue was never really in that 9%. It’s always going to be in the 1% of mistakes we’re not yet detecting.” TechCrunch

And what happens if Correction needs a correction? AI cannot be entirely trusted, and won't be for a long time, due to its inherent tendency to hallucinate. The tools designed to fix errors could also hallucinate while trying to correct them. And then there's the problem of users becoming complacent about the reliability of AI results.

Microsoft's Correction tool is a step forward in the development of AI, but it isn't the solution to AI's hallucination problem, and it is debatable whether this is the right time to send it out into the world. As Mike Cook puts it, "Microsoft and others have loaded everyone onto their exciting new rocket ship and are deciding to build the landing gear and parachutes on the way to their destination."
