In reference to @neoxian's post The Stupidity of Hivewatchers, I have been both a participant in and an observer of anti-abuse efforts on both STEEM and HIVE. I'm talking more about the response to plagiarism, missing citations, etc., and less about obvious abuse such as spam. That post has plenty of merit, but I'd like to take a different tack. While I feel anti-abuse is important, the methodology we as a community have been supporting has been missing the mark for a long time. I quit participating in anti-abuse simply because it was quixotic, thankless and downright soul-sucking, which makes it work better suited to a non-human.

@klye was on target with his response with regard to what I feel is the correct technical approach to anti-abuse:

As he stated, the human element needs to be removed from the equation. The real question is whether the technology exists to feed JSON data into an API in order to detect plagiarism. A simple Google search for 'plagiarism api' or 'AI generated content detection API' returns multiple hits. From that we can at least agree that the technology may well exist and that more exploration is warranted.
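
To make the idea concrete, here is a minimal sketch of what the simplest form of such a check could look like: it takes a post as JSON and measures how many of its three-word sequences also appear in a candidate source. This is only an illustration under my own assumptions. The `body` field name, the function names, and the word-shingle technique are choices I made for the example, not how any particular commercial API works, and a real service would also need to find the candidate sources in the first place.

```python
import json
import re


def shingles(text, size=3):
    """Return the set of consecutive word sequences of length `size`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def overlap_score(post_json, source_text, size=3):
    """Fraction of the post's word shingles that also occur in the source.

    `post_json` is assumed to be a JSON object with a "body" field
    (a hypothetical, simplified shape for a post).
    """
    post = json.loads(post_json)
    post_shingles = shingles(post["body"], size)
    if not post_shingles:
        # Post is too short to compare.
        return 0.0
    source_shingles = shingles(source_text, size)
    return len(post_shingles & source_shingles) / len(post_shingles)
```

A score near 1.0 would suggest the post is largely copied from the source, while a score near 0.0 would suggest little direct overlap. Paraphrased or translated text would slip past a check this simple, which is one reason the paid services are worth exploring.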

@steevc offered his opinion in regard to the human element of anti-abuse, and I must say that I agree with him:

I have seen some of this heavy-handedness at times, along with unilateral decisions being made regarding people's continued ability to earn rewards. I in no way want to discount the difficulty of policing human behavior, especially when real financial incentives are involved, but a tiny minority of humans doing so in an opaque fashion is not the answer.

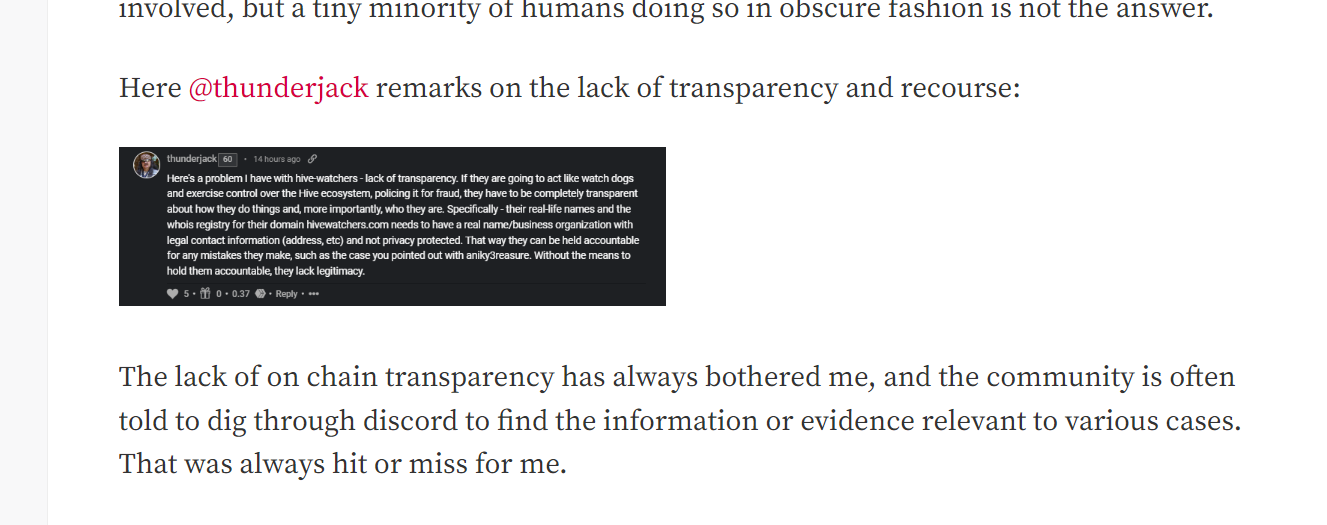

Here @thunderjack remarks on the lack of transparency and recourse:

The lack of on-chain transparency has always bothered me, and the community is often told to dig through Discord to find the information or evidence relevant to various cases. That was always hit or miss for me.

Here @hivewatcher basically alludes to the futility of their own efforts:

An infinite struggle to fight invisible users? Would these same users be invisible to a well-tuned algorithm?

I don't know enough about the individual cases referenced in the post above, but we have had an entrenched anti-abuse system in place, one that has neither changed nor innovated from what I can see. I've seen lots of bad actors get ensnared and rightly so, but I've also seen good folks get ensnared for reasons that are less clear. I'm not here to pass judgment, make accusations, or impugn the character of anyone who participates in HW (@guiltyparties, @logic, et al.). What I will say is that we need a better and more impartial system, a system with explicit rules and predetermined responses to detected abuse. Some ideas:

- When abuse is detected, an automated response is provided along with links to the detected sources.

- An automated reply system that allows the user either to provide proof of ownership or to give notice that proper citation has been added.

- A mechanism by which a warning is issued first, and subsequent violations are met with incrementally higher penalties. This would all be visible on chain, giving people the runway to improve their behavior and curators the information to tune their blacklists.

- A decay mechanism for penalties (eliminate or minimize the use of permabans). If someone publishes a certain number of compliant posts, their penalty decays at a set rate.

- AI detection that reports the probability that AI was used to generate the post, along with the estimated percentage of the post that is AI-generated.
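
The warning, escalation, and decay ideas above can be sketched in a few lines. This is a rough illustration rather than a proposed design. The penalty names, the four-step ladder, and the figure of ten compliant posts per step are all placeholders I made up for the example, and where such a record would live on chain is left open.

```python
from dataclasses import dataclass

# Hypothetical penalty ladder; index 0 means no active penalty.
PENALTY_LADDER = ["none", "warning", "reduced_rewards", "muted"]

# Assumed decay rate: compliant posts needed to step down one level.
COMPLIANT_POSTS_PER_STEP = 10


@dataclass
class AccountRecord:
    level: int = 0             # current position on the ladder
    compliant_streak: int = 0  # compliant posts since the last change


def record_violation(rec):
    """Escalate one step (capped at the top) and reset the streak."""
    rec.level = min(rec.level + 1, len(PENALTY_LADDER) - 1)
    rec.compliant_streak = 0
    return PENALTY_LADDER[rec.level]


def record_compliant_post(rec):
    """Count a compliant post; step the penalty down once the streak is met."""
    if rec.level == 0:
        return PENALTY_LADDER[0]
    rec.compliant_streak += 1
    if rec.compliant_streak >= COMPLIANT_POSTS_PER_STEP:
        rec.level -= 1
        rec.compliant_streak = 0
    return PENALTY_LADDER[rec.level]
```

Because the rules are fixed ahead of time, anyone could replay an account's history and arrive at the same penalty level. That is the kind of transparency and predictability I am after.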

Let's be honest with ourselves. We will never be able to detect or prevent all abuse. What we can do is up our detection game and provide the larger community the information and tools to participate in the policing of the chain, and rely less on empowering a few humans and all the faults that come with that. Centralizing this effort in a pseudonymous group raises all sorts of legal and ethical concerns. We don't want to centralize any sort of power, particularly one that could be used to chill speech.

So how do we move forward? As a community we need to come together to discuss the feasibility of alternatives. Whatever the solution will be, it will not be free. We'll need compute resources, paid access to APIs, etc. Could it be accomplished for the 145 HBD per day the community is currently paying for the centralized service? I'm not sure, but it's worth exploring. The real question is whether we as a community have the resolve to develop a new solution, or whether we will simply continue to criticize the current one. I feel like the technology is out there, and we have the resources to divert to such an effort.

If anyone would like to start a focus group to explore alternatives, I am willing to join. Let's determine what resources we need, both technical and financial, and get a proposal together. Even the input of the HW team could be invaluable to creating an enduring solution, and perhaps this would free them to pursue more worthwhile endeavors. Please CC anyone who might be interested in the comments below.

cc: @enforcer48, @pfunk, @antisocialist, @smooth, @azircon
