I am building polenx.com and learning along the way more about fullstack development. By building the platform alone I have to learn a lot about databases, process communication, deployment orchestration, server management and simply making stuff run.

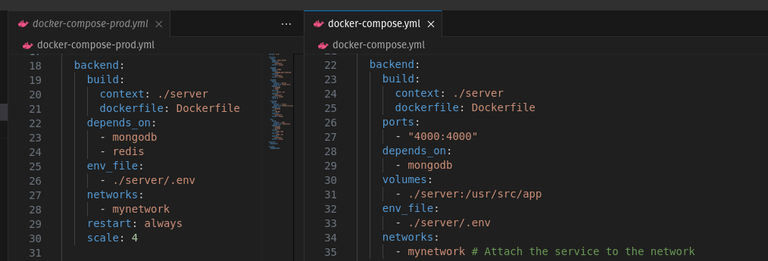

Different docker-compose for development and production

Why this post is necessary for Hive!

I decided I should document the parts of development I find interesting because a long time ago existed an initiative on this blockchain known as @utopian-io

Utopian used to sponsor posts that could drive traffic to Hive. I contributed by writing programming tutorials. I wrote so many tutorials that once in a while I still have to Google something I forgot about and still YEARS LATER I still find my own posts on Hive teaching myself how to do something I forgot about! This is simply amazing.

Sadly I can't seem to find a new initiative with the weight utopian had. When they existed one tutorial from an unknown author (like I was/am) could get dozens of HIVE and HBD as rewards if it was well written because they used to upvote good content with their huge voting power.

Well, I want Hive to gain traction, so I will try to get back to writing, but those won't be, for now, full blown tutorials like I used to make. I want to focus more on what I am doing now, that usually is a tad bit more advanced to what I used to write about back then. Let's get started!

My personal project

Follow @polenx on Hive

Polenx.com is my personal project. I am building it almost from scratch and taking care of back, front, database, hosting, testing, building, deploying, all that myself. It is a great learning experience.

Scaling a backend with Docker Compose

Scaling on docker compose is easy. I am still learning about Docker Swarm, I have used it in the past but I need to refresh my memory, so I am using the simplest solution, which is to tell docker-compose to scale the backend to 4 instances. It spins 4 containers and theoretically distributes the load evenly between them.

backend:

build:

context: ./server

dockerfile: Dockerfile

depends_on:

- mongodb

- redis

env_file:

- ./server/.env

networks:

- mynetwork

restart: always

scale: 4

But I am using sockets and nginx. Of course it wouldn't be that simple. A socket connection needs to stay connected to the same "physical" server, if nginx were to redirect each request to a different container it would break.

I tried using Redis to share the state between the containers, but still I got the channels broken for some reason.

I really expected

const pubClient = require("redis").createClient(redisUrl); // Import the Redis client

const subClient = require("redis").createClient(redisUrl); // Import the Redis client

// Use Redis adapter for Socket.IO

io.adapter(redisAdapter({ pubClient, subClient }));

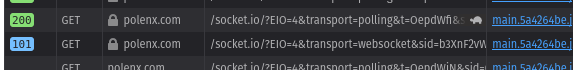

To be enough so that the channel wouldn't matter, I expected that the user could jump around the containers and because the state is on the Redis adapter then the socket connection would still work, but it doesn't. If I am using one instance only the code works fine, but as soon as I add at least one other instance the socket connection gets 400 errors because the connection ID doesn't match (which is weird, isn't? I am using Redis after all!), so I had to find another way.

Making sure that the same user connects to the same container

I started researching about what could I do, and I found an nginx configuration that would allow that to happen. The configuration is

upstream backend {

ip_hash;

server backend_1:port;

server backend_2:port;

server backend_3:port;

server backend_4:port;

}

This makes nginx distribute the connection from the user to the same container always (as long as the IP remains the same). It is an easy solution for a not intuitive problem (for me).

The result

What matters is that it works. To test it I had to connect as many devices as I could using different IP addresses. I used my mobile phone using mobile data, my tablet connected to a VPN and my computer connected to my home wifi.

With all 3 devices having different addresses I loaded up the website and waited for new data to be sent. It worked. All 3 devices got the chart and the trading history updated at the same time.

Going forward

I still want to test further and understand what is happening and why really in depth. I imagine I was trying to use Redis with a socket server the way it was intended to be used, but for some reason the server being load balanced by both Docker and Nginx was breaking it. My best assumption is that. It connects to a container, then send a new packet that unexpectedly gets sent to another random container and that is when the communication is broken.

I need to improve performance on the website, and I need to make sure the solutions I have are the best. Ideally in the future I want to change from Docker Compose to either Docker Swarm or Kubernetes, and hopefully be able to scale the application horizontally (as node.js applications should).

New features and news will be posted on the profile @polenx while more personal development posts will be made here in my personal account

Ending notes

I would like to end this post with some keywords, so that in the future when I am looking for content on what to post about I will find my own tutorial again. Not only that, but I hope that it brings new readers for Hive, and hopefully for my blog specifically :)

I am building a web3 app that is a decentralized exchange that uses the Hive blockchain as a trading engine. The solution uses JavaScript and NodeJS, it serves the backend using express and socket.io using Redis as an adapter. The database, where I index the trading history to avoid querying twice the blockchain, is ran on MongoDB using a persistent volume storage. Everything is containerized in docker, the docker containers are orchestraded by Docker Compose. I use two different Docker Composers for the dev environment, where I don't need scalability but I need hot reload, and for the production environment, where I need scalability but it just needs to build and run once.