Although there is still one COVID-positive person out of four at home, I have so far managed to get through this undetected. I hope that I can already claim victory and say that life is back to normal…

In the meantime, I had in mind several of my research articles as potential topics for today. However, they all rely on a framework for new phenomena in particle physics called compositeness, a subject that I have never really addressed in my blogs. A general introduction to composite models was therefore a natural topic for today’s blog.

Before starting, I would like to point out that for those who do not want to get too much particle physics in one shot, there is a short way to read this blog. It is indeed possible to finish this introduction and then jump directly to the last section of the blog, which is its “Too Long; Didn’t Read” (TL;DR) version. For the others, feel free to enjoy the longer version, which contains a lot of interesting information, at least from my perspective.

As discussed a few months ago in this blog, there are good motivations to consider that the Standard Model of particle physics is not the end of the story, and that a more fundamental theory of nature should be hiding right around the corner. Composite models are a very popular option for such a theory of physics beyond the Standard Model, and the idea behind them is quite simple.

A composite model comprises two sectors of particles.

- First, we have a Standard Model sector containing all known elementary particles with the exception of the Higgs boson, all of them being initially massless.

- Second, there is another sector of (much) heavier fundamental particles that are sensitive to a new strong interaction. This new strong interaction allows these elementary particles to combine and form very massive composite particles.

We are however still missing a Higgs boson, which is a problem since it has been observed. This is solved by new symmetries dictating the form of the new composite sector. These symmetries are such that a Higgs boson, much lighter than all other composite particles, naturally emerges from the theory. Another issue is that most Standard Model particles are massive (the photon and the gluons being the exceptions), so they cannot remain massless. This is solved by allowing the theory to mix particles from the Standard Model sector with composite particles, a mechanism called partial compositeness.

Let’s now investigate how all of this works in more detail, and how composite models are searched for at particle colliders such as CERN’s Large Hadron Collider.

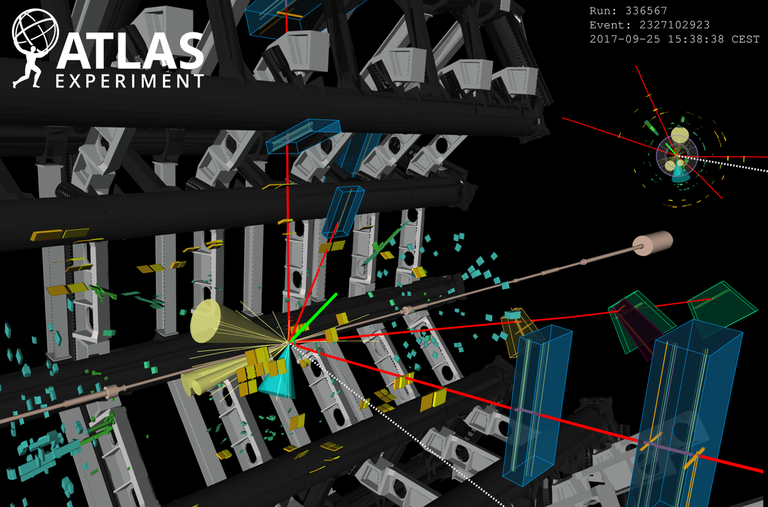

[Credits: Original image from the ATLAS collaboration (CERN)]

The hierarchy problem in a nutshell

Before digging fully into the topic, let’s briefly discuss the hierarchy problem of the Standard Model, which is the cornerstone of many theories beyond it (including composite models). The word hierarchy here refers to the two energy regimes relevant for the Standard Model: that of the masses of the mediators of the weak interaction (the W and Z bosons), and that at which gravity effects cannot be ignored (gravity is not part of the Standard Model).

The former regime is associated with the so-called electroweak scale, which corresponds roughly to an energy of 100 GeV (1 GeV being roughly the proton mass). The latter regime is related to the Planck scale, that is a scale of 10¹⁹ GeV (or 10,000,000,000,000,000,000 GeV). We thus have a clear hierarchy between these two scales, the Planck scale being 100,000,000,000,000,000 (10¹⁷) times larger than the electroweak scale.
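The size of this hierarchy can be checked with a couple of lines of arithmetic. The small Python sketch below is purely illustrative: it uses the round numbers quoted in the text (100 GeV and 10¹⁹ GeV) as exact integers, so that no floating-point rounding sneaks in.

```python
# Round orders of magnitude quoted in the text (in GeV), stored as exact integers
ELECTROWEAK_SCALE_GEV = 10**2   # ~100 GeV (W and Z boson masses)
PLANCK_SCALE_GEV = 10**19       # ~10^19 GeV (gravity can no longer be ignored)

ratio = PLANCK_SCALE_GEV // ELECTROWEAK_SCALE_GEV
orders_of_magnitude = len(str(ratio)) - 1  # number of zeros after the leading 1

print(f"Planck / electroweak = {ratio:,}")
print(f"i.e. {orders_of_magnitude} orders of magnitude")
```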

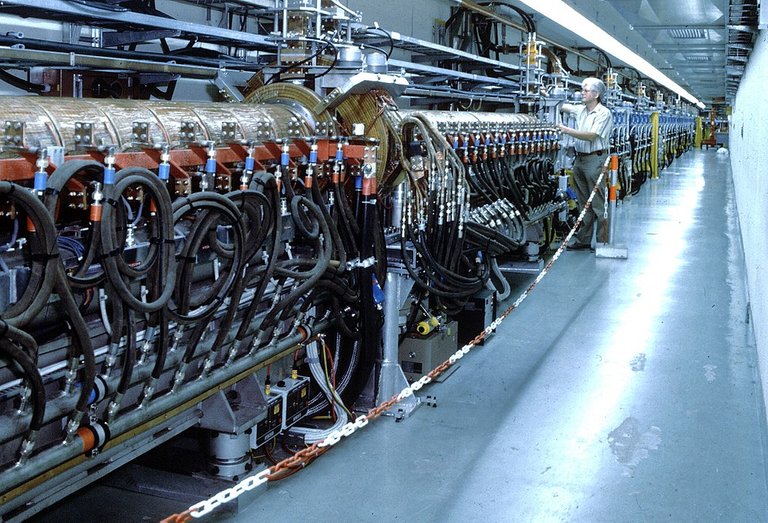

This is a problem because of the quantum nature of the Standard Model. When we aim to compute predictions for any quantity in high-energy physics, we must indeed always include what we call quantum corrections.

For instance, let’s take a single Higgs boson. This Higgs boson can convert itself into a top quark and a top antiquark, which then immediately recombine into the initial Higgs boson. In practice, the quark-antiquark pair is virtual, i.e. it has no real existence. These virtual particles are thus only an intuitive picture helping us to understand quantum field theory. However, the effect of this phenomenon can be estimated quantitatively, and such virtual effects are found to impact observable quantities.

While this sounds crazy, this is how quantum field theory works. We need to include a whole bunch of virtual effects in the calculation of any observable to get precise predictions for it. The funniest part is that data tells us that this works like a charm.

[Credits: Eric Bridiers (CC BY-ND 2.0)]

Let’s now close this parenthesis and go back to our hierarchy problem, focusing on the ‘size of the Higgs field’. This is the parameter that controls the order of magnitude of the masses of the W and Z bosons, which are of about 100 GeV (i.e. the electroweak scale). For that reason, the size of the Higgs field has to be of about 100 GeV too. However, quantum corrections do not like this much, and tend to push the size of the Higgs field up to 10,000,000,000,000,000,000 GeV (i.e. the Planck scale).

There are only two ways to avoid that. Either new physics must exist (that’s our favourite option) or there must be some unacceptable tuning of the parameters of the Standard Model.

The effects of the quantum corrections involve sums and differences of the masses of all the particles of the Standard Model. Therefore, it is possible to fine-tune all masses so that the quantum corrections cancel out almost exactly, allowing us to keep the size of the Higgs field around 100 GeV. The real issue with this possibility is that each mass must be tuned up to its 30th decimal or so. This is first of all very inelegant (and physicists like elegant theories), and moreover impossible to test experimentally (our apparatuses will never be that precise).
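To get a feeling for what such a tuning means, here is a toy Python sketch. It assumes (this is a simplification, not something stated in the text) that the quantum correction is of the order of the square of the Planck scale, (10¹⁹ GeV)², with all order-one factors dropped, and asks how precisely a “bare” parameter must cancel it to leave (100 GeV)². Python’s `decimal` module is used because ordinary floating-point numbers cannot even hold the result of the cancellation. In this toy, 34 digits must match, the same ballpark as the roughly 30 decimals mentioned above.

```python
from decimal import Decimal, getcontext

getcontext().prec = 60  # plenty of significant digits for this toy cancellation

# Toy assumption: the quantum correction is of order (Planck scale)^2, in GeV^2
correction = Decimal(10) ** 38     # (10^19 GeV)^2
target = Decimal(10) ** 4          # (100 GeV)^2, the value we want to end up with

# The "bare" parameter must cancel the correction almost exactly
bare = target - correction
assert bare + correction == target  # exact with 60 significant digits

# Number of leading digits that must match between |bare| and the correction
matching_digits = (correction / target).adjusted()
print(f"digits that must be tuned: {matching_digits}")

# Standard double-precision floats (about 16 significant digits) lose the result
print("float64 result:", float(bare) + float(correction))
```

The last line prints 0.0: with only about 16 significant digits, the tiny leftover (100 GeV)² is completely swallowed by the two gigantic terms.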

We thus prefer to go with the first option: there must be some new particles lying just around the corner, which modify how quantum corrections affect the size of the Higgs field. The net result of their presence is that the size of the Higgs field is kept nicely around 100 GeV. In the following, I explain how this works in the framework of composite models.

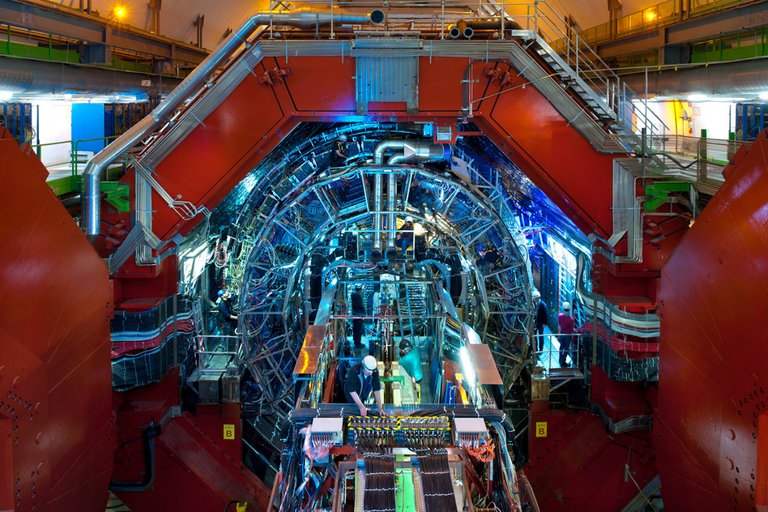

[Credits: CERN]

From the hierarchy problem to composite theories

In order to explain how composite models arise, let’s go back to the Higgs boson example introduced above. We mentioned the existence of quantum corrections to some of its properties, and these corrections originated (in our example) from a pair of virtual top-antitop quarks. Let’s now assume that we want to actually calculate these quantum effects.

This amounts to considering all possible configurations for the virtual particles, and in particular all possible values of the energy and momentum that they carry. These virtual particles can thus be either soft (they carry a small amount of energy) or hard (they carry a large amount of energy). Mathematically, this means that we integrate (or sum) over all options for the energy and momentum of the virtual particles, from 0 to the Planck scale.

In particular, we must consider cases in which the energy of the virtual particles reaches the Planck scale. These cases drive the hierarchy problem, as they make the quantum effects depend on the Planck energy scale. This is precisely what sends the size of the Higgs field to the gigantic value of 10,000,000,000,000,000,000 GeV.
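The dominance of the hardest virtual particles can be illustrated numerically. In the toy Python sketch below, the sum over virtual energies is modelled by the integral of k dk from 0 to a cutoff, evaluated with a simple midpoint rule. This integrand is an assumption, chosen only because it grows like the square of the cutoff, as the Higgs corrections do. Two things stand out: the upper half of the integration range provides three quarters of the result, and doubling the cutoff multiplies the result by four, so the largest energies considered always dominate.

```python
def toy_loop_integral(cutoff, lower=0.0, steps=100_000):
    """Midpoint-rule estimate of the toy integral of k dk between `lower` and `cutoff`.

    k plays the role of the energy carried by the virtual particles; the exact
    answer is (cutoff**2 - lower**2) / 2, i.e. it grows like the cutoff squared.
    """
    dk = (cutoff - lower) / steps
    return sum((lower + (i + 0.5) * dk) * dk for i in range(steps))


cutoff = 1.0  # arbitrary units; only ratios matter here
total = toy_loop_integral(cutoff)
hard_part = toy_loop_integral(cutoff, lower=cutoff / 2)  # energies above cutoff/2

print(f"fraction from the upper half of the range: {hard_part / total:.3f}")
print(f"effect of doubling the cutoff: x{toy_loop_integral(2 * cutoff) / total:.3f}")
```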

In composite models, this is solved through the existence of an intermediate energy regime at which the content of the theory changes. This energy scale (the compositeness scale) is usually taken not too far from the energies typically probed at CERN’s Large Hadron Collider. Above it, the Standard Model is no longer the right description of nature, and a different theory takes over. There is therefore no reason to compute, within the Standard Model, quantum corrections to the size of the Higgs field from virtual particles with energies equal to or larger than the compositeness scale.
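The benefit of lowering the energy at which the theory changes can be quantified with a rough estimate. The Python sketch below assumes a commonly quoted one-loop estimate of the top-quark contribution to the Higgs mass parameter (which sets the size of the Higgs field) with a hard energy cutoff Λ, namely -3 y_t² Λ² / (8π²), with a top Yukawa coupling y_t ≈ 1. Neither the formula nor this value appears in the text above, and factors of order one depend on conventions. The sketch compares a cutoff at the Planck scale with two hypothetical compositeness scales of 1 and 10 TeV, measuring the correction in units of (100 GeV)².

```python
import math

TOP_YUKAWA = 1.0               # assumption: y_t ~ 1
ELECTROWEAK_SCALE_GEV = 100.0  # ~100 GeV, as in the text


def top_loop_correction(cutoff_gev, yukawa=TOP_YUKAWA):
    """Rough one-loop top-quark correction to the Higgs mass parameter (GeV^2),
    using a hard cutoff: -3 y_t^2 cutoff^2 / (8 pi^2)."""
    return -3.0 * yukawa**2 * cutoff_gev**2 / (8.0 * math.pi**2)


def relative_size(cutoff_gev):
    """Size of the correction compared with (100 GeV)^2."""
    return abs(top_loop_correction(cutoff_gev)) / ELECTROWEAK_SCALE_GEV**2


scenarios = {
    "Planck scale (1e19 GeV)": 1e19,
    "hypothetical compositeness scale (10 TeV)": 1e4,
    "hypothetical compositeness scale (1 TeV)": 1e3,
}
for label, cutoff in scenarios.items():
    print(f"{label:45s} correction / (100 GeV)^2 ~ {relative_size(cutoff):.1e}")
```

With the Planck-scale cutoff, the correction overshoots the electroweak scale by more than 30 orders of magnitude, whereas with a cutoff of a few TeV it is only a few hundred times larger at most, a far milder tuning.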

This setup has nothing surprising in it. It mimics (and generalises) the way in which the strong force (one of the three fundamental interactions included in the Standard Model) works. In the quantum theory of the strong interaction, light particles called quarks and antiquarks combine at a given confinement scale to form protons, neutrons and many other composite particles.

In composite models, we do the same, but after scaling all masses and energy scales up by some factor, so that they lie above the energy regime probed so far (and are thus consistent with current data, which does not exhibit any sign of compositeness).

[Credits: CERN]

New heavy fundamental particles are thus assumed to exist at energy scales not probed so far, and these particles combine to form a full zoo of new composite objects. So far so good, but those objects are heavy while the Higgs boson is light (its mass is 125 GeV, lighter than the top quark, the heaviest particle of the Standard Model).

This is not a real problem, as illustrated by the quantum theory of the strong interaction (traditionally called quantum chromodynamics). The latter predicts the existence of light particles called pions: the symmetries underlying the theory and their properties ensure the existence of a few particles much lighter than everything else.
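For the numerically inclined, the lightness of the pions is easy to check against measured hadron masses. The values in the Python sketch below are approximate, rounded Particle Data Group masses (in MeV) typed in by hand for illustration; the snippet simply sorts them and expresses each one as a fraction of the proton mass.

```python
# Approximate measured masses in MeV (Particle Data Group values, rounded)
HADRON_MASSES_MEV = {
    "neutral pion": 135.0,
    "charged pion": 139.6,
    "rho meson": 775.3,
    "proton": 938.3,
    "neutron": 939.6,
}

proton = HADRON_MASSES_MEV["proton"]
for name, mass in sorted(HADRON_MASSES_MEV.items(), key=lambda item: item[1]):
    print(f"{name:13s} {mass:7.1f} MeV  ({mass / proton:.2f} x proton mass)")
```

The pions come out at roughly 15% of the proton mass, well below the other composite states listed.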

It is thus sufficient to implement a similar symmetry mechanism in composite models to get a few particles lighter than any other new composite particle. We hence equip the new part of the theory with symmetry properties similar to those of quantum chromodynamics (similar, but not fully identical, as there are experimental constraints).

We therefore end up with a bunch of new, very heavy elementary particles that form very heavy composite particles, with the exception of a few lighter objects. One of these light particles is the Higgs boson, thus restoring agreement with data (the Higgs boson has been observed, whereas the theory started without any Higgs boson).

Searching for composite models at particle colliders

The above framework allows for a theory featuring a light Higgs boson, in agreement with data. But is it truly the observed Higgs boson? Do we really agree with data?

The answer is “not exactly”. Composite Higgs bosons have properties slightly different from those of the Higgs boson predicted in the Standard Model of particle physics. This thus offers an indirect way to test compositeness with data, and to verify how much compositeness is allowed in the Higgs boson.

Today, the properties of the Higgs boson seem to be in good agreement with the expectations of the Standard Model. However, the error bars are still large enough to leave room for new phenomena to hide. This implies that the Higgs boson could be a composite object emerging from a theoretical framework such as the one described above, without contradicting any crucial observation.

In order to reach a more conclusive statement on this option, future particle collider projects are needed, so that the properties of the Higgs boson can be pinned down better.
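How large can such deviations be? As an illustration only, the Python sketch below assumes the minimal composite Higgs model based on the SO(5)/SO(4) symmetry breaking pattern (the text does not commit to a specific model). In that model, the Higgs coupling to the W and Z bosons is rescaled with respect to the Standard Model by √(1 - v²/f²), where v ≈ 246 GeV is the electroweak vacuum expectation value and f is the decay constant of the new strong sector, closely related to the compositeness scale. The values of f used below are hypothetical.

```python
import math

V_EW_GEV = 246.0  # electroweak vacuum expectation value, ~246 GeV


def hvv_coupling_modifier(f_gev):
    """Higgs coupling to W/Z relative to the Standard Model, sqrt(1 - v^2/f^2),
    as in the minimal SO(5)/SO(4) composite Higgs model (assumed here)."""
    xi = (V_EW_GEV / f_gev) ** 2
    if xi >= 1.0:
        raise ValueError("f must be larger than v")
    return math.sqrt(1.0 - xi)


for f in (500.0, 1000.0, 2000.0):  # hypothetical values of f in GeV
    deviation = 1.0 - hvv_coupling_modifier(f)
    print(f"f = {f:6.0f} GeV -> coupling reduced by {100 * deviation:.1f}%")
```

In this sketch, deviations of a few per cent for f around 1 TeV, shrinking quickly as f grows, are the kind of effects that more precise Higgs measurements aim to detect.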

There are however additional ways to get handles on composite models.

[Credits: CERN]

As said above, light composite particles should emerge from the theory. One of them is the Higgs boson, but in realistic composite models there is always at least one other scalar particle (i.e. a spinless particle, like the Higgs boson), much lighter than all other composite states in the theory.

This extra particle is actually so light that CERN’s Large Hadron Collider cannot find it, because of the overwhelming Standard Model background. However, several proposals for future collider projects could play a useful role here. This possibility has been discussed in one of my recent scientific publications, and will be the topic of a future blog.

Complementarily, we can aim to observe composite resonances directly. In the introduction to this blog, I mentioned that the masses of the Standard Model particles come from the mixing of heavy composite particles with massless Standard Model particles. One of the Standard Model particles is special by virtue of its large mass: the top quark. It is therefore widely considered a nice portal to new phenomena.

Composite resonances mixing with the top quark are generically called vector-like top partners, and they consequently raise a lot of hope for observing new phenomena. Their mass should lie in the energy regime probed at the Large Hadron Collider, and they are thus prime candidates for many experimental searches (see for instance here or there for a CMS and an ATLAS example respectively). Unfortunately, there is no signal at the moment.

Finally, it is worth mentioning that one of the numerous composite resonances could be a dark matter particle, so that it could be probed both through cosmology and collider physics. This is an option that I explored with my collaborators in this recent publication, and that will again be the topic of a future blog.

TL;DR

Compositeness as a viable possibility for new phenomena

This blog was dedicated to a popular class of extensions of the Standard Model of particle physics: composite models.

In those models, the particle content of the theory is divided into two. We have a first bag with all particles of the Standard Model with the exception of the Higgs boson. Those particles are all initially massless. We next have a second bag with new fundamental particles, much heavier than any Standard Model particle. These are subject to a new strong fundamental interaction.

As a consequence of this new force, the new fundamental particles form composite states, in the same way that quarks and antiquarks combine to form protons, neutrons, pions, kaons, etc. It is worth noting that among these, pions and kaons are special because they are much lighter than any other composite object made of quarks and antiquarks.

Mimicking this behaviour with the new strong force, we can end up with a bunch of very heavy composite particles, plus a few lighter ones. Among these lies the Higgs boson, which we need since it has been observed at CERN’s Large Hadron Collider. In addition, we need a mechanism to explain the masses of the Standard Model particles. This is achieved by mixing composite objects with Standard Model ones.

While all of this forms a very elegant theoretical framework, the final question is whether it is viable. There is a lot of data, and there are thus strong constraints on composite frameworks.

First of all, the properties of a composite Higgs boson are slightly different from those in the Standard Model. However, the uncertainties on current measurements are large enough to allow for such deviations.

Secondly, we can try to observe some of the composite particles directly. The Large Hadron Collider is trying very hard to do so, looking in particular for those composite particles that help to explain the large mass of the top quark. In addition, some of the composite objects could explain dark matter, and could thus be constrained both by particle accelerator experiments and by cosmology (cosmic ray and dark matter direct detection experiments).

As can be imagined from the above, there is a lot of ongoing theoretical and experimental activity trying to understand how composite models could be viable given data (and theory too). This is quite exciting, and it is clear that we will get more insights on this in the medium term, with the help of all the new results that are expected to come.

Thanks a lot for reading this far, and feel free to comment and give any feedback on this blog. For those interested in the actual particle physics studies ongoing on Hive, see you tomorrow for the third episode of our particle physics #citizienscience project!