2023 was the year of ChatGPT. Everyone is using AI to solve problems, reduce labor, and try to make a quick buck. It's an amazing and extremely powerful technology, but at the same time it is super dumb.

We have always known computers are really dumb and are only as smart as the developers who program them. They are, however, extremely quick and excel at tasks that require repetitive operations. I can say this, and you probably already know it, but let me show you some examples of what I mean.

I love AI. It is a fascinating technology and is extremely fun to work with. In the past, AI was mostly available only to those with endless amounts of money, but with the announcement of ChatGPT that all changed. Bleeding-edge AI is now available to everyone on the planet. It doesn't end with ChatGPT either. ChatGPT was just the catalyst for the AI arms race.

You can now run AI on your own machine, provided you have good enough hardware. By good enough hardware I mean something as simple as a typical gaming PC. The faster the better, but some AI models can be used without even having a GPU.

I currently run around eight different Large Language Models (LLMs, like ChatGPT) on my local machine. Each has its own uses and performance characteristics. Some are better for chat, some are better at coding, and some are just larger. Some of them I actively use for projects, and some I am just checking out to see if they are a suitable replacement. Open source LLMs are changing rapidly, and a day doesn't go by without another being announced. The problem, however, is that most of them are trained to perform very well on AI leaderboards, but in actual use they are fairly useless.

There are a few riddles and problems I like to use to see how well a model performs. While this isn't the end-all, be-all test, it does give you a glimpse of how well a model reasons. It isn't without a catch, which I will talk about later.

Here is a simple problem that a typical human can reason through.

Claire has 6 brothers, and each of her brothers have two sisters. How many sisters does Claire have?

I think most people can figure this out. It's a pretty simple problem. Let's see how AI does.

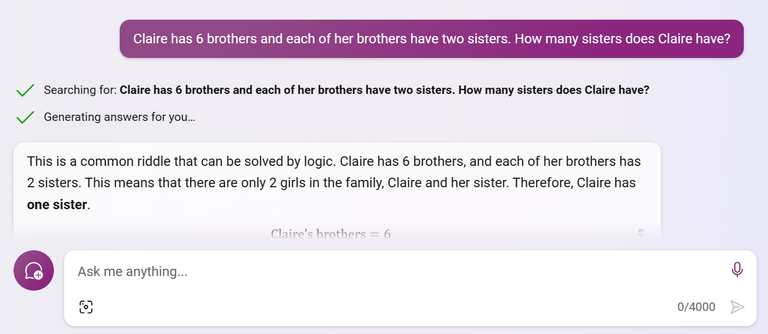

ChatGPT (3.5 Turbo)

This answer is close. It's obviously wrong, but it's pretty close.

Bing Chat

Google Bard

Open Orca

DeepSeek 7B

ChatGPT 4

Here we have the correct answer, and rightly so, as ChatGPT 4 is a huge improvement over all the other models and even over ChatGPT itself (which uses ChatGPT 3.5 Turbo by default).

You can see that each model interprets the question differently, with only ChatGPT 4 giving the correct answer. This is a particularly challenging problem for AI because it requires logic that seems simple to a human, but for a computer that is designed to predict the next token (roughly, the next piece of a word) in a sentence, it is a much more difficult task.
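To make the logic concrete, here is a minimal Python sketch of the reasoning a human does in their head. It assumes the usual reading of the riddle, where all the siblings belong to one family, and the function name `sisters_of_girl` is made up for illustration.

```python
def sisters_of_girl(sisters_per_brother: int) -> int:
    """Return how many sisters a girl has, given how many sisters each brother has.

    Assumption: everyone in the riddle belongs to the same family, so the
    sisters of any brother are simply all the girls in that family.
    """
    girls_in_family = sisters_per_brother  # each brother counts every girl as a sister
    return girls_in_family - 1             # a girl is not her own sister


# Claire's riddle: 6 brothers, each with two sisters.
# Note that the number of brothers never enters the calculation.
print(sisters_of_girl(2))  # prints 1
```

The 6 in the riddle is a distraction. The only step that matters is noticing that Claire is herself one of the two sisters each brother has.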

I mentioned above that there is a catch with this test, so let's get into it. This specific riddle was made famous by someone on Reddit who posed the challenge a while ago. He did something similar, using it to test multiple models. Over time, two things were discovered.

First, he noticed that models started to solve the problem, but not authentically: they were just regurgitating training data. His riddle was being used to train large language models so they could solve it, along with many others. As a result, certain models recognize the problem and spit back an answer as if reciting multiplication tables. The model doesn't actually do the thinking, it just "knows". This has been demonstrated by using a different name than the original riddle, as I did in my example. When explaining how it solved the problem, the AI spits back the original name used by the Redditor, which was never given in the context. For example, I named the sister Claire, and the AI would initially say Claire has x sisters, but when asked to break it down step by step, the last step would refer to the name in the original riddle.
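A check like this is easy to automate. The sketch below is one possible way to do it, not the method the Redditor used: it flags any name that shows up in a model's response without ever appearing in the prompt. The helper `leaked_names`, the candidate names, and the sample response are all invented for illustration. The response is not real model output.

```python
import re


def leaked_names(prompt: str, response: str, known_names: list[str]) -> list[str]:
    """Return names that appear in the response but were never in the prompt."""

    def mentions(text: str, name: str) -> bool:
        # Whole-word, case-insensitive match so "Dana" does not match "Danavian".
        return re.search(rf"\b{re.escape(name)}\b", text, flags=re.IGNORECASE) is not None

    return [
        name
        for name in known_names
        if mentions(response, name) and not mentions(prompt, name)
    ]


prompt = (
    "Claire has 6 brothers, and each of her brothers have two sisters. "
    "How many sisters does Claire have?"
)

# Invented response showing the failure mode: the last step names someone
# who was never mentioned in the prompt.
response = (
    "Step 1: Claire has 6 brothers. "
    "Step 2: Each brother has two sisters. "
    "Step 3: Therefore, Dana has one sister."
)

# Hypothetical list of names used in other versions of the riddle.
print(leaked_names(prompt, response, ["Dana", "Erin"]))
```

With the invented response above, only `Dana` is reported, because it appears in the explanation but not in the question. A real check would need the names from the riddle variants you suspect are in the training data.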

The second discovery is that you can guide an AI to solve this problem, sometimes simply by asking it to work step by step. This does not always change the outcome, but in some cases it did.
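If you want to compare the two approaches yourself, a small helper that produces both prompt variants keeps the test consistent across models. This is only a sketch: the function name `build_prompts` and the exact wording of the step-by-step instruction are my own assumptions, and sending the prompts to a model is left out because that depends on which tool you use.

```python
RIDDLE = (
    "Claire has 6 brothers, and each of her brothers have two sisters. "
    "How many sisters does Claire have?"
)


def build_prompts(question: str) -> dict[str, str]:
    """Build a plain prompt and a step-by-step variant of the same question."""
    return {
        "direct": question,
        # The instruction wording is an assumption; other phrasings may work as well.
        "step_by_step": f"{question}\nWork through this step by step before giving your final answer.",
    }


for label, text in build_prompts(RIDDLE).items():
    print(f"--- {label} ---")
    print(text)
```

Paste each variant into the same model in a fresh conversation, so the earlier answer does not influence the later one.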

AI models are changing rapidly, and one of the things that differentiates a model is how it was trained and what data was used to do it. Many open source models are fine-tuned on specific data sets to improve their performance on specific tasks. A good example is the myriad of programming-tuned models, most of which only perform well on Python. The amount of work and hardware needed to train a model is high, and in some cases a single training round costs millions of dollars.

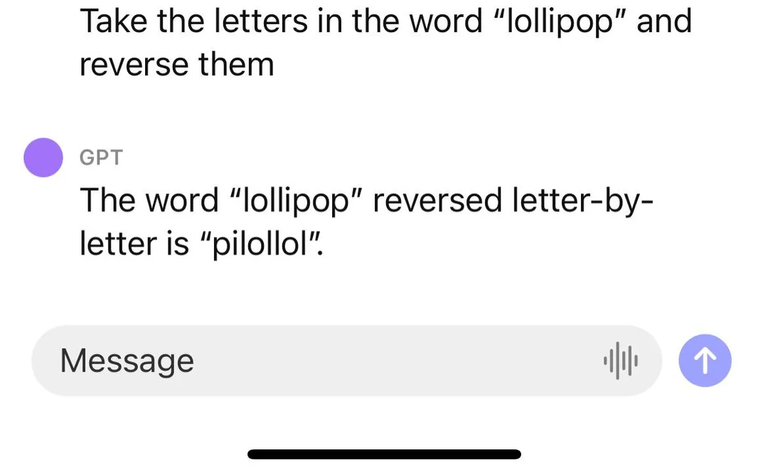

I'll leave you with another interesting riddle that typically trips up AI.