Elon Musk recently described Grok 3 as "scary smart", but he's known to hype things up when it suits him. So far, few people have had access to xAI's new model for intensive testing, but it looks like those who did have been impressed, especially since xAI has been in the market for only about a year. Here's the presentation, if you want to see it.

It looks like Grok 3 and its mini version outperform all competitors in the benchmarks xAI showed in the presentation, and that's while the full model is still in beta and hasn't even been trained as long as the mini version.

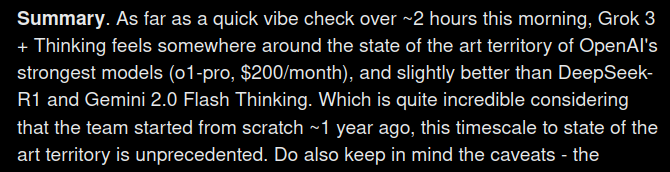

A more informed opinion comes from Andrej Karpathy on X, someone whose AI tests and opinions I've found relevant in the past. He had access to Grok 3 and compared it to a number of other models on a series of quick but relevant tests.

This is his summary at the end:

OpenAI recently launched o3-mini, and the full o3 will likely follow soon. If o3 is better than o1 (likely), then OpenAI will still have an edge over xAI, but the gap is slowly closing if nothing changes. The charts xAI presented show a remarkable growth rate for Grok compared to its rivals.

The Race for AI Data Centers

But I want to talk a bit about their infrastructure: the 200,000-GPU cluster in Memphis, half H100s and half H200s, which is still the largest AI-focused data center cluster in the world.

The obvious question is: doesn't Google have bigger data centers? The answer is yes, but not as a single cluster with tightly interconnected GPUs. Google has huge data centers on the same sites, but they are spread across multiple buildings, so the hardware is not as tightly connected.

So why clusters? Aren't distributed data centers fine for AI? They are perfectly fine for inference (i.e., using compute in production to produce results), but not for training. For training, it's much better to have one big cluster, to cut training time as much as possible.

That's why all the US tech giants plan to build clusters much bigger than xAI's this year, somewhere in the 400-600k GPU range.

If you think that's insane, well, xAI's next step is a cluster of 1 million GPUs (5x the size of the current Memphis cluster). In case anyone wanted to short Nvidia, think twice.

Challenges for New Data Centers

(apart from high-end GPU supplies, I mean)

Cooling

Obviously, a data center like the one in Memphis isn't air-cooled anymore. They use liquid cooling, which seems to be the way to go, unless a more revolutionary cooling method is invented in the meantime.

Power Requirements

This one is tough. If I'm not mixing things up, xAI's Memphis cluster needs as much power as an entire small town. Since bigger clusters are on the way, their power needs will be bigger too.
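To get a feel for the numbers, here is a back-of-envelope sketch. It assumes each H100/H200 (SXM version) draws up to about 700 W at full load and ignores everything else (cooling, CPUs, networking) unless you set the hypothetical `overhead` multiplier. These are not official xAI figures.

```python
# Back-of-envelope GPU power estimate (a sketch, not official xAI figures).
# Assumption: each H100/H200 SXM GPU draws up to ~700 W at full load.
# Assumption: `overhead` is a hypothetical multiplier for cooling, CPUs,
# networking, etc.; 1.0 means "GPUs only".

GPU_WATTS = 700


def cluster_power_mw(num_gpus: int, watts_per_gpu: float = GPU_WATTS,
                     overhead: float = 1.0) -> float:
    """Return the estimated peak power draw of a cluster in megawatts."""
    return num_gpus * watts_per_gpu * overhead / 1_000_000


if __name__ == "__main__":
    for gpus in (200_000, 1_000_000):
        print(f"{gpus:>9,} GPUs -> ~{cluster_power_mw(gpus):.0f} MW (GPUs only)")
```

For 200,000 GPUs this gives about 140 MW for the GPUs alone, and about 700 MW for a 1-million-GPU cluster, before any overhead is added.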

The biggest bottleneck is the power grid, i.e., transmitting the electricity. That's why new clusters usually partner with energy companies to have power generation built very close to the data centers.

People say small nuclear power plants will be needed, but they take a long time to build, and AI moves much faster than that. So they'll have to find other solutions.

Power Fluctuation

The power fluctuation between the times when GPUs run at 100% and when they are idle is extreme, as the xAI team discovered at their cluster. The power generators apparently can't handle it. Their solution was to give the GPUs dummy tasks when they would otherwise be idle, keeping them busy all the time so that the energy demand stays relatively constant.
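The idea can be illustrated with a toy model. This is a hypothetical sketch, not how xAI actually does it: utilization is a number between 0 and 1 per time step, and whenever real work drops below an assumed `floor`, dummy work fills the gap. The function names and the sample numbers are made up for illustration.

```python
# A toy model of "keep the GPUs busy" power smoothing (hypothetical sketch).
# Utilization is a fraction 0.0-1.0 per time step; the `floor` parameter is an
# assumed minimum utilization we keep the cluster at by adding dummy work.

from typing import List


def filler_work(real_util: List[float], floor: float) -> List[float]:
    """Dummy utilization to add at each step so the total never drops below floor."""
    return [max(0.0, floor - u) for u in real_util]


def smoothed_util(real_util: List[float], floor: float) -> List[float]:
    """Total utilization (real work + dummy work) seen by the power supply."""
    return [u + f for u, f in zip(real_util, filler_work(real_util, floor))]


def swing(util: List[float]) -> float:
    """Largest difference between peak and lowest load over the period."""
    return max(util) - min(util)


if __name__ == "__main__":
    # Made-up load trace: heavy compute with a couple of near-idle steps.
    real = [1.0, 1.0, 0.1, 1.0, 0.2, 1.0]
    smooth = smoothed_util(real, floor=0.9)
    print("swing without filler:", round(swing(real), 2))
    print("swing with filler:   ", round(swing(smooth), 2))
```

The trade-off is visible right in the model: the swing the generators see shrinks from 0.9 to 0.1 of full load, but every unit of filler is electricity spent on work that produces nothing.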

Much of what I included in the data center section comes from this 5+ hour Lex Fridman podcast, which I listened to a little at a time over a week or so. Feel free to do the same; there's much more information there.

Posted Using INLEO