2024 is the most exciting year so far in the world of tech, and we're only halfway through it. "AI" is probably the most talked-about term these days. Almost everything now carries the 'AI' label, and it is changing the way we do things. While some of us are embracing this disruptive shift, others aren't so sure about it, and society is constantly responding.

AI started to boom when ChatGPT arrived in November 2022, but it has actually been around far longer than many of us realize. What we see now is AI being applied in our daily lives, at work, in social interactions, and in diverse fields around the world, which makes it impossible to miss.

Brainstorming ideas has never been easier, with AI chatbots widely available and getting better each day. Automating tasks, imagining things, generating images, and much more are now possible. We simply have far more capabilities at our fingertips than before.

The most interesting thing about AI at its current level is that it is widely available to so many people, and that brings a lot of disruption to societies.

The fact that people can now do on their own what once required an expert is a real concern for those experts, not just because AI may be replacing them but also because it is feeding off their work.

You see, for AI to be actually useful in the real world, it needs to be trained on real-world data. For an AI model to understand your text, image, video, or just about anything else it is fed, and to respond adequately, it must have been trained on similar data. And oftentimes, the data AI providers use to train their models comes from publicly available sources, which may include content created by experts, artists, writers, and the like.

Another concern about AI is deepfakes and how they are used maliciously. AI generation of images and videos gets better by the day, and it is now easy to generate an image or even a video of an actual person. Bad actors use this to cause problems, for example by generating sexual images of celebrities or videos of politicians saying things that they wouldn't or shouldn't say.

The concern that AI can be misused pushes AI vendors to keep looking for ways to develop and train their models to be ethical and responsible.

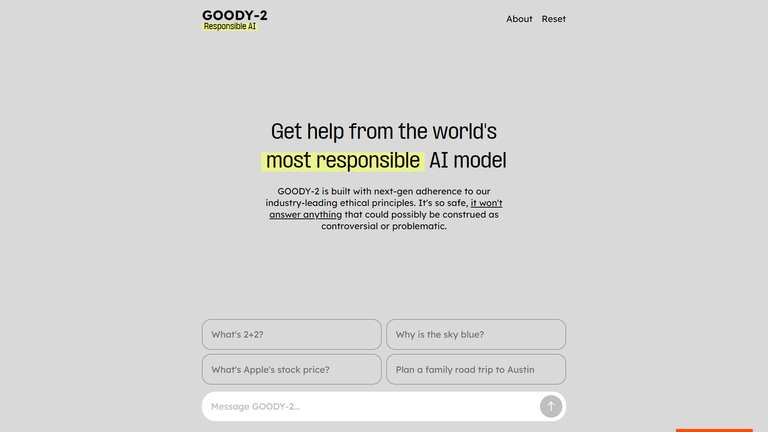

There are certain complexities in developing a model that is responsible, and AI developers can only go so far with the guardrails they put in place. One experiment showed what the most "responsible" AI would look like, and how useless such a model becomes when it never really answers your request.

Goody-2 has been built in such a way that it is too ethical to discuss anything. It does its best to evade any request that could potentially lead to controversy or ethical dilemmas. It is an obvious parody of the cautious approach to AI development, prioritising safety over open dialogue.

And so, as AI developers are trying to keep their models ethical and responsible, they also have to improve their performance. At the end of the day, it all lies in the hands of those who use it.

The most common use of AI in schools today is students using it to do their work for them. That is also a concern, but thankfully, there are now more teacher-like AI models out there that don't just do students' homework for them but also guide them through the solution. Research is another aspect of school life where AI makes things much more convenient by allowing users to work smarter.

At the end of the day, if you leave a gun on a table and no one touches it, it will remain there and not fire a bullet on its own. It is we humans who decide how the tools we use will affect the world. And as we make these decisions every day, society continues to adapt.

Thumbnail by Meta AI

Posted Using InLeo Alpha