The Blocksize Debate is one of the biggest issues in crypto. The reason for this is simple: scaling is the biggest issue in crypto, because inefficiency is the biggest issue in crypto.

- Inefficiency (thousands of servers running the same code) leads to small blocksize.

- Small blocksize leads to expensive transactions.

- Expensive transactions lead to crippled business models and high overhead.

- High overhead leads to limited adoption.

- Limited adoption leads to less growth and lower token value.

- Lower token value leads to poor user retention.

- The struggle is real.

How scalable is Hive?

- Hive produces blocks every 3 seconds, with a max size of 65KB.

- That's 13MB per ten minutes.

- Bitcoin processes more than 1MB per ten minutes and basically operates at maximum capacity 24 hours a day.

- Meanwhile, Hive almost never operates at maximum capacity.
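For anyone who wants to check the napkin math above, here is a minimal sketch. The block interval and max block size are the figures quoted in this post; the Bitcoin figure is just the "more than 1MB" lower bound, so the ratio is a rough upper estimate and not a measurement.

```python
# Figures quoted in the post.
BLOCK_INTERVAL_S = 3      # Hive block time in seconds
MAX_BLOCK_KB = 65         # Hive max block size in KB
WINDOW_S = 10 * 60        # ten minutes, the usual Bitcoin block interval

blocks_per_window = WINDOW_S // BLOCK_INTERVAL_S
hive_mb_per_window = blocks_per_window * MAX_BLOCK_KB / 1000

# Assumption: treat Bitcoin as exactly 1 MB per ten minutes (a lower bound).
bitcoin_mb_per_window = 1.0
capacity_ratio = hive_mb_per_window / bitcoin_mb_per_window

print(f"{blocks_per_window} blocks -> {hive_mb_per_window} MB per ten minutes")
print(f"roughly {capacity_ratio:.0f}x Bitcoin's raw capacity, at most")
```

Raw capacity is only half the story, of course. The number says nothing about whether nodes can actually keep up when every one of those blocks is full.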

Lots of people around here (even witnesses, which I find shocking) often say things like, "If Hive blocks fill up, we can just increase the blocksize." Let me be blunt. This is a ridiculous sentiment. Like, it's absolutely absurd to make a claim like this.

Hive couldn't even handle the bandwidth of Splinterlands bots gaming the economy. The chain became massively unstable, and most of those transactions were moved to a second layer. Now those operations are no longer forwarded to the main chain (and that's fine). There's a lot to be learned from this situation.

If Hive cannot operate consistently at maximum capacity 24 hours a day, 7 days a week, then obviously talking about increasing the blocksize like it's a casual nothing situation is... well... quite frankly embarrassing for the person speaking (if they are a developer; noobs who don't know any better get a free pass).

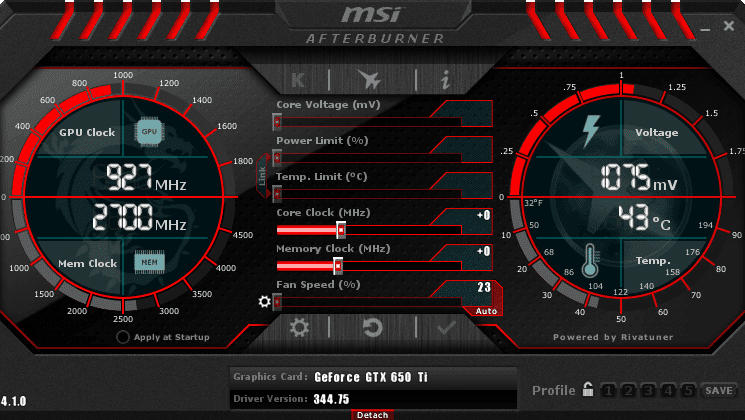

Suddenly I'm reminded of overclocking a CPU.

Have you ever overclocked a CPU before?

There are basically two variables to consider during this process.

- Increase the clock cycle (Hz) of the CPU to increase the speed.

  This increases volatility within the system and lowers stability.

- Increase the voltage of the CPU.

  This lowers volatility & increases stability but creates massive heat.

Simple and elegant.

Most CPUs these days are underclocked by design so they have a lower chance of failing and a longer lifespan. But in most cases, the user can get a nice heatsink and increase the megahertz until the system becomes unstable and gives the classic Blue Screen of Death. Then, when this happens, the user increases voltage to re-stabilize the system. This process is repeated (while monitoring heat output at 100% usage) until the user is satisfied that they got the CPU working at an optimal level. There's a big difference between water cooling (which I've never been brave enough to try) and a crappy stock heat sink that you get for free with the CPU. Always spend at least $30 on a nice heatsink... it's worth it.
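The tuning loop described above can be sketched as a toy simulation. To be clear, everything in it is made up for illustration: the stability rule, the heat formula, the step sizes, and the limits are assumptions, not numbers from any real CPU.

```python
def is_stable(mhz: int, millivolts: int) -> bool:
    # Toy rule (assumption): 3800 MHz is stable at stock voltage, and every
    # extra 25 mV buys another 100 MHz of stable headroom.
    return mhz <= 3800 + (millivolts - 1200) * 4


def temperature_c(mhz: int, millivolts: int) -> float:
    # Toy rule (assumption): heat grows with frequency and voltage squared.
    volts = millivolts / 1000
    return 20 + 0.01 * mhz * volts ** 2


def overclock(mhz=3600, millivolts=1200, step_mhz=100, step_mv=25,
              max_temp_c=85.0, max_mv=1400):
    """Raise the clock step by step; add voltage only when a step is unstable.

    Stops and returns the last good (mhz, millivolts) pair once the next
    step would run too hot or cannot be stabilized under the voltage cap.
    """
    while True:
        next_mhz, next_mv = mhz + step_mhz, millivolts
        # "Blue screen": bump voltage until stable or the cap is reached.
        while not is_stable(next_mhz, next_mv) and next_mv < max_mv:
            next_mv += step_mv
        too_hot = temperature_c(next_mhz, next_mv) > max_temp_c
        if too_hot or not is_stable(next_mhz, next_mv):
            return mhz, millivolts
        mhz, millivolts = next_mhz, next_mv


final_mhz, final_mv = overclock()
print(f"settled at {final_mhz} MHz and {final_mv} mV")
```

The thing to notice is that the clock is never the limiting factor on its own. The loop always ends because of heat or the voltage cap, which is the whole point of the analogy.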

In many cases I would increase the megahertz of my CPU without even increasing the voltage. The CPUs I've bought in the last ten years were all able to overclock without even risking damage via overheating. I could have easily pushed them a bit farther, but is it really worth eking out another 10% at the risk of melting the CPU? Maybe I'll give it a whirl when I have money to burn. Wen moon?

Hive is kinda like this.

Here on the Hive blockchain, we jacked our clock cycle to the moon, but we don't have the stability to back it up. If every Hive block was getting filled to maximum, Hive would not work... I'm pretty sure of this. Maybe improvements have been made since the Splinterlands debacle, but definitely not enough to even consider increasing the blocksize. We need to hit maximum output and not crash before we reopen this discussion.

Do we even want to increase the blocksize?

Not really... think about it. Wouldn't it be cool if all of a sudden these resource credits were worth actual money and the price of Hive skyrocketed 100x? I'd certainly like to see it. When looking at 20% yields on HBD... we could legit 100x just from that dynamic alone, given a scenario where deep pockets decide to park their money here like they did on Luna (would have been a better example before LUNA crashed to zero). Make no mistake, when Hive spikes 100x... it's going to crash 98% again unless we stop playing it like idiots the way we did in 2017. Fear creates the pump, and it also creates the dump. FOMO and FUD are twins; two sides of the same volatile spinning coin.

So how do we "increase the voltage" of Hive?

- Nodes need to have more resources.

- Code needs to be more efficient.

- Bandwidth logistics need to be streamlined.

From what I can tell, Blocktrades and friends are doing great work on making the code more efficient. With the addition of HAF and the streamlining of the second layer with smart contracts, we may find ourselves primed to explode our growth rate over the next five years. Good times.

Other than that... people running nodes just need to spend more money. Obviously having more resources on your server that is running the Hive network would be better for the Hive network. However, the financial incentives to actually do this are limited.

What is the financial incentive to run a badass Hive node?

Well, if you're not in the top 20, maybe this would be a political play to show the community that you are serious and would like to get more votes to achieve that coveted position. However, witnesses that are already entrenched in the top 20 can often get lazy because the incentives are bad. Why would a witness work harder for less pay? That's what we are expecting them to do, and it's a silly expectation on many levels.

Perhaps what we really need is for blocks to fill up and most nodes to fail before anything changes. Surely, when things don't work is when the most work gets done. The bear market is for building. It would be much easier to get a top 20 witness spot if half of the witnesses' nodes didn't work.

Dev fund to the rescue?

Perhaps we also need to be thinking about the incentive mechanism itself. Is there a way that Hive can employ a solid work ethic that pays witnesses more for the value they are bringing to the network? Does this mechanic already exist when we factor in the @hive.fund? To an extent, this is certainly the case. Honestly, Hive is pretty badass and we are being grossly underestimated. How many other networks distribute money to users through a voting mechanism based on work provided? Sure, it's not perfect, but it's also not meant to be perfect and doesn't need to be perfect.

Bandwidth logistics.

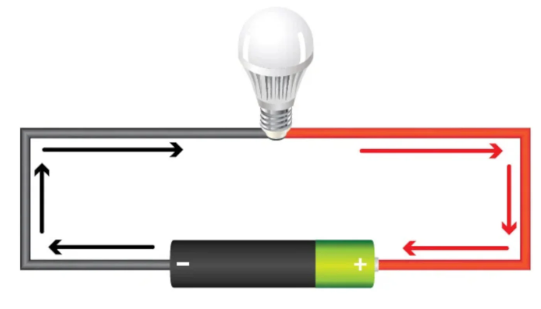

I've talked about this quite a few times in the past, and I believe that the way that Hive distributes information today will look absolutely nothing like how it distributes data ten years from now. Basically all these crypto networks are running around with zero infrastructure... or rather the current infrastructure is a model based on how WEB2 does it. This requires further explanation.

When someone wants data from the Hive network, how do they get it? They connect to one of the servers that runs Hive through the API and they politely ask the server for the information. The vast majority of the time, that node will return the correct information that was asked for.
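Here is roughly what "politely asking" looks like in practice. This is a minimal sketch, assuming a public node at `https://api.hive.blog` and the `condenser_api.get_dynamic_global_properties` JSON-RPC method as I understand them from the public Hive developer docs; swap in whatever node and method you actually use. The helper names `build_request` and `ask_node` are my own.

```python
import json
import urllib.request

# Assumption: one public Hive API node. Any node you trust works the same way.
NODE_URL = "https://api.hive.blog"


def build_request(method: str, params: list, request_id: int = 1) -> urllib.request.Request:
    """Wrap a JSON-RPC 2.0 call in an HTTP POST aimed at the node."""
    body = {"jsonrpc": "2.0", "method": method, "params": params, "id": request_id}
    return urllib.request.Request(
        NODE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def ask_node(method: str, params: list, timeout: float = 10.0) -> dict:
    """Send the request and return the decoded JSON reply from the node."""
    with urllib.request.urlopen(build_request(method, params), timeout=timeout) as resp:
        return json.loads(resp.read())


# Built but not sent here; call ask_node(...) with the same arguments to send it.
request = build_request("condenser_api.get_dynamic_global_properties", [])
```

Notice what is missing: no key, no signature, no payment. Anyone on the planet can send this request as many times as they like.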

There are no rules for who can ask for data. There is no cost and there are no permissions. That's the beauty of the WEB2 Internet. Everything is "free". Most people wrongfully assume that this is also how crypto should operate, but this is not a secure robust way to do business.

If there are no rules for who can ask for data... then how do we stop DDoS attacks and stuff like that? WEB2 has come up with all kinds of ways to haphazardly plug the holes of the WEB2 boat, and they've done alright. But to assume that WEB3 can afford to run the backend exactly the same as WEB2 has been doing this entire time? That's just silly. I mean it's working okay at the moment but it's not going to scale up like we want it to. Again, this is an infrastructure (or lack thereof) problem.

I am self-aware about what I sound like.

There are a lot of devs out there... smart people... who would think I was a complete moron for saying things like, "We should charge people for bandwidth." Because on a real level how is something like that going to scale up? How do we get mass adoption by throwing up a paywall? That doesn't intrinsically make much sense, right? And yet during bull runs Bitcoin sees $50 transactions... and people will pay the cost because it's worth it. Meanwhile, Ethereum was peaking at $200+ per operation.

WEB2 vs WEB3

Most people do not understand the fundamental differences between WEB2 & WEB3. That's because... for the most part, WEB3 doesn't actually exist yet. It's really just an idea; a skeleton without any substance. However, I would argue that it's only a matter of time before Pinocchio becomes a real boy.

Take the DDoS attack vector for instance.

WEB3 will be totally immune to DDoS. Notice how websites describe their 'solutions' as DDoS 'MITIGATION'. Meaning... there's no way to eliminate the threat, only to lessen it. That is the price that must be paid when offering "free" service. There is no other way around it.

HOWEVER!

Think about it another way: Imagine Facebook.

Are you imagining Facebook?

Ew.

Doesn't matter what WEB2 service we are envisioning.

The only requirement is that users log in with a username and password (often enforced by email 2FA). Even though Google doesn't require users to have accounts, it's also a good example because anyone can ask for data anywhere in the world.

Now then, when someone goes to log into Facebook or Twitter or asks Google for search results... what happens? Servers controlled by the associated corporation need to handle the request. They need to provide access to the entire globe in order to maximize their profits. And thus begins the mitigation process that arises from 'free' service to the maximum amount of users while trying to lessen all attacks levied against the service.

Compare this to WEB3

What do we need to login to something like Hive or BTC?

Just like WEB2, we need our credentials.

However, the credentials themselves are decentralized.

The only entity that knows our password (hopefully) is us.

This is the critical difference that allows WEB3 to happen.

When we want to "log in" we don't need permission.

As long as we have the keys and the resources, access granted.

So while something like Facebook can be attacked on multiple centralized fronts (like login servers), things like Hive and Bitcoin can't be. It's not possible to overload the layer-one blockchain with requests because the layer-one blockchain requires resources be spent to use it.

We have to extend this concept to the API and data distribution before WEB3 can truly scale up to the adoption levels we are looking for. Only by doing this can we become robust and eliminate vectors like the DDoS attack. How to achieve such a feat is a topic that could no doubt fill multiple books and requires more theory-crafting than one person has to offer.
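To make the idea a little more concrete, here is a minimal sketch of what metering API requests could look like: every request spends from a per-account budget that regenerates over time, loosely in the spirit of resource credits. This is not how Hive nodes work today. The class names, costs, and regeneration rates are all hypothetical, and a real design would also need signed requests so an account can't be spoofed.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    credits: float       # what the account can spend right now
    max_credits: float   # cap (hypothetically tied to stake)
    regen_per_s: float   # credits regained per second
    last_seen: float     # timestamp of the last request


class MeteredApi:
    """Hypothetical gate in front of an API node: no credits, no answer."""

    def __init__(self, cost_per_request: float = 1.0):
        self.cost = cost_per_request
        self.budgets: dict[str, Budget] = {}

    def register(self, account: str, max_credits: float,
                 regen_per_s: float, now: float) -> None:
        self.budgets[account] = Budget(max_credits, max_credits, regen_per_s, now)

    def allow(self, account: str, now: float) -> bool:
        budget = self.budgets.get(account)
        if budget is None:
            return False  # unknown callers are dropped before any work is done
        # Regenerate credits for the time elapsed, never above the cap.
        elapsed = now - budget.last_seen
        budget.credits = min(budget.max_credits,
                             budget.credits + elapsed * budget.regen_per_s)
        budget.last_seen = now
        if budget.credits < self.cost:
            return False
        budget.credits -= self.cost
        return True


api = MeteredApi()
api.register("alice", max_credits=3, regen_per_s=1, now=0.0)
burst = [api.allow("alice", now=0.0) for _ in range(4)]  # the 4th call runs dry
```

Under this model, flooding a node costs the attacker real resources, and the flood stops by itself when the budget runs out. Whether that trade-off is acceptable for mass adoption is exactly the open question.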

Conclusion

When should Hive increase the blocksize?

When the blocks fill up AND the nodes don't crash.

We've yet to see that happen, and we've been stress-tested multiple times.

To be perfectly frank, increasing the blocksize should be a last resort only to be used when it is all but guaranteed the network can handle it. Even in the case of the network being able to handle it, increasing the blocksize makes running the network more expensive for all parties concerned, so the only reason to do it is if it brings exponentially more value to the network than it costs to enact.

Even so, there are many ways to optimize the blockchain without increasing the blocksize. Hive is working its magic on these fronts in many categories. Streamlined indexes, less RAM usage, upgraded API, database snapshots, Hive Application Framework, second layer smart contracts, decentralized dev fund... yada yada yada.

Development continues.

The grind is real.
